Good Data, edited by Angela Daly, S. Kate Devitt and Monique Mann, will be published by INC in January 2019. The book launch will be on 24 January at Spui25. In anticipation of the publication, we are publishing a series of posts by some of the authors of the book.

“Moving away from the strong body of critique of pervasive ‘bad data’ practices by both governments and private actors in the globalized digital economy, this book aims to paint an alternative, more optimistic but still pragmatic picture of the datafied future. The authors examine and propose ‘good data’ practices, values and principles from an interdisciplinary, international perspective. From ideas of data sovereignty and justice, to manifestos for change and calls for activism, this collection opens a multifaceted conversation on the kinds of futures we want to see, and presents concrete steps on how we can start realizing good data in practice.”

Good Data are Better Data

By Miren Gutierrez

Are big data better data, as Cukier argues? In light of the horror data and AI stories we witnessed in 2018, this declaration needs revisiting. The latest AI Now Institute report describes how, in 2018, ethnic cleansing in Myanmar was incited on Facebook, Cambridge Analytica sought to manipulate elections, Google built a secret [search?] engine for Chinese intelligence services and helped the US Department of Defence to analyse drone footage [with AI], anger ignited over Microsoft’s contracts with US Immigration and Customs Enforcement (ICE) and the use of facial recognition, and internal uprisings arose over labour conditions at Amazon. These platforms’ data-mining practices are under sharp scrutiny because of their impact not only on privacy but also on democracy. Big data are not necessarily better data.

However, as Anna Carlson assures us, “the not-goodness” of data is not built-in either. The new book Good Data, edited by Angela Daly, Kate Devitt and Monique Mann and published by the Institute of Network Cultures in Amsterdam, is precisely an attempt to demonstrate that data can, and should, be good. Good (enough) data can be better not only in ethical terms but also in terms of the technical needs of a given piece of research. For example, why would you strive to work with big data when small data are enough for your particular study?

Drawing on the concept of “good enough data”, which Gabrys, Pritchard and Barratt apply to citizen data collected via sensors, my contribution to the book examines how data are generated and employed by activists, expanding and applying the concept of “good enough data” beyond citizen sensing and the environment. The chapter examines Syrian Archive (an organization that curates and documents data related to the Syrian conflict for activism) as a pivotal case for looking at the new standards applied to data gathering and verification in data activism, as well as their challenges, so that data become “good enough” to produce evidence for social change. Data for this research were obtained through in-depth interviews.

What are good enough data, then? Beyond FAIR (findable, accessible, interoperable and reusable), good enough data are data which meet standards of being sound enough in quantity and quality; involving citizens, not only as receivers, but as data gatherers, curators and analyzers; generating action-oriented stories; involving alternative uses of the data infrastructure and other technologies; resorting to credible data sources; incorporating verification, testing and feedback integration processes; involving collaboration; collecting data ethically; being relevant for the context and aims of the research; and being preserved for further use.

Good enough data can be the basis for robust evidence. The chapter compares two reports on the bombardments and airstrikes against civilians in the city of Aleppo, Syria in 2016: the first by the Office of the UN High Commissioner for Human Rights (OHCHR) and the second by Syrian Archive[1]. The results of the comparison show that the two reports are compatible, but that the latter is more unequivocal when pointing to Russian participation in the attacks.

Based on 1,748 videos, Syrian Archive’s report says that, although all parties have perpetrated violations, there was an “overwhelming” Russian participation in the bombardments. Meanwhile, the OHCHR issued a carefully phrased statement in which it blamed “all parties to the Syrian conflict” for perpetrating violations resulting in civilian casualties, admitting that “government and pro-government forces” (i.e. Russian) were attacking hospitals, schools and water stations. The disparity in the language of the two reports may have more to do with the data that these organizations employed than with the difference between a bold non-governmental organization and a careful UN agency. While the OHCHR report was based on after-the-event interviews with people, Syrian Archive relied on video evidence from social media, which was then verified via triangulation with other data sources, including a network of about 300 reliable on-the-ground sources.

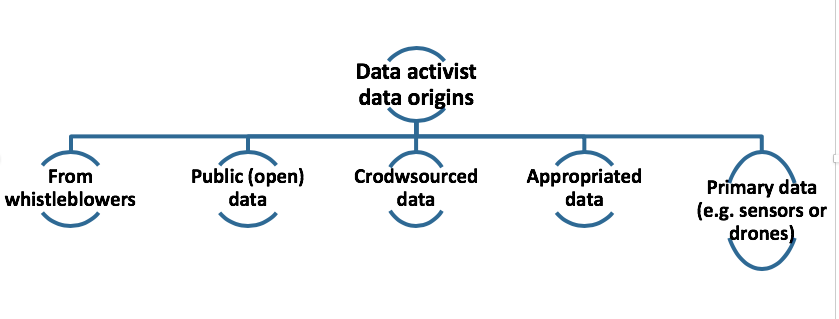

The chapter draws on the taxonomy offered in my book Data activism and social change, which groups data-mining methods into five categories:

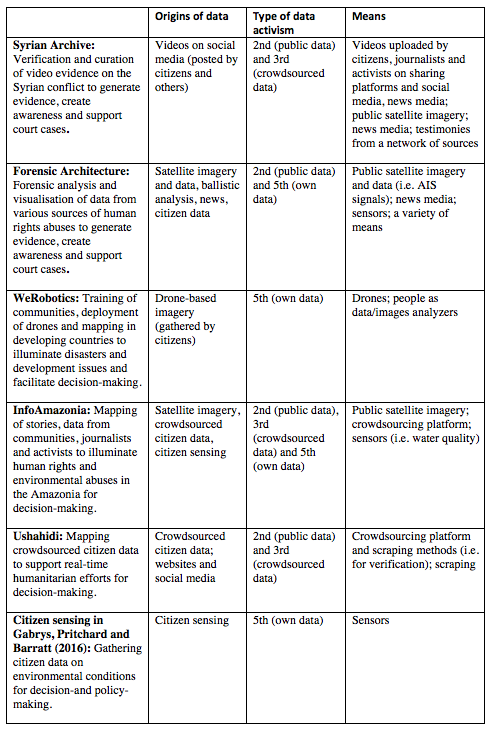

The chapter also looks into the data practices of several activist and non-activist groups to make comparisons with Syrian Archive’s methods. The table below compares the data-mining methods of different data activist organizations. It shows the variety of data methods and approaches that data activism may employ.

Table: Comparison of Data Initiatives by Their Origins

The interest of this exercise lies not in the results of the investigations in Syria, but in the data and methods behind them. What this shows us is that this type of data activism is able to produce both ethically and technically good enough data to generate reliable (enough) information, filling gaps, complementing and supporting other actors’ efforts and, quoting Gabrys, Pritchard and Barratt, creating actionable evidence that can “mobilize policy changes, community responses, follow-up monitoring, and improved accountability”.

[1] The report is no longer available online at the time of writing.