Frontiers are usually zones of trafficking, and the moving boundaries of knowledge are no exception. There you may encounter the weird and adorable creatures known as paradoxes. One of my favorites is the sorites paradox, or ‘paradox of the heap’.

The Paradox of the Heap

What’s a heap? The answer is practical. You can tell a convenience storekeeper ‘give me a heap of nails,’ and you will be served as you wish.

Now, the heap paradox says that if you start removing one nail at a time from the heap, sooner or later you will find yourself with a few nails left, and that won’t be a heap any longer. So, what happened to the heap? When did it disappear?

What's a heap?

A heap is not the kind of thing that needs an exact definition. It belongs to an old and noble family: the imprecise units of measure, an Aristotelian breed. When I ask for ‘a slice’ of pizza – beware, I’m not talking about pre-cut one-eighth-of-circle sectors here – I always get the right quantity, possibly after a breezy negotiation by gestures. We still use a few measures like that, but centuries ago vague units ruled. Farmland was gauged in jugers, that is, by how many days a pair of oxen would take to plow it – don’t care what kind of oxen, what soil, what weather. Lengths were estimated in cubits, one cubit being the linear distance between the elbow and the tip of the middle finger – don’t care whose arm. Then scientific metric systems kicked in and changed everything. It’s hard to imagine now, in the age of consumer laser meters, buying and selling apartments in jugers. Can you picture the revolution?

The question ‘when did the heap turn into a non-heap?’ smuggles the heap ‘from the world of approximation to the universe of precision’, as Alexandre Koyré would say. It requires a precise definition of ‘heap’. We must take a natural number N as a threshold to distinguish heaps from non-heaps. N should be chosen by some convention, but let’s say N = 100. If the cardinality of a set is greater than 100, it is labeled ‘heap’, otherwise ‘non-heap’. N defines the set of heaps and its complement.
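To see how blunt such a convention is, here is a minimal Python sketch of the threshold rule just described. Only the threshold N = 100 comes from the text; the function names `is_heap` and `last_heap_size` and the starting pile of 150 nails are illustrative assumptions.

```python
# Hypothetical illustration of the threshold definition of 'heap'.
N = 100  # threshold chosen by convention, as in the text


def is_heap(nails: int, threshold: int = N) -> bool:
    """A pile is labeled 'heap' only if its cardinality exceeds the threshold."""
    return nails > threshold


def last_heap_size(start: int, threshold: int = N):
    """Remove one nail at a time; return the smallest size still labeled 'heap'.

    Returns None if the starting pile is not a heap to begin with.
    """
    if not is_heap(start, threshold):
        return None
    nails = start
    while is_heap(nails - 1, threshold):
        nails -= 1  # remove one nail; the pile is still a heap
    return nails


if __name__ == "__main__":
    print(is_heap(101), is_heap(100), is_heap(99))  # True False False
    print(last_heap_size(150))  # 101
```

Under this rule the heap vanishes at one single, arbitrary nail: a pile of 101 is a heap, a pile of 100 is not.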

Nice. But it sounds freakish to most of us. Who needs to know how many nails are in a heap? Plus, why on earth should 101 nails be a heap, and 99 not? The N-item heap seems a provision for hardened bureaucrats devoted to hairsplitting. An invention of despots who have society as their own playground of stone.

Each paradox is a danger sign, a symptom of some knowledge disorder. It reveals a mistake in the representation of reality we have built upon perception data. It says we are talking and working by means of a flawed model. And flawed models are hazardous because the action they prompt is directed at fake entities, at things that do not actually exist. Abstractions, projections of our minds. There is a deviation from reality in flawed models, and no one can predict or calculate the cumulative long-term consequences.

One thing is certain: reality’s not the kind of lass you can fool for long without paying a price.

Living Languages vs. Dead Languages

What’s the mistake hidden in the sorites paradox? It is the scammy mixture of two conflicting language molds: a living one and a dead one.

‘Heap’ is a term of the natural language we grew up with. It’s fuzzy and shaded like any product of our living mind. It’s made of the same matter our bodies are made of. The Italian geneticist Edoardo Boncinelli exemplified the physiological fashion with the ‘fovea principle’: since not all the retina of the eye is equally sensitive, just a small zone in the middle of the visual field is in focus, while vision around it wanes into a shadowy realm between the seen and the unseen, the visible and the invisible, towards the unknown, the indefinable, the nonexistent. ‘Heap’ gracefully adapts to the uncertainty of life. It’s social, distributed, mutating, evolving along with subjective and collective experience. It’s poetry-friendly, unlimitedly available to everybody as a metaphor.

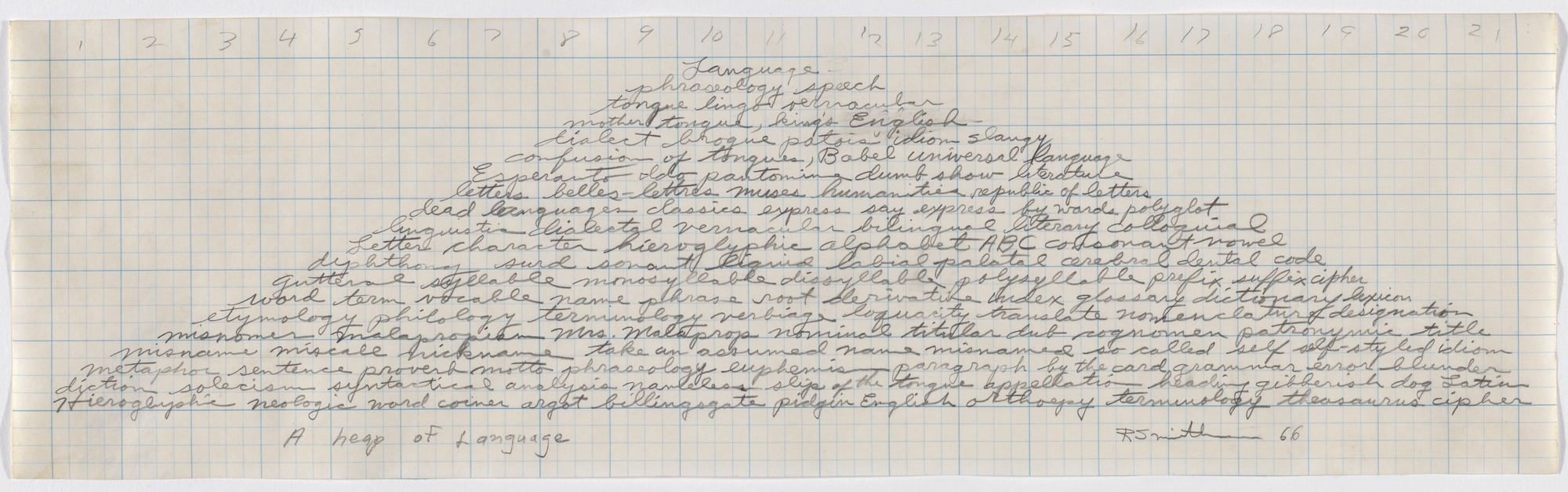

Robert Smithson, 'A Heap of Language' (1966).

The number N, on the contrary, belongs to the exact code of mathematics. It’s an artificial language which was given an agreed death, according to Roland Barthes’s Myth Today, in order to eliminate ambiguity: ‘mathematical language is a finished language, which derives its very perfection from this acceptance of death’. Playing dead, math language avoids the interpretation and ambiguity characteristic of living things. It can build magnificently firm and connectable structures that can objectively describe regularities in the physical world. However, it is a continuous tautology. Its sentences are as stiff as they can get. Nuances are zero: the opposite of physiology.

When living and dead languages are combined, the vague and the exact get scrambled. The results are paradoxical: the progeny of those unnatural unions is neither exact nor rough; it may be poetry, but it purports to be science. In fact, the result is poetry disguised as science: a chimera, a fabrication, a lie. It might even be a beautiful delusion, except its neutering mask is a hypocrisy created by fear of chaos and imperfection.

Such mixtures of living and dead languages occur almost everywhere in our number-based culture. Examples are countless.

Management manuals teach how to evaluate customer satisfaction (or CSAT) with formulas like:

Experience – Expectation = Satisfaction

perceived quality / total cost ≥ expected quality / estimated cost

where the arithmetical operators, agents of precision, and costs, quantities by definition, are mingled shamelessly with nebulous terms of subjective psychology – quality, perception, expectation, experience, satisfaction – yielding a grotesque set-up.

CSAT and NPS always involve a translation of complex individual experiences into a homogeneous 1-to-N rating in surveys, which is another mixture of living and dead languages. Living and dead languages are similarly mixed in an A-to-F vote at school. They are mixed when a state labor law is rendered with OECD’s EPL index. They are mixed when the reliability of a bank or a bond is elaborated by a credit rating agency as a triplet of letters to be fed to savers. When the welfare of a whole country is assessed by a dumb number. When something or someone is appreciated by the number of likes on a social network.

For the Love of Numbers

Anyone can see that all these measurements are simply ridiculous, because numbers lose relevance as we move up the scale of complexity. Even a historian of mathematics like Giorgio Israel knows that ‘when it comes to complex systems, the right language is English, not mathematics’.

Human-made systems are at the top of the complexity scale. Still, after the sublime accomplishments of Galileo Galilei and Isaac Newton in the early modern period, the awe of both scientists and peasants before the miracles of mathematics, nourished by the original Pythagorean mystique of the Number, has never stopped growing.

Math knows its own limits perfectly and has even proven them with its own exactness, as in Gödel’s theorems. In a famous 1960 paper, the physicist Eugene Wigner confessed his astonishment about the ‘unreasonable effectiveness of mathematics in the natural sciences’. However, Bertrand Russell, quoted by Wigner, in turn quoted by John Barrow in The World Within the World, did warn that ‘physics is mathematical not because we know so much about the physical world, but because we know so little: it is only its mathematical properties that we can discover’. Russell’s words spotlight a fact that is often omitted: mathematics is limited, and knowledge making use of it has the same limits.

Unfortunately, those limits are overlooked by the host of enthusiastic pundits who apply math in the most trivial ways and in the most unsuitable fields. Academics and decision-makers are terribly and incautiously attracted to the abuse of math in the social realm. Why are they so fond of it? I believe there are three main reasons:

Reason 1: Math is an anxiolytic.

A pass of math, and all gets orderly and uncluttered. Forever.

Doing math is like taking a break in a parallel universe where everything is transparent. No delusions, no cheats, no shady messages, no moral blackmail, no innuendos, no false faces, no false bottoms.

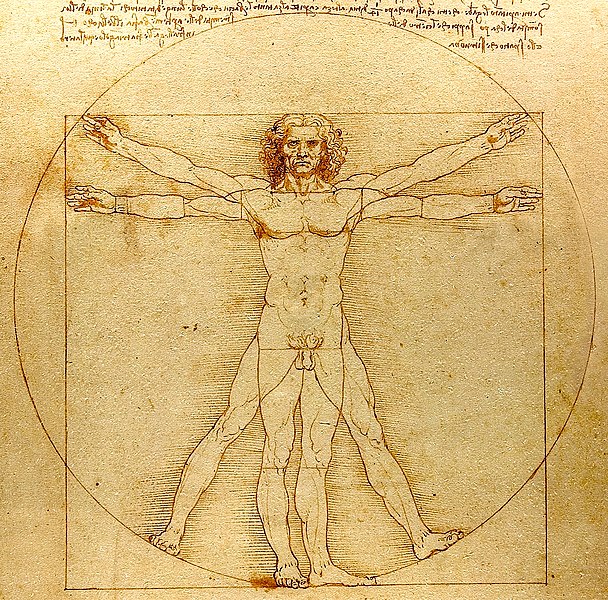

Some psychotherapies require patients to jot down personal dreams and feelings associated with experiences, for the implicit rationality of language to give a tangible form to the inner chaos. Well, translating a phenomenon into the language of math has a similar effect, except far more intense, since math is the pinnacle of synthesis and universality. The quest for mathematical models of the world is in the first place the yearning for a balm, the sublime kind of relief we feel before Leonardo’s Vitruvian Man perfectly inscribed in a circle and a square. The ecstasy of supreme order.

Leonardo da Vinci, 'The Vitruvian Man' (ca. 1490).

Reason 2: Math is a delightful simplifier of human intricacies.

Cutting out irrelevant details from an experimental setting is part of the modern scientific method. Galileo Galilei called it ‘difalcare gl’impedimenti’ – old Italian for ‘deducting hindrances’. Starting from intuition, a scientist makes a map of the world deciding what to keep in the picture, and put to the test, and what to leave out and ignore. Deducting hindrances is an art that must be guided by an extraordinary sensibility: if you leave out necessary details, viable hypotheses might be ruled out, yielding bad theories and fruitless caricatures of reality.

It is obvious that few people can master the art of ‘deducting hindrances’ when it comes to human and social complexity. Instead, caricatures have become the norm. For instance, consider the agent-based simulations that study social dynamics on computers. Social actors like citizens, workers, whole organizations and firms are simply mocked up with code objects made of a set of numbers: e.g. a figure representing an abstract skill, a figure representing improvement over time, a set of skill-figures representing an enterprise, and so on. Why go out among real people when you can run a program, sit back and wait for what comes out? The same applies to the hip digital sociology that carries out research entirely within social networks, whose business logic displays convenient numerical properties by design.
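How thin such a mock-up can be is easy to show. The following Python sketch is a deliberately crude toy, not taken from any real simulation framework: the class names `Worker` and `Firm`, their fields and the sample values are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Worker:
    """A 'person' reduced to two numbers (hypothetical toy model)."""
    skill: float        # one figure standing in for an abstract skill
    improvement: float  # one figure standing in for learning per time step

    def step(self) -> None:
        # The whole 'life' of the worker: the skill figure grows.
        self.skill += self.improvement


@dataclass
class Firm:
    """An 'enterprise' reduced to a set of skill-figures."""
    workers: List[Worker] = field(default_factory=list)

    def total_skill(self) -> float:
        return sum(w.skill for w in self.workers)

    def step(self) -> None:
        for w in self.workers:
            w.step()


if __name__ == "__main__":
    firm = Firm([Worker(skill=1.0, improvement=0.5),
                 Worker(skill=2.0, improvement=0.25)])
    for _ in range(2):  # run the program, sit back, wait for what comes out
        firm.step()
    print(firm.total_skill())  # 4.5
```

Everything that does not fit into those two floats per person is, by construction, outside the model.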

Such caricatures of women and men provided by formal models of human beings and human groups of course do not give us a better or deeper understanding of ourselves. But that’s not a problem for their creators, because they were never meant to do that. The sole purpose of formal models is to render humans mathematically tractable.

Math and logic make us blind to ourselves, in so far as theory gives visibility and attention solely to the quantifiable and computable aspects of our reality, and hides the rest, possibly forever. As a matter of fact, mathematical representation lessens our comprehension of humans. Even statistics, which oftentimes can achieve an interesting bird’s eye perspective on social phenomena, fails dramatically the moment we need to face individual cases. And this time always comes. As Stephen Jay Gould put it, when diagnosed with cancer we don’t give a damn about the general survival chances: we just want to know what our own chances are.

Widespread reductionism is a result of such abuse of mathematics. I mean that reductionism is not an intrinsic property of math. It is the mindset of people who use math that way.

Reason 3: Math gives power.

A bit of math is enough to fake science, most of the time. And ‘science’ means power, because it means authority, trustworthiness, certainty – no matter how crazy, brutal and unrealistic a theory might be.

Math gives power also because it conveys the feeling of a priori: the dream of an absolute, eternal truth, liberated from human subjectivity, relativity, affections, limits, contradictions. Since Euclid, math and geometry have always been considered transcendent stuff, something independent of our passing bones. The proof of a magical accord existing between man’s intellect and the world, or between man’s soul and God. At least, until non-Euclidean geometry came about. Nevertheless a priori survives as a Platonic ideal of de-humanized perfection. The aim of relieving humans completely from decision loops, in order to achieve an alleged total objectivity, seems closer and closer with algorithms and Artificial Intelligence.

The Disease of Abstraction

The pursuit of a priori and objectivity, and the imitation of science, do not necessarily need proper mathematics and logic. They just need formalism. They need a dead language made of terms which have, or pretend to have, exact definitions.

In fact, the obsessive quest for a perfect and objective language, turning any reasoning about the world into a safe and clear-cut computation, runs through the entire history of Western thought. Abstraction is the keystone of this quest and of its culture.

Abstraction is the power of condensing many instances in a single symbol or concept: perhaps the most wonderful capability of our brains. It’s been great, but now we must admit it’s gone too far. Hypertrophy of abstraction can be regarded as the main knowledge disorder of our times.

The word ‘abstraction’ comes from the Latin abstrahere, that is to separate, to pull apart, to draw away from. Over the past centuries, using formal, dead languages, we have built abstraction stairways in all fields of knowledge; using abstraction stairways, we have built more dead languages. And every step up in abstraction meant one more step away from matter, one more bit of a divorce from reality, one little further decline in our sense of boundaries.

Outside of sciences that can define their own limits with exactness, a deregulated and math-empowered abstraction has produced a heap of pseudo-sciences. It has produced arbitrary pseudo-exact definitions of everything, including ‘heap’, ‘forest’, ‘utility’, ‘pleasure’, ‘reputation’, ‘friendship’, and a lot of other crucial and sacred things. It has produced fearful concoctions of living and dead languages, such as a ‘non-experimental science’ – this is how the Nobel laureate Gérard Debreu defined mathematical economics, and how he taught it to Milton Friedman and many influential others, who in turn taught it to heads of state and to the whole world.

As Marx first noted in relation to money, the abstraction introduced by the intermediation of numbers and formal codes breeds a lot of negative social consequences. We reached an acme of that abstract free climbing when banks learned to multiply credit indefinitely by means of multi-level derivatives known as Asset Backed Securities, entirely made-up assemblies of other derivatives and titles of different types and origins. Since their price could not be traced back to their sources, it had to be made up itself: Black, Scholes, and Merton provided the charming math to do the magic, again inspired by physical systems. However, as we know, reality strikes back sooner or later. The 2008 crisis started exactly where the math-powered abstraction reached the most extreme degree of breaking up all bonds between nominal credit and real wealth.

Such long flights of abstraction always have this negative side effect: they abolish the awareness of risks and limits. They drive people out of control. Besides finance, it’s happening for example in computation and data transmission. They have evolved so much, and become so pervasive and invisible, that their very nature has seemingly morphed into a transcendent state. The common impression is that storage and computing power are endless commodities, that we can save unlimited data on the cloud (if it’s only numbers, they can grow ad lib), that a YouTube video can be enjoyed at no other cost than one’s smartphone battery consumption. But this is far from the reality.

Abstraction has even worse consequences, though, when it is applied to our relationships. Abstraction pushes our neighbors away. So far away that they appear as objects. People become things. Our natural empathy is shut off, dissolving the invisible glue of society.

I’ll take the phenomenon of fighting as a clarifying example:

Abstraction level 0 of fighting is hand-to-hand combat. You can smell the sweat and blood of your enemy, you can see the pain and fear in his eyes, you can feel bones break and yells pierce your ears. That’s only for the brave and fierce. Matters of life and death.

Primitive weaponry, armor and mounts bring fighting to abstraction level 1: there’s a small distance now. Fighters do not always feel the flesh of the other body or look into the other’s eyes. Their senses are a bit less captivated.

When firearms get involved, we get to abstraction level 2. The contenders can be so distant that they hardly see each other. They have a very faint perception of each other’s body, their senses are out of the question. If they can’t see each other’s eyes, they have no awareness of each other’s feelings. And an odd novelty appears: murder becomes easy. It is now within range of many: not only of criminals and psychopaths who are, so to say, naturally abstracted, but also of average folks that could never think of killing in a closer span, at lower levels. Here, at level 2, empathy starts to vanish from the picture.

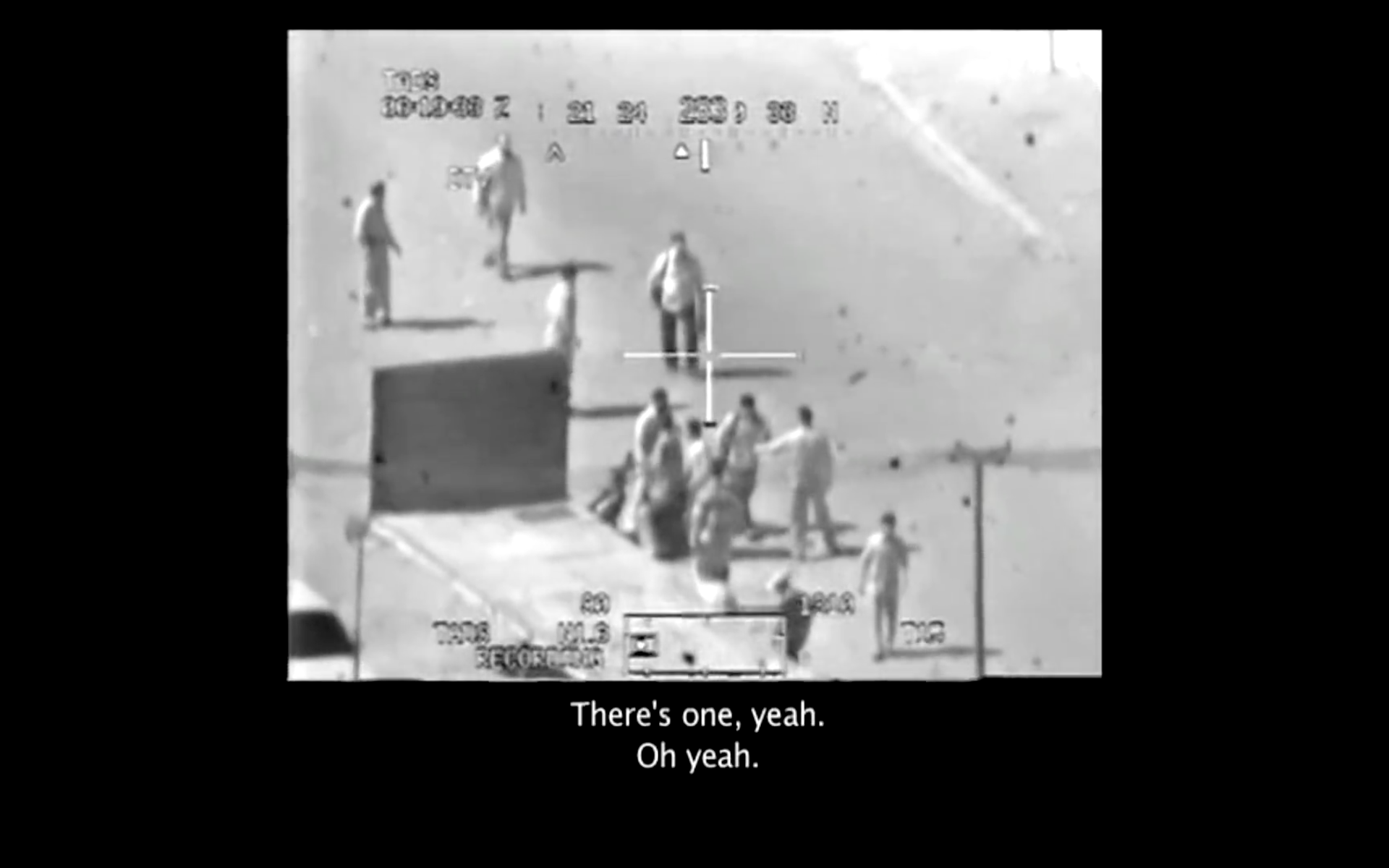

Going up to level 3 and above, things just get worse. Level 3 is, for example, the Crazyhorse Apache attack made famous by Wikileaks. Everything here looks and feels like in a low-fi shoot’em-all videogame. The real and the virtual are indistinguishable. Slaying is fun and silly.

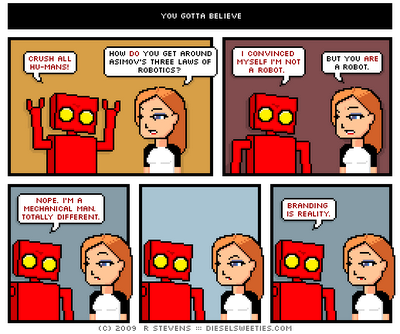

Further up along the stairway of fight abstraction, at levels 4 and 5, human will is abstracted too, and control is taken over by algorithms and automation. We have ‘systems which could detect possible targets and attack them without human intervention’. Time can be deferred at pleasure with the ‘fire and forget’ model. The start and end of an action can be drifted apart at will. The ‘enemy’ is just a snippet of code – no different from a ‘worker’ in an agent-based simulation of computational sociology. Our natural empathy and affections are cancelled. Our bodies are cancelled. It’s a world of robots, in every way.

Advanced Psychopathology

In our ‘advanced society’, people are intermediated by more dead languages than they may be aware of.

The first and foremost is money, of course, in its manifold guises. Then we have formal models, contracts, protocols, legislation, regulations, standards, metrics, indices, KPIs. We have statistics, big data from infinite sources. We have certifications, signage, brands. Evaluations are virtualized and delegated to private third parties: credit reliability is spelled out by the rating agencies, students’ marks are assigned by optical scan of multiple-choice tests, traffic fines are levied by unmanned cameras and automatic processing and delivery, job applicants are filtered by credit records and other arbitrary scores. Many relational activities, such as reputation and trust, conversation, emotional interaction, politics, business, etc., are proxied by digital platforms and programmed functions.

If in Marx’s times money was the Great Abstractor acting as a quantified broker between one person and another, and also between one person and his/her own desires, today this role has been taken over by software. Digital communication tools and social media, with their apparatus of axioms and conventions hidden in secret proprietary business logics, make it manifest.

This rising intermediation of codes has two downsides.

Downside 1: falsehood.

As Umberto Eco stated in Trattato di semiotica generale, ‘semiotics is the discipline which deals with anything that can be used to lie’. This is in fact the most noteworthy and underestimated property of languages. As a consequence, the more we connect to each other through languages – in the absence of body – the more lies are among us. We are prey to falsehood. Falsehood becomes a structural property of society. Lying is typical of languages in general, and a fortiori of dead languages used in the social context. Here is the paradox: against the danger of lies, we crave numbers; but the more the numbers, the stronger the lies. A number is so simple, so eye-catching. So easy to obtain, so easy to falsify.

When competitors in an exam or bid are ranked by a mere number, it’s easy to adjust the ranking while keeping an outward ‘scientific’ fairness, provided by the abstruse formulas used to yield that number. We don’t realize that, if judgment had been argued through discourse, counterfeit would be much harder. Statistics, too, can be forged with unlimited ease by playing with cross-sections, and it is proven that not even experts can figure them out exactly.

The plague of fake news, undermining democracy in unprecedented ways, is the child of the digital that can be easily counterfeited. The overall fake traffic on the internet is reaching the infamous point known as ‘the Inversion’, when bot-generated traffic will exceed the genuine human-generated one. The worst aspect of this tendency is, according to Max Read, that ‘the “fakeness” of the post-Inversion internet is less a calculable falsehood and more a particular quality of experience – the uncanny sense that what you encounter online is not “real” but is also undeniably not “fake”, and indeed may be both at once, or in succession, as you turn it over in your head’.

Downside 2: inhumanity.

Formal intermediation works as a layering of abstractions. It’s like we’re having thick, cold, opaque glasses between us. They block the main vehicles of mutual understanding, our eyesight and other senses. These coded channels are narrow holes through which our natural physical awareness of one another is filtered and reduced. Instead of physical presences they give us numbers, symbols, small sets of predefined commands to interact with one another. They give us news feeds, smart replies, like buttons, video stories, photo filters. Abstractions tear us apart and provoke us to see one another as things.

Now, seeing persons as things is not normal for humans. It’s a mental impairment, among the key features of psychopathy and antisocial personality disorder.

Mayo Clinic defines: ‘Antisocial personality disorder, sometimes called sociopathy, is a mental condition in which a person consistently shows no regard for right and wrong and ignores the rights and feelings of others. People with antisocial personality disorder tend to antagonize, manipulate or treat others harshly or with callous indifference. They show no guilt or remorse for their behavior.’

Have you ever noticed this kind of behavior? It’s all around us. It’s even us, frequently. It’s been promoted for centuries. Hard competition, stakeholders’ interest, limited liability, private profit and public losses, blind abuse of commons, blind exploitation of natural and social resources.

Reading Robert Hare’s Checklist of Psychopathy Symptoms, one may be surprised that physical violence is not central. The core trait of an antisocial person, research finds, is the lack of empathy, along with pathological lying, shallow affect, and lack of realistic, long-term goals caused by a deficient influence of emotions in the brain. We have seen them all as the math-ruled society’s traits, and that is no coincidence. Mathematical variables are indifferent to their content. Theories modeling humans as variables are indifferent to humans, so they are inherently antisocial.

Each time a person is represented by a variable or a number, violence is present.

Why would someone produce a theory about humans which is indifferent to humans? There is only one possible answer: because he or she suffers from the same impairment in the act of drawing it up. We should not forget that the conditions of academia, where most theories are born, can induce or worsen estrangement: which is another paradox, since those theories are supposed to unravel our reality.

We must also ask: why would someone find that inhumane theory appealing, and want to apply it? I see various answers. Surely because adopters themselves may suffer from the same disease: research reports that antisocial attributes are found among top managers more frequently than in average people, not to mention tyrants and strong regime lovers. But also, because formal theorists have a tremendous influence due to mathematical simplification, imitation of science, and academic career dependencies. Finally, because formal theories are perfect to exert absolute power in a soft fashion.

The bottom line is, through the transmission chain that goes from theory to applications, the pathological characters of individual antisocial personalities become the generalized characters of our contemporary society. And the escalating abstraction of relationships fuels the incessant decay.

At the end of the 19th century, people like Walras, Jevons, Menger, Pareto, Veblen, were inspired by Jeremy Bentham’s moral algebra, which accredited a series of picturesque analogies between human sentiments and arithmetic: e.g. that ‘pleasure’ and ‘pain’ were respectively positive and negative quantities which could be added and subtracted, or that ‘utility’ could be universally measured as a physical quantity and denoted by a number. They expanded those ideas into a mathematical theory of society, aesthetically based on physics: neoclassical economics. There was no scientific foundation for this venture, but Reasons 1, 2 & 3 were strongly in force. Later, thanks to Von Neumann and Morgenstern, economics ended up as a game of maximization for totally rational agents with infinite information, whose decisions are triggered according to an ever-increasing utility function. No trace of affections survived this paradigm shift. No trace of humanity.

The enforcement of this caricature over reality was lubed by the centuries-old headstrong propaganda in favor of egoism as a moral virtue and a constructive force, dating back to Adam Smith. The combined effects of the abstraction induced by the growing use of numbers and math, on the analytical side, and the epic of selfishness on the narrative side, have helped to hinder, decrease and sometimes deactivate social agents’ natural empathy on a very large scale. Exposed to this force field, society could surrender to a crazy theory which prescribes taking decisions according to simple arithmetic conditions: if N > 0 do this, otherwise do that. If the forecast price of good X is greater than the present price, retain stocks; otherwise sell. If the growth rate of a forest is greater than the interest rate, keep it; otherwise cut it. And so on.
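Written out as code, the two rules quoted above fit in a couple of lines each. This is a minimal Python sketch; the function names `stock_decision` and `forest_decision` and the sample figures are illustrative assumptions, not part of any actual economic model.

```python
# Hypothetical sketch of the 'if N > 0 do this, otherwise do that' rules.


def stock_decision(forecast_price: float, present_price: float) -> str:
    """Retain stocks if the forecast price exceeds the present price, else sell."""
    return "retain" if forecast_price > present_price else "sell"


def forest_decision(growth_rate: float, interest_rate: float) -> str:
    """Keep the forest if it grows faster than the interest rate, else cut it."""
    return "keep" if growth_rate > interest_rate else "cut"


if __name__ == "__main__":
    print(stock_decision(forecast_price=12.0, present_price=10.0))  # retain
    print(forest_decision(growth_rate=0.02, interest_rate=0.05))    # cut
```

Nothing about the forest enters the second function except one number to be compared with another.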

You may think this concept of a decision-maker is closer to a machine than to a human being. But to be honest, it is not even suitable for a machine: it depends on unlimited growth, whilst nothing in the physical world is unlimited or can grow ad libitum. Only abstract numbers can.

Social theory based on abstract numbers has proven itself a monstrous instrument of power. It’s made by free riders for free riders, but its inhumanity is protected by the mask of science and modernity. Strong motivations support it: fear of chaos and anxiety, human simplification and mathematical tractability, the ideal of objectivity and the pantomime of certainty. As a ‘non-experimental science’ it refuses any reality check, and paradoxically this is another reason why it’s so difficult to root it out, like religions and delusional disorders.

Is Harm Calculable?

In the 1940s, when computers weighed fifty tons and Alan Turing had not yet invented the Test which gave birth to AI, Isaac Asimov defined his famous Laws of Robotics. This old cornerstone of science-fiction has become a powerful meme still shining with an incredible allure today, in the full swing of the second-wave AI.

Here goes the First Law:

‘A robot may not injure a human being or, through inaction, allow a human being to come to harm.’

Smells like sacred books. From a literary standpoint, its glamour is undoubted. It revamps the archetype of godly commandments with a fancy subject shift: here we are the gods, and robots are our creatures. Satisfaction guaranteed.

But besides its capacity of fueling stories, the First Law can reveal a quintessential gap between humans and machines. The boundary between what is calculable and what is incalculable.

What does it mean to ‘harm’ or ‘injure’ somebody?

To start with, our competence about ‘harm’ is so vast and so many-faceted. We can just hear signs of harm, without seeing, and get the pain. Harm can bear no visible trace or sound, and still be devastating. A landmark study by Tania Singer, along with many others using fMRI, shows that when a normal person sees a needle stinging someone else’s hand, her premotor cortex commands her own hand to pull back, and pain-related areas in her brain are activated – almost as if she were the injured person.

Our intuitive understanding of ‘harm’ comes from the body: it is a sort of direct communication between bodies. We can comprehend ‘harm’ because we have a body that suffers. Pain is a common experience since our early days. Since then, we’ve been trained to label moments of pain with the right words. We automatically recognize signs of suffering surfacing in our neighbors, because we share a similar body and brain equipped with mirroring systems. The facial and body expressions of pain, fear, joy, sadness, revulsion, immediately provoke degrees of mimicry in the onlookers, and induce in them interior states akin to the observed behaviors. This was properly called ‘intersubjective resonance’ by Giacomo Rizzolatti and his colleagues, the discoverers of mirror neurons.

Intersubjective resonance is the social environment we live in. It is our common daily bread. And it’s crucial for survival. Our standard mirroring equipment is an obvious result of evolution: if our caregivers missed that ability, they could not recognize our need for food and we’d be doomed at birth. Not even culture could evolve, since learning is mostly imitating.

As clearly illustrated by Simon Baron-Cohen in The Science of Evil (2011), our sensitivity to the suffering of others, and thus our inhibition to harm them, depends on an empathy circuit in the brain, made of about ten areas. Like most abilities, that sensitivity is conditioned by genes and can be temporarily or permanently reduced by several external factors: propaganda, authority, retaliation, one’s own pain, distraction, fear, etc. As Hannah Arendt taught us, harm virtually disappears when it is split into small and trivial tasks. On the other hand, empathy can be trained and enhanced by education.

Once we have been educated to distinguish among the various kinds of suffering that we and others can endure, we learn to use a more or less rich set of labels to indicate them. Like ‘heap’ and ‘utility’, those words belong to our fuzzy, vague, living language. They are exterior, since words are younger than feelings and can only approximate them. In fact, it’s not easy to explain how we feel, especially when various emotions mix together. It requires crossing two very steep thresholds: first, bringing feelings up to consciousness, then putting the right words together in correct sentences. A wasteland of darkness for most of us. After all, why do we admire and honor great writers? Exactly because they have that gift: revealing the inner hotchpotch as we never could ourselves.

Thanks to our standard mirroring equipment, though, that frightening effort is not needed: embodied simulation is the immediate and automatic way the brain does all the work, below the consciousness line, producing a guess about someone else’s sensations and intentions, and trying to act accordingly. Except for a small population with a broken empathy circuit, this is how we understand when somebody is feeling pain or relief, and how we know whether we are harming or helping.

The more different the bodies, the less the mirroring, the harder the understanding. This is a general rule that can be tested at home. Confront yourself with an orangutan, a dog, a beaver, a frog, a spider, a jellyfish, a sea cucumber: ask yourself what it’s like to be each one of them, try to imagine it, analyze your feelings about it. You will see chances of empathizing get smaller and smaller down the chain. The similarity rule applies to human-to-human relationships too, as many hues of racism testify.

What happens when machines enter this world of natural body-to-body relations?

A chasm appears, due to the absence of a minimum shared phylogeny between humans and machines. We have nothing in common. Machines are radically foreign to living bodies. They have been forged for a purpose, with the least ambiguity possible: that is, they are embodiments of a dead language.

To enforce Asimov’s First Law, we must command machines not to harm us. But they have no living body and no evolution-wrought standard equipment for mirroring and tuning in to one another. Therefore we must describe explicitly to them what it means to be harmed and relieved. And we’d better include our deepest fears, our most subtle sentiments, our most heartfelt issues, all those stupid violations that throw us into despair.

How can we ever formulate all those fears and sentiments in order to teach them to machines? We are required to cross the two very steep thresholds of awareness and verbalization. And as if that were not challenge enough, the task is even greater: machines require a dead language and absolute exactness. So, there will be one more threshold: to formalize and translate it all into some logical lingo.

This holds no matter what form the description may take. It may be made of explicit rules, as in the expert systems of the first AI era. Or we may use the latest trend, machine learning: produce a large number of examples of harm, and feed them to a neural network to train it. To put together such a training set, we would have to find a formal way to represent a discrete series of appropriate situations. For instance, a dictionary of pictures portraying facial expressions or body postures related to suffering, similar to Paul Ekman’s Facial Action Coding System but extended to the whole body.

This new AI plan, though, would run into big hurdles as well. Such a dictionary would be very difficult to create. The continuum of physical expression is irreducible to a discrete list without a loss of (possibly critical) information. And since the association between signs of pain and actual pain would rest on the accounts of the test subjects, it would be limited to the conscious domain. Any protection from harm caused on a subliminal level would be excluded.
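The loss involved in reducing a continuum to a discrete list can be made concrete with a toy sketch. Everything in it is an assumption made for illustration: the 0-to-1 ‘intensity’ scale, the five-entry dictionary, and the function names are hypothetical and come from no real coding system.

```python
def quantize(value: float, levels: int) -> int:
    """Map a continuous value in [0, 1] to one of `levels` discrete labels."""
    if not 0.0 <= value <= 1.0:
        raise ValueError("value must lie in [0, 1]")
    # The top edge (1.0) is folded into the last label.
    return min(int(value * levels), levels - 1)


def reconstruct(label: int, levels: int) -> float:
    """Best guess for the original value: the midpoint of the label's bin."""
    return (label + 0.5) / levels


LEVELS = 5  # hypothetical size of the discrete 'dictionary'

# Two clearly different (made-up) intensities on the continuous scale...
first, second = 0.41, 0.59

# ...receive the same label, so the difference between them is gone.
same_label = quantize(first, LEVELS) == quantize(second, LEVELS)

# Reading the label back cannot recover either original value.
error_first = abs(reconstruct(quantize(first, LEVELS), LEVELS) - first)
error_second = abs(reconstruct(quantize(second, LEVELS), LEVELS) - second)
```

Enlarging the dictionary shrinks the error but never removes it, and nothing tells us in advance whether the part thrown away was the critical one.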

Above all, ‘harm’ would not be estimated on an individual basis. Each of our bodies has its own relationship with ‘harm’. A general definition of ‘harm’ would be a statistical assembly, like the general genome: good for learning general lessons, unable to cure a given patient. To evaluate the chances of doing harm to one or more persons, only individual definitions should be considered, in the same way that only personal genomes can be considered when it comes to forecasting and maintaining the health of individuals.
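A toy sketch may help to picture the gap between a statistical definition and individual ones. The names, the 0-to-10 scale and the thresholds below are invented for illustration, and reducing a person’s relationship with harm to a single number is itself exactly the kind of simplification under discussion.

```python
from statistics import mean
from typing import List

# Hypothetical personal thresholds on an arbitrary 0-10 scale:
# above this level, a stimulus counts as harm for that person.
personal_thresholds = {"Ada": 2.0, "Ben": 5.0, "Cleo": 8.0}

# The 'general definition': one threshold averaged over everybody.
general_threshold = mean(personal_thresholds.values())


def misjudged(stimulus: float) -> List[str]:
    """Return the people for whom the general rule gives the wrong verdict."""
    general_says_harm = stimulus > general_threshold
    return [
        name
        for name, threshold in personal_thresholds.items()
        if (stimulus > threshold) != general_says_harm
    ]
```

With these made-up numbers the average threshold is 5.0, so `misjudged(4.0)` returns `['Ada']` (she is harmed although the general rule says nobody is) and `misjudged(6.0)` returns `['Cleo']` (the rule reports harm that she does not experience).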

This observation, by the way, comes as a reminder of an almost forgotten truth that morals in the age of neurobiology will hopefully re-assimilate: abstract terms – statistical or general, not individual – are nonsense when dealing with human beings.

There may be another trick to arrange a formal characterization of ‘harm’. If the neurobiological structures and brain states associated with an individual’s qualia of grief, sorrow, pain, etc. were known, then we could make a ‘harm map’ showing the forbidden lands. It’s a theoretical possibility, although an extremely cumbersome one, since the machine would have to know the person’s brain states at any given moment. It’s difficult to say whether that knowledge will ever be attained, and I’m afraid that many insuperable issues will arise along the way – for example, an unbridgeable distance between our simple words and the complexity of brain states.

What is the moral of this story? If you really believe that some machine, fed with datasets derived from some description of your subjective inner states, will be able to compute what is harmful to you and what is not, well, you must be a science-fiction writer from the last century.

All present evidence says it is unlikely that we can represent embodied concepts like ‘harm’ and ‘utility’, ‘good’ and ‘bad’, etc. so precisely that they can be rendered in mathematical terms and fed to a robot, so that the robot avoids jeopardizing us and those we care about. This is not even to mention bugs and their possible deadly consequences. Those key words (harm, utility, good, bad, etc.) are nothing more than feeble attempts to describe neurobiological mechanisms that appeared way before natural language crawled in. And if even natural languages fail, dead languages clearly stand no chance.

Having machines concretely and mandatorily abide by Asimov’s First Law is becoming much more than a cool fictional idea these days: the surge of self-driving cars, for example, makes it a necessity. The more we delegate our satisfaction and safety to machines, the more we have to define satisfaction and safety with mathematics. But it’s not just that. The new myth of AI, promising massive automation extended to high-level cognitive tasks, needs a mathematical definition of all our social stands: justice, freedom, equality, merit, accountability. Unfortunately, as I said, most of the work in that direction is done: freedom is a number (choices), success is a number (money, possessions, likes, followers, etc.), merit is a number (reputation, marks), satisfaction is a number (1 to 5), good life is a number (years).

How much is one cloud plus one cloud? How much is one hope times one remorse?

The number-driven culture is showing all the symptoms of a cultural drug addiction: permanent anxiety, the illusion of control, and a vicious circle, steadily reinforced. Objectives are defined in numbers and results come in numbers. Fewer and fewer not-a-number or incalculable factors are considered in decision-making processes. In the attempt to forecast the dynamics of complex living systems – such as a human society in the economic context of decreasing resources, or a guy under scrutiny for a job – we put more and more trust in the methodological capacity of computers and automation. Yet networked computers themselves collectively make up a terrifically complex system, artificial, pervasive, growing at a numbing pace. This simply means that relying on computers to handle complexity is shifting our faith to another religious creed: we must pray they will not get (us) wrong, because we cannot be rationally or scientifically certain about it.

If Evgeny Morozov’s ‘technological solutionism’ is the main ideology today, the way to tackle problems of any kind, then computation and calculability are at the core of this reductionist ideology. Meanwhile we deliberately neglect and give up the infinitely wider realm of the incalculable. We lose our body-rooted social abilities and the judgment of emotions, developed over millions of years of evolution. Deaf ears and robot-like treatments, which make us feel ignored, miserable, and merciless, become the rule. The kind of violence that does not leave visible signs, the weapon of perfect crimes, is available to everybody. Our social catastrophe is more than certain.

The epistemological frontier between the calculable and the incalculable is apparent from a scientific standpoint. But it is a political and ethical frontier, too.

If decision-makers believe in computable, math-modeled social theories and believe these theories are the product of proper science; if they believe that some fantastic a priori objectiveness about us can be reached through those means; if they don’t perceive the risk in excluding non-quantified factors from decision processes; if they cannot, or fear to, take incalculable human factors into consideration first thing, through their own sensibility and inventiveness; if they believe that math and logic are sufficient to represent our most heartfelt interests; if they believe this is what ‘modernity’ and ‘innovation’ are all about; if they believe that the future is data-driven; well, then they will simply turn those theories into rules and laws, shape society as a digital machine, and force humans into the role of machine parts.

I would like to emphasize my use of the word believe above: those choices are all acts of faith. Not a shred of science or rationality. Rather, fear and distraction. Ignorance and presumption.

The explosion of the low-paid gig economy is a clear sign of the trend, particularly where humans work as mere accessories of machines instead of the opposite: for instance, labeling training sets for machine learning, or boosting hits, likes and reputations in click farms. In these cases, any sane man-machine hierarchy is turned upside down, giving us a glimpse of a new dystopia. Another grim hint is the dogmatic push for STEM as the only appropriate education for the new generations, and the parallel demotion of the humanities as vestiges of a past we must leave behind.

Should a problem be ‘solved’ or just transformed? Should we go for optimization or for satisficing? Should a human or social question be represented in the form of numbers or in the form of names and stories? Should it be assigned to a processing machine or to listening humans? All these questions are one: should we stay on the calculable or on the incalculable side of things?

Although the (political) answer to such a question has huge consequences, it still appears arbitrary at this point in history. It is the result of the prejudices, culture, and empathy of those who make the theories of society, of those who turn theories into politics, and of those who participate in politics on a grassroots level. It’s important now to take a stance, to increase the pressure on those in power so that the answer respects humanity in the long term. In my opinion, this necessity binds intellectuals to crucial new duties to the future, and here are the ones I feel are mine:

1) to divulge the neurobiology-informed ‘correct vision of man’ Antonio Damasio talks about in Looking for Spinoza, envisioning how (human) beings work for real instead of how ancient philosophers thought millennia ago,

2) to establish publicly what the limits of calculability are and how idiotically they are being transgressed in human and social matters,

3) to declare what the inviolable rights of the incalculable are towards autonomous computing,

4) to acknowledge what natural social abilities of ours must be fostered and educated like we never did before, starting from empathy.

The purpose is to develop more creative and collective ways to face the swelling global issues, and life in all its facets.

Stefano Diana is an Italian researcher and author with a background in computer science. His main interests are in the humanities, social phenomena and neurosciences. His day job consists of web-based productions and UI/UX design, creative direction, copywriting, graphics and teaching media and communication. Occasionally he makes music, translations, public art installations and video documentaries. His 1997 essay W.C.Net– Wrong Communications on the Net was recommended by Umberto Eco. In 2016 he published his book Noi siamo incalcolabili. La matematica e l'ultimo illusionismo del potere on which this INC Longform is loosely based.

Literature

Barrow, John D. The World Within the World, Oxford: Oxford University Press, 1990.

Barthes, Roland. ‘Myth today’, in Mythologies, London: Paladin, 1972.

Baron-Cohen, Simon. The Science of Evil: Empathy and the Origins of Cruelty, New York: Basic Books, 2011.

Boncinelli, Edoardo and Giorello, Giorgio. Lo Scimmione Intelligente, Milano: Rizzoli, 2012.

Damasio, Antonio. Descartes’ Error: Emotion, Reason, and the Human Brain, Penguin Random House, 2005.

Damasio, Antonio. Looking for Spinoza: Joy, Sorrow, and the Feeling Brain, London: William Heinemann, 2003.

Diana, Stefano. Noi siamo incalcolabili: La Matematica e l’Ultimo Illusionismo del Potere, Roma: Stampa Alternativa, 2016.

Eco, Umberto. Trattato di Semiotica Generale, Milano: Bompiani, 1975.

Falletta, Nicholas. The Paradoxicon, New York: John Wiley & Sons, 1990.

Gould, Stephen Jay. Full House, Cambridge (MA): Harvard University Press, 2011.

Hare, Robert. Without Conscience: The Disturbing World of the Psychopaths Among Us, New York: Guilford Press, 1999.

Israel, Giorgio. La Visione Matematica della Realtà: Introduzione ai Temi e alla Storia della Modellistica Matematica, Bari-Roma: Laterza, 1996.

Koyré, Alexandre. Études d’histoire de la pensée philosophique, Paris: Colin, 1962.

Lakoff, George. The Political Mind: A Cognitive Scientist's Guide to Your Brain and Its Politics, Penguin Random House, 2009.

Marx, Karl. Economic & Philosophic Manuscripts, 1844.

Morozov, Evgeny. To Save Everything, Click Here: The Folly of Technological Solutionism, New York: Public Affairs, 2013.

Rizzolatti, Giacomo and Sinigaglia, Corrado. Mirrors in the Brain: How Our Minds Share Actions, Emotions, and Experience, trans. Frances Anderson, Oxford: Oxford University Press, 2007.

Shladover, Steven. ‘The Truth about “Self-Driving” Cars’, Scientific American, 314, 52-57 (2016).

Wigner, Eugene. ‘The Unreasonable Effectiveness of Mathematics in the Natural Sciences’, Communications on Pure and Applied Mathematics, Vol. 13, No. 1 (February, 1960). New York: John Wiley & Sons.