By Nadine Rotem-Stibbe

Unpublish – ‘make content that has previously been published online unavailable to the public’. A stark reminder that this is easier said than done comes from the Oxford Dictionary’s own example sentences: ‘Once the images have been published on the internet it will be practically impossible for any court order to unpublish them.’ And: ‘After an outcry on Twitter, the magazine unpublished the column, but the editors at the blog Retraction Watch managed to find a cached version, reminding us all that the internet never forgets.’

Unpublishing belongs to the internet, but only paradoxically. There have always been ways to erase, overwrite, burn, destroy or censor content. But as the position of traditional publishers shifted and self-publishing and online publishing became ubiquitous, unpublishing has become one of the biggest conundrums of the digital era. What, in this overload of content creation, does it mean to unpublish? What limitations and contradictions does it create? Who has the power to unpublish? Is it even truly possible?

Unpublishing as Impossibility

Publishing is usually understood as an intentional act. On the internet, however, we unknowingly leave trails of digital data, which automatically disseminate into the network. Going online carries with it the risk of becoming a publisher, whether we are aware of it or not. The act of unpublishing is an attempt to take back control. We may actively look to remove specific content from the internet, to limit what others can know about us. But it’s not so easy to unpublish material once it is in the networked domain. Take a message on a Facebook wall: there, ‘unpublishing’ can only mean retracting it, never deleting it.

David Thorne, an Australian satirist, publishes his correspondence with people on his website. Thorne’s email thread with Jane illustrates the intrinsic problem of digital information. Thorne sends a digital drawing of a spider as payment, which Jane refuses. Thorne wants it back, leaving Jane confused: ‘You emailed the drawing to me. Do you want me to email it back to you?’

The dialogue continues, with Jane insisting that she has returned the original spider to Thorne: ‘I copied and pasted it from the email you sent me.’ But of course, there is no original spider in the way that there would be an original paper drawing. And with every new email in the chain, the spider is replicated again. Once created, it can only disseminate; the very idea of receiving it back is absurd. To try to retrieve it is to work against the force of nature, or worse, the forces of the internet.

The persistence of data and the power of tech companies can outmatch even national governments. When the British spy agency GCHQ forced the editors of The Guardian to destroy the hardware containing top-secret documents leaked by Edward Snowden, they used ‘angle-grinders, dremels – a drill with a revolving bit – and masks’, as described by correspondent Luke Harding. The agency even provided a ‘degausser’, a device that destroys magnetic fields and thereby erases data. Yet for all this effort and hi-tech equipment, the definitive destruction of the Snowden files had already been prevented by deliberate back-ups and distribution. Copies of the documents existed in several jurisdictions, and the editors of The Guardian confirmed they would continue to have access to them. The action did not stop the flow of intelligence-related stories. The destruction was at best a symbolic act of intimidation.

Even when content is successfully deleted, metadata often lives on. Metadata can be as telling as the content itself, since it allows interactions to be reconstructed in great detail, even when what was actually said remains unknown. Metadata can even record when, where, and by whom a specific piece of content was deleted. Archiving bulk telephone metadata in fact became one of the National Security Agency’s most useful tools, giving the agency access to the contacts, IP addresses, and call durations of the people it tracked.

Unpublishing is not the same as the wish to delete or destroy, although deletion or destruction may be the means to that end. As with publishing, the act of unpublishing is intentional. Its purpose is to limit access to information: to restrict it rather than open it to the public. But even if destroying the carrier is in principle possible, once knowledge has been made public, unpublishing it becomes much harder. Once data has spread through the network, almost nothing can stop it from spreading further. This is not new; the process can be traced back to the invention of the printing press or even further.

Unpublishing Across Platforms

If the purpose of unpublishing is to control content, then decisions about access to information answer to a set of criteria that only those in power get to define. A look at the guidelines of different tech companies shows precisely the problems inherent in this purpose. What is unpublished, at whose request, and how?

Facebook acknowledges that, even when a user hits the ‘delete permanently’ button, ‘copies of some material (example: log records) may remain in our database […]. Some of the things you do on Facebook aren’t stored in your account. For example, a friend may still have messages from you even after you delete your account.’ In a similar vein, Google’s terms of use state: ‘When you upload, submit, store, send or receive content to or through our Services, you give Google (and those we work with) a worldwide license to use, host, store, reproduce, modify, create derivative works (such as those resulting from translations, adaptations or other changes we make so that your content works better with our Services), communicate, publish, publicly perform, publicly display and distribute such content.’

Still, platforms take on an active role when it comes to unpublishing content. Facebook censors images and content relating to child pornography, pornography and depictions of violent death, such as beheadings. The guidelines are implemented by individuals employed as content moderators, most of whom are not even aware of who they’re working for. The company defends its broad and sweeping censorship rules under the guise of a moral compass. This is reminiscent of the Catholic Church’s index of banned books, in which those in positions of power decided not only what should be censored from public knowledge, but what should be upheld as a moral standard. As John Lanchester shows in ‘You Are the Product’, published in The London Review of Books, this leads to puzzling and seemingly senseless arbitrariness:

Facebook works hard at avoiding responsibility for the content on its site – except for sexual content, about which it is super-stringent. Nary a nipple on show. It’s a bizarre set of priorities, which only makes sense in an American context, where any whiff of explicit sexuality would immediately give the site a reputation for unwholesomeness. Photos of breastfeeding women are banned and rapidly get taken down. Lies and propaganda are fine.

Like Facebook, Microsoft legitimizes its censorship practices by invoking the seemingly all-encompassing bogeyman of child pornography. Yet these practices seem to serve purposes other than the ones proclaimed. ‘Nudity’, ‘obscenity’, ‘child pornography’ and similar categories are misleading labels for political agendas backed by platform power. Content is also censored because of its ideology. An example is the attempt to remove the video of Osama Bin Laden, as described by a moderator in an interview with Eva and Franco Mattes, an Italian artist duo who made an installation about internet content moderation:

Osama Bin Laden video removal was done as a PR move and a show of respect to the individuals who had been affected by events like 9/11. It was also done to show a patriotic symbolism that the United States has accomplished what we had set out to do and not only is this person dead, but there is no point continuing to talk about him, or even look at him, because his reign stops here. It was a social move to depict having the upper hand or the ultimate say.

Tech companies have started automating these censorship regimes, while content creation is increasingly automated as well. How does this align with publishing as an intentional act? If the creation, dissemination, and moderation of online publications become autonomous, and the audience no longer has to be human, the verb ‘to publish’ might lose its applicability. Unpublishing, in turn, becomes even harder. An example is the fake news phenomenon: the circulation of content and the speed at which it proliferates have become the most important factors and its very reason for being. Whether the content was created by a bot or a human is of little significance, as long as the shock factor is there to arouse the viewer’s passion, hatred, anger or satisfaction. With such sensational news, unpublishing is nowhere in sight.

Open Source Unpublishing

In the case of free and open-source software like MediaWiki, which was developed to run Wikipedia, the terms are laid out differently but no less strictly. The system is quite complex, which is reflected in its guidelines on restricting access to pages:

To prevent anyone but sysops (system operators) from viewing a page, it can simply be deleted. To prevent even sysops from viewing it, it can be removed more permanently with the Oversight extension. To completely destroy the text of the page, it can be manually removed from the database. In any case, the page cannot be edited while in this state, and for most purposes no longer exists.

While the user is offered the possibility to delete content, what in fact occurs is a change of access. Users classified as administrators (sysops) still have access to the content. At every level but the last, talk of deletion masks a technical workaround that doesn’t actually result in deletion at all. The problem is exacerbated because each layer requires more technical knowledge, which most users probably lack. Additionally, removing data affects the wiki community and may be disapproved of by others. The friction between the social and the technical elements of removing content makes even partial deletion a daunting task.

This approach is representative of the complexities of many digital systems and the ambiguities of the verb ‘to delete’. Another example of the intricacies of unpublishing is offered by Git, the developer tool that tracks changes through ‘commits’. Every time you commit a change, Git records it under a unique ID, keeping track of what was changed, when, and by whom. If you don’t want an edit to be tracked, the only reliable route is not to commit it. A last example is blockchain, a technology designed to offer a secure way to record transactions that cannot be altered or deleted because of decentralized consensus. In principle, any action taken using blockchain is additive: the chain can only carry on growing. Altering or deleting anything is the antithesis of this technology.
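The additive logic shared by Git and blockchain can be made concrete with a toy hash chain. Both build on the idea that each new entry carries a cryptographic hash of the entry before it, so that changing an earlier entry breaks the links that follow. The Python sketch below is a simplified illustration under that assumption only: the function names (`entry_hash`, `build_chain`, `verify`) are hypothetical, and it models neither Git’s actual object format nor any blockchain’s consensus or network.

```python
import hashlib
import json

GENESIS = "0" * 64  # hypothetical placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, data: str) -> str:
    """Hash an entry together with the hash of the entry before it."""
    payload = json.dumps({"prev": prev_hash, "data": data}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records):
    """Append records one by one; each link commits to everything before it."""
    chain = []
    prev = GENESIS
    for data in records:
        h = entry_hash(prev, data)
        chain.append({"prev": prev, "data": data, "hash": h})
        prev = h
    return chain


def verify(chain):
    """Return the index of the first broken link, or None if the chain is intact."""
    prev = GENESIS
    for i, entry in enumerate(chain):
        if entry["prev"] != prev or entry_hash(prev, entry["data"]) != entry["hash"]:
            return i
        prev = entry["hash"]
    return None


chain = build_chain(["first post", "second post", "third post"])
print(verify(chain))  # None: the chain is intact

# Try to "unpublish" the first post by overwriting it in place.
chain[0]["data"] = "[deleted]"
print(verify(chain))  # 0: the entry no longer matches its own hash

# Repairing that hash only moves the break to the next entry.
chain[0]["hash"] = entry_hash(chain[0]["prev"], chain[0]["data"])
print(verify(chain))  # 1: the second post still points at the old hash
```

In this toy model, erasing one record means rewriting every record after it, and any other copy of the chain would still hold the original.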

Digital Abstinence

An attempt to remove, hide, censor or delete a significant piece of content might in fact bring more attention to what you are trying to unpublish. The Streisand effect was named after Barbra Streisand, who sued an aerial photographer for invasion of privacy, an action which only brought more attention to the picture of her house. A gruesome example is the case of an American girl named Nikki, who was decapitated in a car accident. A couple of people working on the scene admitted to having taken and shared photos of it. The images circulated on the internet for pure shock value. When Nikki’s father understandably wanted to have these photos removed, he found there was no law to protect the memory of his daughter, no Right To Be Forgotten, a concept discussed and put into practice in the EU (and Argentina) since 2006. Despite the lack of such a law in the U.S., Nikki’s family managed to have the photos removed from two thousand websites. Of course, they are still easy to find online. And even though Google removes or delists some search results, the company displays a notice stating that content has been removed, which is a perfect blood trail for a determined bloodhound.

Unpublishing does not exist. We’re forced to practice caution when accessing, using, posting, reading, inevitably publishing anything on the internet. The only option left is not to publish, not to participate, and to stifle our own voices online, which in our current world infringes on our freedom IRL.

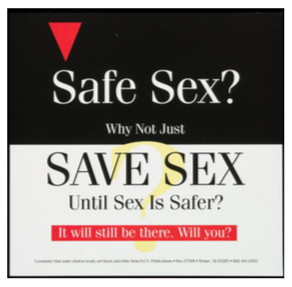

This brings back a vivid memory of a Sex Education class at secondary school. Every week the whole class was made to repeat out loud what became a saying: ‘The safest sex is no sex.’ There is not much room for interpretation. If you’re going to regret it, don’t do it in the first place. Digital Abstinence: the safest way to unpublish is not to publish.

—

This is an adaptation of a thesis written for the Experimental Publishing programme of the Piet Zwart Institute in Rotterdam.