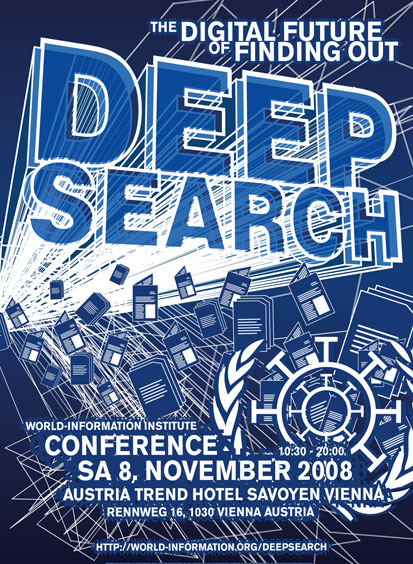

This Saturday, November 8, I had the pleasure of attending the well-organized World-Information Institute conference Deep Search: The Digital Future of Finding Out in Vienna, Austria. With Deep Search, conference editors Konrad Becker and Felix Stalder set out to address the social and cultural dimensions of search, as well as its information politics and societal implications. An impressive line-up of eight speakers, divided over the sessions ‘Search Engines and Civil Liberties’, ‘Search Engines and Power’ and ‘Making Things Visible’, promised to make it an information-dense and interesting day.

As this will be a rather full report, I will post it in two parts. Be sure to keep an eye on the conference website, as the organizers promise to make a full video archive of the conference speeches available soon.

Keynotes

Paul Duguid – The World According to Grep: Both Sides of the Search Revolution

After a timely start and a word of welcome, Konrad Becker introduced the first speaker of the event: Paul Duguid, former consultant at Xerox PARC (1989-2001) and author of The Social Life of Information (Harvard Business School Press, 2000). Currently, Duguid teaches History of Information and Quality of Information at the University of California, Berkeley.

Faced with the ambitious task of giving a broad historical overview of information in 45 minutes, Paul Duguid set off at a brisk pace. Taking Latour’s immutable mobiles as a theoretical base, his talk aimed to break with the idea of information as a constant notion throughout history. What was the world like when we searched through analogue means, and how was the quality of results defined?

Duguid emphasized the importance of storage to the nature of search. Throughout history we have seen selection criteria change with storage space and information carrier. The codex, eventually, gave us classification, indexes and swift distribution. The invention of the printing press gave us multiple, reliable copies. Across time, collections arise that are in fact selections, defined in an institutional and social context.

How does one judge quality? Is the original always better, do things get better over time, or are both positions true? This is the problem of the immutable mobile. Information does not speak for itself. Paper is often regarded as irrelevant to news, but it would be naive to ignore the way we have historically used paper to constrain and select our news. It is important to recognize the integrity of the material as the context of information: information is not free-standing or autonomous. We have always relied on institutions to guide us, and removing those constraints leads to uncertainty.

Furthermore, Duguid indicated, it is important to see where the power is being redistributed to. Google and the advertisers define our information. There is one traditional institution that we see gaining some sort of power: the university. Affiliation with a university, for Google as well as for the individual, adds value. In the search for authority, however, seeing old institutions made new again should be regarded as a problem rather than as progress.

Claire Lobet-Maris – From Trust to Tracks

Next up was Claire Lobet-Maris, professor at the Computer Science Institute of the University of Namur, and co-director of CITA (Cellule Interfacultaire de Technology Assessment).

Technology Assessment (TA) studies and evaluates new technologies, based on the premise that society has a right to scrutinize their development and investigate possible ethical implications. As Lobet-Maris explained, first-generation TA (1970s) was strongly fed by determinism and a sharp distinction between experts and the public. Second-generation TA came to regard technology predominantly as a social construct. Currently, Lobet-Maris claimed, a third generation of ‘militant and value oriented’ TA is emerging that aims to increase social responsibility, as well as to open up the political scripts through which technologies actively shape society.

The issues raised by search engines from a TA perspective center on democracy, autonomy and regulation. Looking at search engines and democracy, Lobet-Maris identifies issues such as an equal chance to exist on the web, the diversity and richness of public space as a public sphere, and the transparency of indexing and ranking metrics.

Regulation might take place in a number of ways. A market approach to regulating web operators is mentioned, although Lobet-Maris feels the distortion of information is too large a risk. Network regulation, where a trusted social network plays an intermediary role, is already common in the web sphere. However, the practice gives rise to socio-political questions and causes social fragmentation of the web sphere. The way to go in protecting democracy, Lobet-Maris argues, is state responsibility. As the Geneva declaration mentions, the web is a public global good. While the state has a responsibility to protect diversity and minorities, this responsibility is difficult to enforce. Lobet-Maris suggests a ‘labeling’ of search engines by transparency, the initiation of R&D projects based on democratic search metrics, and the stimulation of market competition.

From the perspective of autonomy, Lobet-Maris addresses contextualization and personalization of information. Silent and non-transparent profiling creates an ‘iron numerical cage’ around the user, making it difficult to shape and manage one’s numerical track across the web and the narrative that consequently unfolds.

As these issues are traditionally addressed by a legal framework, finding new paths is important. State regulation in this respect seems the least viable option, as strong liberalization and globalization frustrate the application of laws. Market regulation based on ‘informed consent’ also fails, as most Internet users do not read the terms of agreement. The third alternative Lobet-Maris sees is based on the technological empowerment of citizens: give people the technological capacity to manage and reset their profiles, and restore intellectual rights to their social identity.

Session 1: Search Engines and Civil Liberties

Gerald Reischl – Inside the Google Trap

Gerald Reischl is a Viennese journalist, writing for the technology section of the Austrian KURIER newspaper, and author of the book Die Google-Falle: Die Unkontrollierte Weltmacht Im Internet (Ueberreuter, 2008).

After presenting the audience with the latest statistics on Google’s workforce and its German/Austrian market share, Reischl went on to list the main arguments defended in Die Google-Falle. Reischl feels Google can hardly be called a search engine any longer but is, in fact, a dangerous corporation with ambitions to control the internet and discard our privacy. Its market position and ever expanding services are dangerous to an information society.

A long string of patents is indicative of both Google’s past and future ambitions. The PageRank algorithm is celebrating its 10-year anniversary, while new patents, such as the Programmable Search Engine, are being claimed.

A recent debate on Google Analytics has inspired German news weekly Der Spiegel to remove the software from Spiegel Online. With 80% of websites today running Google Analytics, it is completely unclear which party collects what kind of information about your presence on the web. Reischl illustrated this during his presentation by running a search for Spiegel Online on http://www.ontraxx.net, a service that detects Google Analytics on any given website. While Der Spiegel had indeed removed the software from its server, the ontraxx program showed that Google Analytics continued to run through third parties, such as dating sites that advertise on Spiegel Online.

Google’s investment in DNA search project 23andMe is another of Reischl’s worries. Obviously, health care is hardly core search engine business. The website asks you to send your saliva to the United States, after which it will make your complete DNA profile available online. All this can be done as we speak, for about 300 USD, within a time span of four to six weeks. The dangerous part, Reischl argues, is the way Google makes DNA testing seem ‘normal’. Soon enough, companies will want to check a person’s DNA profile prior to employment, or a DNA button will appear on MySpace profiles.

Reischl predicts that Google will not remain everybody’s darling for long. Recent discussions about Chrome might be indicative of a more critical trend among users, and proper education should help to further raise awareness.

Joris van Hoboken – Search Engines and Digital Civil Rights

Joris van Hoboken is a full-time PhD candidate at the Institute for Information Law at the University of Amsterdam. He has a background in the Dutch digital civil rights movement Bits of Freedom, which is part of European Digital Rights (EDRI), and he is currently a visiting researcher at the Berkman Center for Internet & Society at Harvard University.

Van Hoboken’s talk focused on the legal framework within which search engines have to act. First off, he indicated that he was somewhat more positive about Google’s attempts to acknowledge digital civil rights than Gerald Reischl was. Instead of focusing on Google’s power, it is important to review alternatives to the existing policies.

Van Hoboken first addressed the various kinds of pressure that are exerted on entities mediating Internet access, such as ISPs and search engines. In France, the three-strikes-out strategy makes the ISP the new gatekeeper. In putting a stop to the violation of intellectual property rights through file sharing, the government might seriously harm freedom of expression. Similar examples of ISPs deciding what Internet we get to see can be found in the US, where Verizon broadly blocked Usenet access this summer instead of blocking just the few offending groups.

Search is a focal point in content regulation as well. Pressure is exerted on search providers by institutions like the EU, often resulting in takedown requests and the removal of search results. When a national government orders filtering, the result is a geographical suppression of information: the material is hidden within the country but remains findable outside national borders. Self-regulated filtering by search engines takes place as well. Google.de, for instance, has been noted to filter certain right-wing extremist content entirely of its own accord.

In protecting the freedom of expression, Van Hoboken sees a role for politics. Democratic involvement is necessary, and debates should be taking place at this level. As freedom of expression often seems to be a ‘negative’ right, perceptions on the role of governments vary widely.

Van Hoboken then talked about the current regulatory involvement with search engines in the EU. It is hard to decide when exactly a search engine becomes liable for showing a link to illegal material, or when an ISP can be charged for enabling file sharing. Laws on so-called intermediary liability have been in effect at the EU level since 2000. In these laws, however, ISPs enjoy some sort of safe haven in order to be able to function at all. Search engines are not exempted from intermediary liability in the same way, which may give rise to chilling effects on freedom of expression.

The European Commission recently issued a Green Paper on Copyright in the Knowledge Economy, which implicitly states that search engines might in future need prior permission to index a site, instead of the opt-out robots.txt model that is currently applied. Obviously, publishers should have some means of control, but Van Hoboken feels this scenario could change the search engine landscape drastically.
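To make the opt-out model a little more concrete, here is a minimal sketch in Python of how a crawler might consult a site's robots file before indexing a page. It uses the standard library's `urllib.robotparser`; the function name `may_index`, the crawler name `ExampleBot`, the `example.org` URLs and the sample rules are all made up for illustration and were not part of the talk.

```python
from urllib.robotparser import RobotFileParser


def may_index(robots_txt: str, url: str, agent: str = "ExampleBot") -> bool:
    """Return True if the given robots rules allow `agent` to fetch `url`.

    `agent` is a hypothetical crawler name used only for this illustration.
    """
    parser = RobotFileParser()
    # Parse the rules from a string instead of fetching them over the network.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


# Hypothetical rules: everything is open except the /private/ section.
ROBOTS = """\
User-agent: *
Disallow: /private/
"""

print(may_index(ROBOTS, "https://example.org/articles/1"))
print(may_index(ROBOTS, "https://example.org/private/notes"))
# A site that publishes no rules at all is treated as indexable by default.
print(may_index("", "https://example.org/anything"))
```

The last call shows the crux of the current model: silence counts as permission, and it is up to the publisher to opt out. Under a prior-permission regime, that default would presumably be reversed.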

Concluding his argument, Van Hoboken states that search engines are a primary target of information suppression. We should ask ourselves whether this is the road we want to take. Legal policy discussions affect the access to information, which is why they should be followed closely. And finally, the way in which the government might positively influence freedom of expression and the access to information in future should not be left unexplored.