I don't understand the power of artificial intelligence. Or rather, I wonder why AI overshadows the power of interfaces. We live in a world obsessed with data processing, the resulting “intelligence” it produces and the damage it causes. This emphasis on artificial intelligence and algorithms,[1] in mainstream discourse as much as in research, has been accompanied by a parallel devaluation of interaction and interface design, neglecting their importance in the success of digital services that are praised for their algorithms.

Historically, interaction design has always been in competition with artificial intelligence, since, as we will see, the two are based on radically different objectives. However, design does not have (and has never had) the aura of artificial intelligence among the general public. It doesn't carry the same promises that have fueled the fantasies of so many authors, filmmakers and journalists. After two decades of rich developments in interaction design, driven in particular by the design of tactile interactions required for smartphones, the trend has reversed. The return to favour of AI has coincided over the past decade with the progressive standardization and impoverishment of interface design, which is, I argue, far from coincidental.

The rivalry between artificial intelligence and interface design is not new, nor is it the result of spectacular advances in neural networks and their democratization. It is much more fundamental because it stems from two very different ways of thinking about the relationship between computers and humans. This opposition goes back to the early years of computer science. Researcher Thierry Bardini finds traces of it in the thinking of Douglas Engelbart,[2] who pioneered the field of human-computer interaction (HCI) in the 1960s. He is best known for having, with his team, invented the mouse and designed the “mother of all demos”, which demonstrated numerous avant-garde uses of the computer, such as collaborative document editing. For Engelbart, although his efforts and those of AI researchers share similar short-term aims, their long-term objectives differ radically. For him, the challenge is to think about and develop the ability of humans to take control of and guide computers, with the ultimate aim of increasing human potential and capabilities. Following Engelbart, many proponents of this vision of HCI have worked to turn the computer into a “bicycle for the mind”[3] or a “meta-medium”[4], through the idea of decentralized, personal computers.

Conversely, for AI advocates at the time, the whole point was to ensure that computers became intelligent enough to be autonomous so that humans could outsource tasks to them[5].

HCI historian Grudin explains that “at the time, artificial intelligence researchers believed that machines would soon be autonomous and intelligent, on an equal footing with humans.”[6]

If we follow this vision, the ideal computer is an assistant who is as discreet and diligent as possible, a vision that is perfectly embodied today in voice assistants,[7] whose ultimate aim is to anticipate the slightest needs and desires, like a butler[8] or maid.[9] To put it another way, paraphrasing Bardini, AI researchers seek to increase the intelligence of the computer, and Engelbart that of the user.[10] Today, behind the concept of AI we find a whole range of technologies based on machine learning models, with very diverse applications. But beyond their differences, they all have in common the original desire for autonomous intelligence. A contemporary translation of this vision is the cliché of the smart fridge that learns from our habits and is capable of ordering the food we need, even before we realise we're missing it. The goal of many designers, like Greg Brockman, co-founder of OpenAI, when he talks about the future of ChatGPT, is therefore to understand and anticipate your intentions, even if you haven't explicitly expressed them.[11] Welcome to the ultimate personalized experience, one that makes your life easier by solving it in advance. So, even if the promoters of AI don't say it in these terms, their vision of the future, what they are aiming for, is the death of interaction design, because wanting to anticipate everything ultimately means automating everything, eliminating all interaction. In his presentation, Greg Brockman is careful to point out that traditional interfaces are not going to disappear, but the role he leaves them is that of control and correction, when the model has not sufficiently “understood your intention”, and one can easily understand that the aim will be to minimize these moments as much as possible.

The contemporary avatars of this vision are legion: from connected thermostats with their intelligent temperatures to the pre-selection of candidates’ CVs and the suggestion of content in your social network feeds. One of the recurring arguments in marketing speeches is that it will save you time, so that you can finally concentrate on what really matters, freeing your life from thankless, tedious tasks.[12] But where do you draw the line between unglamorous tasks and others? This approach sees people as mere beings with passive needs that need to be met. French philosopher Pierre Cassou-Noguès talks about the “benevolence of machines”, i.e. their propensity to monitor us “for our own good”.[13] AI mediates our perceptions and orients our choices which, because they seem to come from us through our data, are insidiously subjugating. Far more so, perhaps, than the dystopian visions we see in science fiction of robots seeking to forcefully impose decisions on us.

The opposition between artificial intelligence and interaction design is also reflected in their relationship to learning. Engelbart differentiated himself from AI advocates by the importance he placed on human adaptation. “When interactive computing in the early 1970s was starting to get popular, and they [researchers from the AI community] start writing proposals [...], they said: well, what we assume is that the computer ought to adapt to the human [...] and not require the human to change or learn anything. And that was just so antithetical to me”.[14] Ironically, on his X feed, Greg Brockman raves about the exhilarating feeling of mastery that comes from the effort invested in learning programming and the tedious work of debugging,[15] which is in complete opposition to his objective, through ChatGPT, which is precisely to enable human beings to offload all tedious work by becoming “managers” or “supervisors” of an artificial intelligence that would do the work.[16]

On the contrary, in Human-Computer Interaction, thinking is aimed at augmenting human intellect. Interaction techniques are designed to promote rapid, incremental manipulation of digital objects, enabling immediate visualization of results and thus active experimentation with the machine's possibilities. Unsurprisingly, this approach is to be found mainly in digital design and modelling tools, such as the concept of direct manipulation,[17] i.e. manipulating objects continuously on the screen, which was a cornerstone of the interface development that fueled the microcomputer revolution.

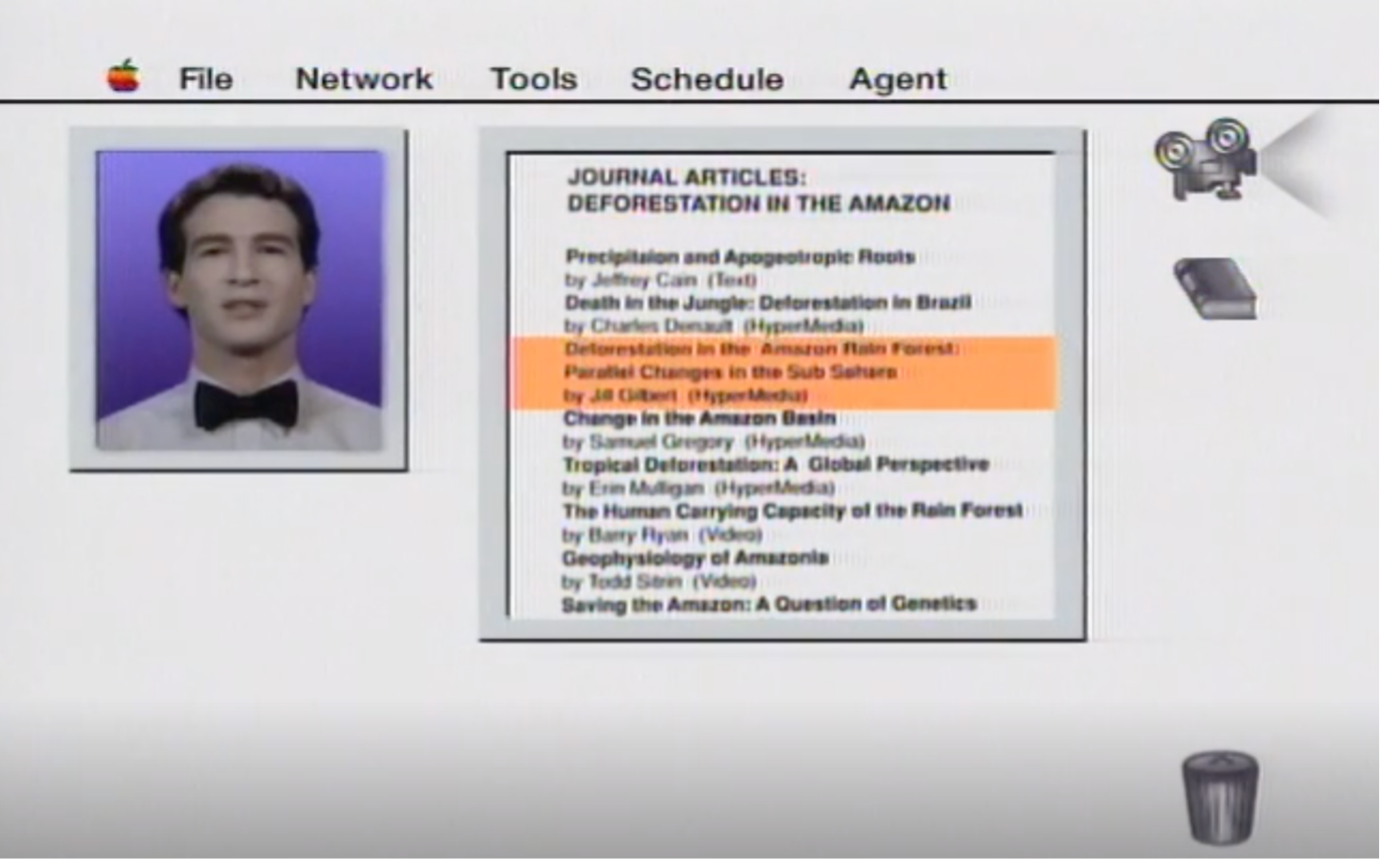

From this point of view too, positions have changed little, and the same criticism is addressed to today's algorithmic systems. Many observers have argued that AI is based on a fundamental denial of the human capacity to evolve and learn. This is reflected in the fact that contemporary, connectionist AI operates based on immense databases that have already been built up. “Algorithms that claim to be predictive are not predictive because they have managed to penetrate people's subjectivity to fathom their desires or aspirations. They are predictive because they constantly assume that our future will be a reproduction of our past”.[18] It is the famous: you liked this, so you will probably like that. An approach widely criticized for locking people in and denying them their ability to evolve and renew themselves.

Because they are so antithetical, the research fields of Artificial Intelligence (AI) and Human-Computer Interaction have always been in competition. More precisely, we could say that HCI has developed in the shadow of AI: “HCI flourishing in AI winters and moving more slowly when AI was in favour.”[19] Grudin speaks of AI winters to designate the decades when this field of research languished. Historically, AI has distinguished itself by its ability to promise wonders, attracting huge amounts of funding in the 1960s and 1980s. But it has also been known for its propensity to break promises, leading in turn to a freeze in the funding allocated to it, which was redirected to a certain extent to HCI, which has amply demonstrated its potential in democratizing computing in offices and homes. It was during these periods of AI disenchantment that interaction was seen as a more powerful paradigm for thinking about computing.[20]

Why is AI so attractive? And why is it only when its limitations are so apparent that HCI manages to capture some attention? The causes are complex, and have much to do with political and economic issues, as modern AI techniques are based on the lucrative business model of data mining and monetization. Another issue is the sharp separation, and lack of connection, between the artificial intelligence and interface professions in companies. However, the role of the imaginary cannot be overlooked. In fact, it's difficult for design to make people dream the way AI can. Creating intelligent, autonomous entities on the one hand, optimizing and facilitating the use of a complex tool on the other - there's no contest. “Research into perceptual-motor and cognitive challenges of GUIs on the one side, exotic machines and glamorous promises of AI on the other. GUIs were cool, but AI dominated funding and media attention and prospered.”[21]

Interfaces are never an end in themselves, but merely a means to enable humans to get things done. As tools, they seem harmless. All the more so since the interface is based on a paradox: it goes unnoticed even though it is right in front of us and we experience it every day - a paradox well known to graphic designers: “For by making things visible, graphic design makes itself invisible. It systematically diverts our attention to something else. I don't see a logo, I see an institution. I don't see a poster, I see a political figure”.[22] In the case of an interface, I'm not typing characters into a text field, I'm talking to an artificial intelligence. AI, on the other hand, can draw on the rich imaginaries conveyed by the ever-vibrant fantasy of its alter-ego, Artificial General Intelligence, in popular culture, and in so many science-fiction films and novels. This confusion between strong and narrow AI maintains the public's interest in it, through the many press articles dealing with the metaphysical questions posed by an AI that would come to surpass humans.

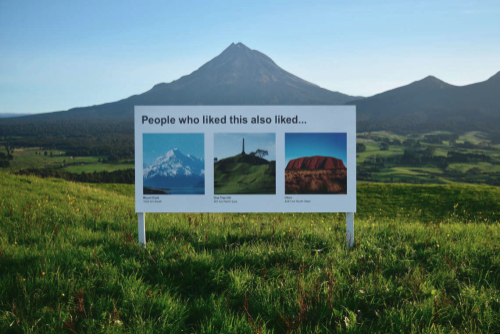

This potential danger is maintained by the fact that AI is perceived as an inaccessible and unfathomable black box, which contributes to its myth. AI lends itself to these narratives because there is a naturalization of its actions: it is perceived as more objective and therefore more reliable, powerful and dangerous because it is based on an accumulation of data. Actions and wills made explicit through our interactions are perceived as subjective, while data recorded via sensors and without our knowledge appear as more objective and therefore true, a largely erroneous presupposition that persists.[23] AI tends to favour interactions based on signals that are not explicit or entirely voluntary on the part of users: the gaze rather than the hand, facial expressions rather than words.

On the French Wikipedia page dedicated to “smart thermostats” we read that “in order to mitigate the problems of human error associated with programmable thermostats, the smart thermostat uses a sensor that determines occupancy patterns to automatically modify the temperature according to the occupants' habits and behaviors”.[24] This example perfectly illustrates the mistrust of human actions in contrast to the full confidence placed in AI. Much more energy is spent on trying to automate a task than on making it easier for the user to set settings right.

We're living through a new summer of AI, saturated with new promises as glittering as ever, but this time fueled by a number of impressive consumer applications, making us forget the failures of the past and the limitations of the present. Beyond the AI-centric critiques that have amply demonstrated the extent of the contemporary problems posed by these technologies, what interests me here is re-embedding AI in the interfaces that mediate it. Although the new AI springtime seems to relegate design and HCI to the back burner as old-fashioned approaches doomed to disappear, I argue, on the contrary, that AI still needs design, interfaces and interactions, even if it pretends to ignore them.

These days AI ignores design, as if algorithms and interfaces belong to completely separate worlds. AI is generally not materialized and remains hidden behind the interface. It seems impossible to interact directly with predictive or recommendation systems, i.e. through buttons or gestures designed solely for this purpose and presented as such. Yet interfaces are the key to AI's existence. What's more, the condition of its power and effectiveness also depends on the interface that houses it: the best video recommendation algorithm is worthless if its suggestions don't take center stage in the interface. What makes YouTube's recommendations so effective may be their relevance, but it's also, and perhaps above all, their display in the front line, directly below or after the video, drawing the eye with their thumbnails like so many mini-billboards. There is nothing clever about that, and we might even assume that these thumbnails would certainly be just as effective if there were no personalization and it was a matter of simple human editorialization.

Advertising and its ability to capture attention didn't need algorithms to be terribly effective throughout the twentieth century.[25] In the case of TikTok's algorithm, which is also often mentioned, addiction certainly comes as much from the strength of recommendations as from interaction choices: the split-screen feature, which favours mimetic logic and encourages participation,[26] or the hypnotic effect of scrolling for perpetual zapping, which extends and intensifies what TV was able to offer, also without an algorithm. What is perceived as the power of the algorithm is in fact often as much a matter of interface design choices that highlight the algorithm as of the algorithm itself.

But beyond this lack of recognition of the power of interface and interaction choices, AI could not exist or function without interfaces, even if it generally pretends to ignore them. AI is always mediated by interfaces, at different levels. The first level is data collection, which is often necessary to build up the corpus of data that will enable models to be trained and updated. However, it seems impossible for users to provide data directly to the AI, due to the strict decoupling that exists with the interface. In many cases, therefore, AI designers will take pre-existing functionality and use it as a proxy to draw inferences, i.e. interpret actions that were not designed to interact with the model. A “like” will be interpreted as “I want more of this type of content.”

Liking something doesn't mean you want more of it. Think of all the other reasons why you might have put a like: to let the person know that you've seen their post or to save content that's now needed for one-off use. Examples of this type are legion: the fact that you stopped viewing in the middle of the first episode will be interpreted as a sign that you don't like this series or this type of series. But perhaps you had just received an important call that made you stop watching. Using and interpreting signals that have not been designed for this purpose leads us to associate several meanings with a single action. And as users, we are often acutely aware of this. In turn, we start to adapt our own actions in the blind hope of communicating what we want to the algorithm. A few years ago, I investigated the different ways in which people try to interact with curation algorithms on a day-to-day basis.[27] Many people told me how they had adapted their way of doing things to try and “talk to the algorithm”. For example, by liking all of a person's posts on Instagram, not because you want to tell that person that you like their posts, but to let the algorithm know that you want it to continue showing you all that person's posts.

Of course, all these actions also rely on interpretations, as we're generally reduced to trying to guess what signals the algorithm is using and how they are being interpreted. But rather than this little game where algorithms and users blindly attempt to dialogue through an interface that is not designed for it, why not allow people to communicate directly with the algorithm? This would require us to question the deeply-rooted belief that the capture of vast amounts of data and the power of machine learning would enable us to know better than people what they really want. “I want to tell the algorithm what I want and then be able to tweak those preferences, not have it learn what it thinks I want from what I do.”[28] This sterile dissociation between interface and algorithm is, I believe, the result of the blind spot which holds that any explicit interaction with AI is a failure of prediction. Recently, however, these criticisms have become more visible, and we are beginning to see alternative models emerge that finally allow direct parameterization of the algorithm. A recent example is X's competitor, Bluesky, which offers a choice of curation algorithms and the possibility of creating your own.

AI also needs design and interfaces to materialize its action and the results of its production. However, the fact that the logic of the interface is generally disconnected from that of the algorithm poses a problem here too, as it doesn't help to make it readable. Neural networks operate on a probabilistic basis, but interfaces are not designed to represent this. For example, recognition algorithms (for faces, gender, etc.) produce a ranking, generally accompanied by a confidence score.[29]

However, consumer interfaces very rarely display this, suggesting that the judgment is categorical and definitive. In this case, the interface could provide a more accurate representation of how the AI works. Johanna Drucker, for example, suggests representing data uncertainty in visualizations to show the degree of validity of results.[30] The problem also arises in the opposite direction: again because of the disconnect between design and AI, interfaces are considered fixed and become real constraints, dictating in advance the format that the algorithm must respect to display its results. Since they are often based on simple lists or grids, layouts that have become the de facto lazy interface standards, the algorithm can only play with a single parameter that determines the order in which the elements appear. We could well imagine more complex interfaces that allow the algorithm to play with several complementary dimensions. But this would require real collaboration between AI and interface designers.
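To make the point about confidence scores concrete, here is a minimal sketch in Python of what a less categorical display could look like. It is an illustration only and not a description of any existing product: the function name `describe_prediction`, the thresholds and the wording of the qualifiers are all assumptions chosen for the example.

```python
def describe_prediction(scores, certain_above=0.9, likely_above=0.6):
    """Turn a model's class scores into a hedged, human-readable label.

    `scores` maps each candidate label to a confidence between 0 and 1.
    The thresholds are arbitrary illustrative values, not a standard.
    """
    # Rank the candidate labels from most to least confident.
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    top_label, top_score = ranked[0]

    # Pick a qualifier that reflects how sure the model claims to be.
    if top_score >= certain_above:
        qualifier = "very likely"
    elif top_score >= likely_above:
        qualifier = "probably"
    else:
        qualifier = "possibly"

    text = f"{qualifier} {top_label} ({top_score:.0%})"

    # When the model is not near-certain, also show the runner-up
    # instead of pretending the judgment is definitive.
    if top_score < certain_above and len(ranked) > 1:
        runner_label, runner_score = ranked[1]
        text += f", or {runner_label} ({runner_score:.0%})"
    return text


print(describe_prediction({"cat": 0.55, "dog": 0.40, "fox": 0.05}))
print(describe_prediction({"cat": 0.97, "dog": 0.03}))
```

The design choice here is simply to let the interface carry the uncertainty that the model already produces, rather than collapsing it into a single verdict.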

Another area where such collaboration could be beneficial is that of generative AI. Today, most generative tools are based on a dialogical interface where the user interacts with the model through textual prompts. This interaction modality has certainly contributed to their success, as it tends to anthropomorphize the model, giving it the appearance of a sentient human speaker. And although dialogue is an interaction modality in its own right, this type of interface seems transparent and remains generally unexamined, as it is presented as more natural and intuitive than traditional graphical interfaces.[31]

As Eryk Salvaggio rightly explains:

The interface is a handy way of juxtaposing the required action — using text to trigger a search across the noisy pixelated debris of a corrupted jpeg — with a more human-friendly story. The chatbot is stage dressing meant to obscure the operations that go on behind the scenes. It tells a story: that we are making a request to a powerful machine, which then creates whatever we ask. One can imagine a whole range of clumsier, if more technically accurate, forms of interface. For example, you might be shown a noisy jpeg and asked to describe what the image might have been. But that would have felt less like artificial intelligence, and more like an extremely inaccurate image repair tool — which is, if we are speaking very literally, what a diffusion model is.[32]

A real missed opportunity for designer Amelia Wattenberger, who rails against the poverty of these interfaces: “Compare that to looking at a typical chat interface. The only clue we receive is that we should type characters into the textbox. The interface looks the same as a Google search box, a login form, and a credit card field. Of course, users can learn over time what prompts work well and which don't, but the burden to learn what works still lies with every single user. When it could instead be baked into the interface.”[33]

Fortunately, many designers like her have begun to explore how interaction techniques derived from graphical user interfaces, sometimes as basic as selection and drag & drop, can enrich the way we explore and work with these models.[34] But this means abandoning the dream of an autonomous AI and assuming the importance of its interface.

The media and public interest in AI has also attracted a great deal of critical scrutiny and analysis, which regularly highlight its limitations.[35] Faced with these observations, however, the response of AI proponents is most often to attempt to correct and improve the model itself, its infamous biases, without questioning the interfaces through which it exists and acts. Improvement therefore generally consists in making the algorithm more complex, so that it considers and integrates elements that were previously ignored or left out, which almost inevitably translates into new data to be collected and interpreted. In this way, we multiply the number of inputs and inferences in what looks like a veritable headlong rush. And a necessarily endless one, since it will never be possible to produce perfect anticipations.[36]

Let's go back to the case of recommendation algorithms, which have been heavily criticized for their tendency to lock people into what they already know and consume. The proposed response is to try and find the right mix, e.g. 60% already known content and 40% new discoveries. This mix is necessarily arbitrary and left to the sole discretion of the AI designers, sidelining the user. But if we were to try to solve this problem through design, a simplistic answer would be an interface that offers both options. This would make all configurations possible, forcing us to ask the question: do I want more of what I've already listened to, or do I want to open up? Interaction design then encourages reflexivity, but requires attention and choice, which is precisely what AI seeks to avoid, and what we are often all too happy to escape.
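As a rough illustration of the difference, the sketch below in Python exposes the mix as a parameter that an interface control, such as a slider, could set, instead of a constant fixed by the designers. The function name `blend_feed`, its parameters and the sample data are hypothetical and do not describe how any real service works.

```python
def blend_feed(familiar, discoveries, familiar_share, size):
    """Combine two ranked lists according to a share chosen by the user.

    `familiar_share` is the fraction (0 to 1) of the feed that should come
    from already known content; the remainder comes from new discoveries.
    """
    if not 0.0 <= familiar_share <= 1.0:
        raise ValueError("familiar_share must be between 0 and 1")

    # How many items to take from each list, limited by what is available.
    n_familiar = min(round(size * familiar_share), len(familiar))
    n_new = min(size - n_familiar, len(discoveries))

    # Familiar items first, then discoveries; a real interface could
    # just as well interleave them or show them in separate areas.
    return familiar[:n_familiar] + discoveries[:n_new]


known = ["k1", "k2", "k3", "k4", "k5"]
new = ["n1", "n2", "n3", "n4", "n5"]

# The 60/40 mix from the text is now only one possible setting among others.
print(blend_feed(known, new, familiar_share=0.6, size=5))
print(blend_feed(known, new, familiar_share=0.0, size=4))
```

Nothing in this sketch is clever; the only change is who gets to choose the value.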

And yet, interface design, which joins and adopts the original ethos of AI, has found itself caught up in this logic, which seeks to avoid any action or reflection on the part of the user.[37] Worse still, the development of algorithms has gone hand in hand with the standardization and progressive impoverishment of the interactions and interfaces that support them. The current narrative and financial investment in AI is therefore to the detriment of interfaces and interactions, whose potential and possible improvements are no longer perceived. In doing so, interaction design works against itself, since it limits the ability to act. The result is impersonal, incapacitating computing, a trend well described by Silvio Lorusso in his article The User Condition[38].

When it comes to curating information, do we have any interfaces other than those that present us with lists or grids of elements? Are we left with interactions other than those of the click and swipe? There's an obvious and little-questioned paradox between the ultimate personalization promised by artificial intelligence and the universal homogenization of interfaces that has been imposed in recent years. Each feed is unique because an algorithm determines its content. And yet, the one billion users of Instagram or TikTok, no matter where they are or why they are using the application, all have the same interface in front of them, and all use exactly the same interactions to operate it. It is ironic to note that where these companies claim to offer a personalized experience, never before have we seen such homogeneity in interfaces: the whole world endlessly scrolls through simple threads of content. Design rallied to the fight for the least interaction accentuates this logic, progressively obliterating or relegating to the background the settings that often enabled the software to be adapted to individual needs.

What we have lost in terms of explicit adaptation of our interfaces has been replaced by automated adaptation, as algorithms are now charged with compensating for this standardization and bringing to life the dream of a customized, personalized experience. Since all interfaces and interactions are alike, differentiation is also now the responsibility of the algorithm, denying design the possibility of being a vector of experimentation and a creator of value, including economic value. So, today, to solve problems observed when using an application, the first reflex will often be to add an AI. For example, by integrating a chatbot into a site in the hope that it will direct visitors to the information they are looking for, rather than rethinking the hierarchy of information, the site's tree structure and its navigation.

Fortunately, some researchers and designers are attempting to articulate these two visions and to think of AI through the prism of interaction design, as a means of augmentation rather than automation. One example is some of the research work coming out of the interactive machine learning paradigm, aiming to enable a wider audience to participate in the design or interactive use of models.[39] Another approach is to think of AI as a material for designers, which should be understood and apprehended in order to shape and integrate it into interfaces. But it's hard not to follow its logic. For the rich abundance of these approaches is based on interventions around AI, putting design at its service rather than the other way around. An alternative, more radical response is to think in terms of interface customization without algorithms. Faced with YouTube, Peertube is trying to go against the tide and promote ways of navigating that don't rely on algorithms, but on simple, legible and diverse parameters that give pride of place to human editorialization. Admittedly, this may seem less high-tech, but it is far more legible.

All these proposals are difficult to implement in most existing interfaces, given the separation that exists between the teams that deal with design and those that deal with algorithms, which are themselves generally dispersed.[40] The use of pre-trained models on the AI side, and the reliance on libraries of standard, pre-formatted components (design systems) on the design side, reduces the scope for interaction between the two. Similarly, while design work is often linked to and constrained by the device for which it is intended, AI requires a very heavy technical apparatus that calls for the help of servers and phenomenal computing power. It looks as if we are somehow returning to mainframe computing, those powerful but controlled computers, an architecture that in essence limits the power of action of its users.

From a design point of view, if we want to prevent AI from completely imposing its will to automate, designers must first and foremost rediscover and reaffirm the importance and potential power of interfaces and interactions, including bringing AI to life. With my colleagues, we have seen how they are already sometimes trying to escape its grip by using certain weapons specific to design, notably through interface work, but also by acting as spokespeople for users for whom adding AI is rarely a solution.[41] I believe that this will also necessarily involve a reaffirmation of the objective of HCI, left behind by that of AI, to put the power of the computer in the hands of users, and to increase human potential rather than that of the machine. Looking forward to next winter…

Nolwenn Maudet is an associate professor of interaction design at the University of Strasbourg, France. She investigates the numerous interactions between the design field and our digital environment.

--

Reference list

Allard, Laurence. “D’une boucle l’autre, TikTok et l’algo-ritournelle : performer entre rage et ennui en temps de pandémie”, in Quand le téléphone connecté se fait des films, 2021. Available at: https://recherche.esad-pyrenees.fr/phonecinema/

Bardini, Thierry. Bootstrapping: Douglas Engelbart, coevolution, and the origins of personal computing, Stanford, Calif: Stanford University Press, 2000.

Cardon, Dominique. À quoi rêvent les algorithmes ? Nos vies à l’heure du big data, Paris, Seuil, 2015.

Bäuerle, Alex, Ángel Alexander Cabrera, Fred Hohman, Megan Maher, David Koski, Xavier Suau, Titus Barik, and Dominik Moritz. “Symphony: Composing Interactive Interfaces for Machine Learning”, in Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems, 1‑14. CHI ’22. New York, NY, USA: Association for Computing Machinery, 2022. https://doi.org/10.1145/3491102.3502102.

Cassou-Noguès, Pierre. La bienveillance des machines: comment le numérique nous transforme à notre insu, Seuil, 2022.

Crawford, Kate. Atlas of AI: Power, Politics, and the Planetary Costs of Artificial Intelligence, New Haven London: Yale University Press, 2021.

Drucker, Johanna. “Humanities approaches to graphical display”, in Digital Humanities Quarterly, 2011, vol. 5, no 1.

Feinberg, Melanie. Everyday adventures with unruly data, Cambridge, Massachusetts : The MIT Press, 2022.

Françoise, Jules, Baptiste Caramiaux, and Téo Sanchez, “Marcelle: Composing Interactive Machine Learning Workflows and Interfaces“, in The 34th Annual ACM Symposium on User Interface Software and Technology, 39‑53. UIST ’21, New York, NY, USA: Association for Computing Machinery, 2021. https://doi.org/10.1145/3472749.3474734.

Goldberg, Adele and Alan Kay. “Personal Dynamic Media”, in Computer 10(3):31–41. March, 1977.

Grudin, Jonathan. “AI and HCI: Two Fields Divided by a Common Focus”, in AI Magazine 30 (4): 48‑48, 2009. https://doi.org/10.1609/aimag.v30i4.2271.

Hwang, Tim. Subprime Attention Crisis: Advertising and the Time Bomb at the Heart of the Internet, First edition, 2020.

Koefoed Hansen, Lone and Peter Dalsgaard. “Note to self: stop calling interfaces ‘natural’”, in Proceedings of The Fifth Decennial Aarhus Conference on Critical Alternatives (CA ’15), 2015.

Krug, Steve. Don't make me think, New Riders, 2000.

Lorusso, Silvio. The User Condition: Computer Agency and Behavior, 2021. Available at: https://theusercondition.computer/

Maudet, Nolwenn. “Dead Angles of Personalization: Integrating Curation Algorithms in the Fabric of Design”, In Proceedings of the 2019 on Designing Interactive Systems Conference, ACM, 2019.

Pan, Xingang, Ayush Tewari, Thomas Leimkühler, Lingjie Liu, Abhimitra Meka, and Christian Theobalt. “Drag Your GAN: Interactive Point-based Manipulation on the Generative Image Manifold”, in ACM SIGGRAPH 2023 (SIGGRAPH ’23), ACM, 2023.

Phan, Thao. “Amazon Echo and the aesthetics of whiteness”, in Catalyst: Feminism, Theory, Technoscience, 2019, vol. 5, no 1, p. 1-38.

Philizot, Vivien. “Forma, media, modes d’existence du design graphique”, conference held during the Format(s) festival, 6/10/22, HEAR Auditorium, Strasbourg, France.

Salvaggio, Eryk. “Interface as Stage, AI as Theater”, cyberneticforests.substack.com, 2023.

Sanchez, Téo, Baptiste Caramiaux, Pierre Thiel, and Wendy E. Mackay. “Deep Learning Uncertainty in Machine Teaching”, in 27th International Conference on Intelligent User Interfaces (IUI ’22), ACM, New York, NY, USA, 2022, 173–190. https://doi.org/10.1145/3490099.3511117

Shneiderman, Ben. “Direct manipulation: A step beyond programming languages”, in Computer, 1983, vol. 16, no 08, p.57-69.

Wattenberger, Amelia. “Why Chatbots Are Not the Future”, wattenberger.com, 2023. Available at: https://wattenberger.com/thoughts/boo-chatbots

Wegner, Peter. “Why interaction is more powerful than algorithms”, in Communications of the ACM, 1997, p.80‑91.

Widder, David Gray and Dawn Nafus. “Dislocated Accountabilities in the AI Supply Chain: Modularity and Developers’ Notions of Responsibility”, 2022. Available at: https://doi.org/10.48550/ARXIV.2209.09780.

Notes

[1] Here I will use both terms, depending on the examples. However, the term “artificial intelligence” is itself problematic because it appears monolithic and conceals the diversity of the techniques it encompasses. See Pauline Gourlet for more details (in French): https://www.youtube.com/watch?v=t08XG-Wnqaw.

[2] Thierry Bardini, Bootstrapping: Douglas Engelbart, coevolution, and the origins of personal computing, Stanford, Calif: Stanford University Press, 2000.

[3] Steve Jobs, “What a computer is to me is it's the most remarkable tool that we've ever come up with, and it's the equivalent of a bicycle for our minds”, in the movie Memory & Imagination: New Pathways to the Library of Congress in 1990.

[4] The notion of the “metamedium” was coined by Adele Goldberg and Alan Kay in the article “Personal Dynamic Media”, in Computer 10(3):31–41. March, 1977.

[5] Jonathan Grudin, “AI and HCI: Two Fields Divided by a Common Focus”, in AI Magazine 30 (4): 48‑48, 2009. https://doi.org/10.1609/aimag.v30i4.2271.

[6] ibid.

[7] The motto of Google Assistant is “at your service, wherever you are”, https://assistant.google.com/intl/fr_fr/, accessed 30 September 2023.

[8] See for example the Apple Knowledge Navigator research project created in 1987: https://www.youtube.com/watch?v=umJsITGzXd0.

[9] Thao Phan, “Amazon Echo and the aesthetics of whiteness”, in Catalyst: Feminism, Theory, Technoscience, 2019, vol. 5, no 1, p. 1-38.

[10] Bardini, op. cit., p. 29.

[11] “The Inside Story of ChatGPT’s Astonishing Potential”, TED Talk by Greg Brockman, April 2023. Available at: https://www.youtube.com/watch?v=C_78DM8fG6E.

[12] One example among many others: the company Impress.ai states online: “Screen candidates efficiently. Our recruitment automation platform screens candidates to identify top talent. Recruiter's time is not wasted on unqualified applicants, enabling them to focus on the most-suited talent to ensure fast, accurate hiring”, https://impress.ai/hr/automated-resume-screening-software/, accessed 30 August 2024.

[13] Pierre Cassou-Noguès, La bienveillance des machines: comment le numérique nous transforme à notre insu, Seuil, 2022.

[14] Douglas Engelbart, in Bardini, op. cit., p. 28.

[15] For example, @gdb, “Found a bug that I've been working on all week. Required leveling up conceptual understanding of a particular area of the stack, building new observability tooling, and running many iterative experiments to isolate the issue. Incredible feeling now that it's fixed.”, X post, 27 August 2023, https://x.com/gdb/status/1695761278604812689.

[16] Greg Brockman, op. cit.

[17] Ben Shneiderman, “Direct manipulation: A step beyond programming languages”, in Computer, 1983, vol. 16, no 08, p.57-69.

[18] Dominique Cardon, À quoi rêvent les algorithmes ? Nos vies à l’heure du big data, Paris, Seuil, 2015, p.70.

[19] Grudin, op. cit.

[20] Peter Wegner, “Why interaction is more powerful than algorithms”, in Communications of the ACM, 1997, p.80‑91.

[21] Grudin, op. cit.

[22] Vivien Philizot, “Forma, media, modes d’existence du design graphique”, conference held during the Format(s) festival, 6/10/22, HEAR Auditorium, Strasbourg, France.

[23] Melanie Feinberg, Everyday Adventures with Unruly Data, Cambridge, Massachusetts: The MIT Press, 2022.

[24] https://fr.wikipedia.org/wiki/Thermostat_intelligent, accessed 22 September 2024.

[25] Tim Hwang, Subprime Attention Crisis: Advertising and the Time Bomb at the Heart of the Internet, First edition, 2020.

[26] Laurence Allard, “D’une boucle l’autre, TikTok et l’algo-ritournelle : performer entre rage et ennui en temps de pandémie”, in Quand le téléphone connecté se fait des films, 2021. Available at: https://recherche.esad-pyrenees.fr/phonecinema/.

[27] Nolwenn Maudet, “Dead Angles of Personalization: Integrating Curation Algorithms in the Fabric of Design”, in Proceedings of the 2019 on Designing Interactive Systems Conference, ACM, 2019.

[28] Ezra Klein on Threads, 8 July 2023. Online: https://www.threads.net/t/CuagQ2wvhuE/?igshid=MzRlODBiNWFlZA%3D%3D.

[29] For a study on the perception of different types of uncertainty in machine learning, see Téo Sanchez, Baptiste Caramiaux, Pierre Thiel, and Wendy E. Mackay, “Deep Learning Uncertainty in Machine Teaching”, in 27th International Conference on Intelligent User Interfaces (IUI ’22), ACM, New York, NY, USA, 2022, 173–190. https://doi.org/10.1145/3490099.3511117.

[30] Johanna Drucker, “Humanities approaches to graphical display”, in Digital Humanities Quarterly, 2011, vol. 5, no 1.

[31] Lone Koefoed Hansen and Peter Dalsgaard, “Note to self: stop calling interfaces ‘natural’”, in Proceedings of The Fifth Decennial Aarhus Conference on Critical Alternatives (CA ’15), 2015.

[32] Eryk Salvaggio, “Interface as Stage, AI as Theater”, cyberneticforests.substack.com, 2023.

[33] Amelia Wattenberger, “Why Chatbots Are Not the Future”, wattenberger.com, 2023. Available at: https://wattenberger.com/thoughts/boo-chatbots.

[34] One example among many others: Xingang Pan, Ayush Tewari, Thomas Leimkühler, Lingjie Liu, Abhimitra Meka, and Christian Theobalt, “Drag Your GAN: Interactive Point-based Manipulation on the Generative Image Manifold”, in ACM SIGGRAPH 2023 (SIGGRAPH ’23), ACM, 2023.

[35] See for example Kate Crawford, Atlas of AI: Power, Politics, and the Planetary Costs of Artificial Intelligence, New Haven London: Yale University Press, 2021.

[36] Feinberg, op. cit.

[37] Steve Krug’s book entitled Don't Make Me Think, initially published in 2000, is a bestseller in the interaction design field.

[38] Silvio Lorusso, The User Condition: Computer Agency and Behavior, 2021. Available at: https://theusercondition.computer/.

[39] See for example Jules Françoise, Baptiste Caramiaux, and Téo Sanchez, “Marcelle: Composing Interactive Machine Learning Workflows and Interfaces”, in The 34th Annual ACM Symposium on User Interface Software and Technology, 39‑53. UIST ’21, New York, NY, USA: Association for Computing Machinery, 2021. https://doi.org/10.1145/3472749.3474734; or Alex Bäuerle, Ángel Alexander Cabrera, Fred Hohman, Megan Maher, David Koski, Xavier Suau, Titus Barik, and Dominik Moritz, “Symphony: Composing Interactive Interfaces for Machine Learning”, in Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems, 1‑14. CHI ’22. New York, NY, USA: Association for Computing Machinery, 2022. https://doi.org/10.1145/3491102.3502102.

[40] David Gray Widder and Dawn Nafus, “Dislocated Accountabilities in the AI Supply Chain: Modularity and Developers’ Notions of Responsibility”, 2022. Available at: https://doi.org/10.48550/ARXIV.2209.09780.

[41] Jérémie Poiroux, Nolwenn Maudet, Karl Pineau, Émeline Brulé, and Aurélien Tabard, “Design Indirections: How designers find their ways in shaping algorithmic systems”, in Computer Supported Cooperative Work (CSCW), 1-32, 2023.