According to the essayist and memoirist Rebecca Solnit, to be lost is 'to be fully present, and to be fully present is to be capable of being in uncertainty and mystery.' Solnit considers being lost 'a psychic state achievable through geography'. The flip-side is that geography can also prevent one from getting lost. It is easier to find one's way in the grid-like cities of the new world than in the swerving cobblestone streets of the old. A similar evolution of efficiency is happening on the web. The new world, in this analogy, is Web 2.0, and its shape is neither grid- nor weblike. Increasingly, it's a point of convergence.

Early in the year 2012, in an essay in the New York Times Sunday Review, Evgeny Morozov pronounced the death of the cyberflâneur. One could be forgiven for never having heard of this ill-fated figure, but for those whose use of the web stretches back to the 1990s, its behavioral description would certainly be familiar. The cyberflâneur – like the original flâneur – strolls, wavers, and drifts through the web's streets without any immediate plan or purpose. Or used to, anyway. '[The web is] no longer a place for strolling', Morozov writes in his eulogy. 'It’s a place for getting things done.'

What Morozov gets at for the most part is not some change in online users' behavioral patterns, but a more fundamental shift in the web's infrastructure, in the way its pages are run and in the way they interconnect. What killed the cyberflâneur, ultimately, is an inability to get lost online.

This is more significant than it seems at first glance. 'Not to find one's way around a city does not mean much,' Walter Benjamin once remarked. 'But to lose one's way in a city, as one loses one's way in a forest, requires some schooling.' The question is: what happens if that schooling becomes insufficient?

1949, or, the Aleph

It takes great prescience to pin down a century before it has even half passed. One of the characters in Jorge Luis Borges' short story The Aleph - written in 1949 but set in 1941 - launches into a bold statement with the words 'this twentieth century of ours', as if its days had already come and gone. You can picture his arm sweeping panoramically across space as if it represents the perfect metaphor for that other dimension, time. But 1941 or not, his claim would turn out to be so prophetic that it might describe the 21st century even more than his own: 'this twentieth century of ours has upended the fable of Muhammad and the mountain – mountains nowadays did in fact come to the modern Muhammad.'

For isn't that what technology hath wrought? Nowadays, we don't go to the post office; it comes to us in the form of email. We don't go to the store; we buy online and have our needs sent home. We don't go to the library; we Google.

'The Net is ambient', architect and urban design pioneer William J. Mitchell wrote in 1996, 'nowhere in particular but everywhere at once'. This was exactly what Borges had been getting at, half a century before. The eponymous aleph in his story was a point in space 'only two or three centimeters in diameter', which contained the whole universe, 'with no diminution in size. Each thing was infinite things.' Borges, however, felt it was impossible to describe the aleph, for it was simultaneous whereas language was successive. For all his prescience, he would likely not have predicted this mystical vision of his to come true so quickly. Nowadays, we call it the cloud. The cloud is everywhere. You have only to stick your laptop or phone or watch in the air, and you will see that every point in space at any point in time contains everything – or everything that can be expressed in data.

'You do not go to it; you log in from wherever you physically happen to be', Mitchell wrote in 1996. He described 'the Net' in hushed, almost sacrosanct terms. Logging in was an act of 'open sesame', he wrote. Another commentator, Marcos Novak, elaborating on his concept of 'liquid architecture in cyberspace', was thinking along the same lines when he wrote that 'the next room is always where I need it to be and what I need it to be.' Their point, and that of many others, was grounded in the utter anti-spatiality of what had come to be known as cyberspace. Surely, if they were right, if cyberspace was a kind of Borgesian point of convergence, that raises the question: why did we insist on calling it cyberspace anyway?

What follows is a story not of how space was conquered and lost, but rather how an idea of space came and went. It is about how we are to understand the new virtual reality, and our place in it.

1865, or, Down the Wiki-hole

'Down, down, down,' Lewis Carroll wrote in 1865, as the little girl Alice fell down the rabbit hole into the deep dark well. 'Would the fall never come to an end?' Surely, that accurately describes the feeling of browsing and surfing online. There always seem to be more links to click on, more unfathomable curves ahead.

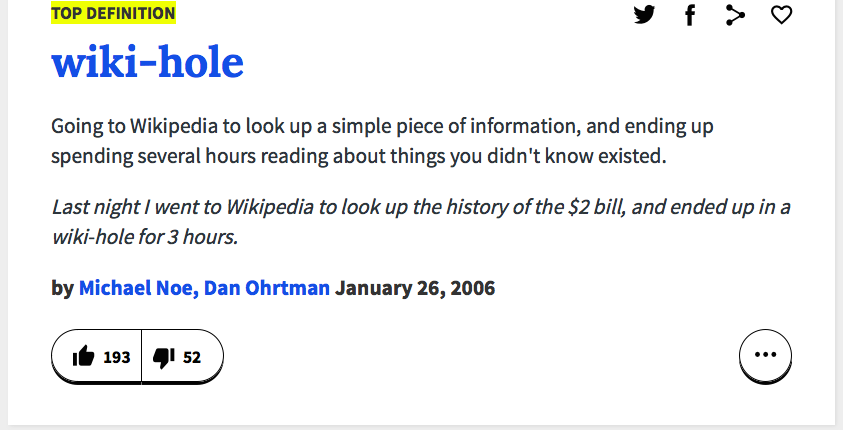

Today, we tumble down the wiki-hole. This term itself is a niche phenomenon at best; when the first few search results are Urban Dictionary definitions, you roughly know where you're at. Nevertheless, surely what the term denotes is familiar to many people who use Wikipedia on a semi-regular basis. Here is the top definition on Urban Dictionary:

Wikipedia, with its plethora of intra-subject links, now seems the perfect place to get lost online. But the fact that it seems this way is in itself telling. When the web came to fruition in the 1990s, this roaming pattern was the main modus operandi online. It is what lodged into popular culture all the explorative metaphors we still employ: navigation, surfing, and – most poignantly of all – cyberspace. 'Reading about things you didn't know existed' was part and parcel of the experience online. It was, simultaneously, both its greatest charm and problem.

1980, or, the Metaphor

If the Net was indeed 'profoundly antispatial', as Mitchell had insisted, then what precisely was the deal with the persistent spatial metaphors? Didn't they suggest the user's experience online was very much a spatial one? Mitchell himself called his book City of Bits, whereas Novak spoke of his liquid architecture as 'spatialized music'. There seemed to be a necessity to the layer of metaphor.

However, not everyone agreed. A 2002 research article by Farris et al. - whose title scathingly asked 'what is so spatial about a website anyway' - perfectly represented the cynics: 'It is frequently assumed that users' schemata contain spatial information about how the pages of a website are interconnected. However, it is not clear how these schemata could contain such information when none is presented to the user while he/she is exploring the website.'

Such an argument against the popularized image of cyberspace as space was a widespread but ultimately rather puzzling one. It betrays a misunderstanding of the use of metaphor. In their influential 1980 tract on the subject, Metaphors We Live By, George Lakoff and Mark Johnson described the spatial metaphor in particular as 'one that does not structure one concept in terms of another but instead organizes a whole system of concepts with respect to one another.' In other words, the metaphor is not applied to draw attention to some inherent spatial quality in its subjugated object, but rather to conjure up precisely such a spatial organization.

Of course, the logical explanation for the proliferation of spatial metaphor online is precisely the non-spatiality of the digital environment. If it were inherently spatial, there would be no need to apply a spatial metaphor in the first place. The process of navigating the web strongly informs the spatial metaphor, recalling as it does wayfinding and exploration. While a sequential series of pages suggests a one-dimensional space, the fundamental idea on which hypertext systems are based, namely the possibility of branching out repeatedly, allows this linear view to become fully spatialized. It becomes infused by all the roads taken as well as those not taken. When the user backtracks and follows other links, the mental spatial schema becomes a tree. When the user ends up in the same destination through two different routes, the lines intersect and form the beginnings of a map. Such an analysis is in line with philosopher Michel de Certeau's definition of space as 'a practiced place', an 'intersection of mobile elements'. According to de Certeau, it is precisely this act of the pedestrian traversing a place, an act of inscription, that turns place into space.

1984, or, Cyberspace

The term cyberspace was coined by author William Gibson in his 1984 novel Neuromancer, denoting 'a graphic representation of data abstracted from the banks of every computer in the human system'. It was quickly applied to the burgeoning World Wide Web at the beginning of the 1990s. Cyberspace, while often associated with the internet, has generally been defined in a broader sense, as an 'indefinite place' that came into being of necessity when our communication technologies increasingly facilitated communication at a distance. Cyberspace was the indefinite, virtual place where the two disembodied voices on the telephone, or the two disembodied actors in an instant messaging conversation, met. The new media theorist Lev Manovich has argued that one form or another of cyber- (or virtual) space has been around for a long time. He stretches the definition of the word 'screen' to include paintings and film, the point being that the boundaries of such a screen split the viewer's consciousness. She or he now exists in two spaces: 'the familiar physical space of her real body and the virtual space of an image within the screen'. For computer and television screens as well as for paintings, 'the frame separates two absolutely different spaces that somehow coexist.'

Moreover, throughout history the idea of space itself has largely been less rigid than it is now. As Margaret Wertheim shows in The Pearly Gates of Cyberspace, her treatise on the history of our perception of space, in past times there was another realm of space besides physical space. 'Just what it meant to have a place beyond physical space is a question that greatly challenged medieval minds, but all the great philosophers of the age insisted on the reality of this immaterial nonphysical domain' (italics mine). Wertheim takes as her prime example Dante's Divine Comedy (c. 1308-1321), whose protagonist moves through the realms of Hell, Purgatory, and Heaven.

Examples such as these suggest that the virtual has always been spatial, that the imaginary realm has always been, in fact, a realm through which the dreamer could move. Such a legacy can for instance be found in the ancient custom of constructing memory palaces, which arranged facts or ideas relative to each other in an imagined building in order to help recall them through associative thought. This act of memory palace creation is today mirrored in the practice of mind mapping, a popular technique for progressing a line of thought through tracing its structure and relations and outlining these spatially. Both memory palaces and mind mapping hew very close to Lakoff and Johnson's idea of 'organis[ing] a whole system of concepts with respect to one another.' And indeed, research shows that practising mind mapping can aid information recall by as much as 10%, while the benefits of the memory palace as a mnemonic device are much-lauded, and were recently reinvigorated through Joshua Foer's bestseller Moonwalking with Einstein.

1986, or, the Spatial Turn

Perhaps not coincidentally, cyberspace developed largely in concurrence with the postmodern movement in philosophy, and more specifically with something that has come to be known as the spatial turn. The idea is that in the past the temporal dimension had always dominated the spatial one. This dominance was informed by classical Marxist historicism and the idea of technological and societal progress towards some putatively utopian future. However, as the 20th century progressed, some thinkers started to argue that those days were gone. In 1986, Michel Foucault boldly pronounced that 'the present epoch will perhaps be above all the epoch of space.' He proceeded: 'We are in the epoch of simultaneity: we are in the epoch of juxtaposition, the epoch of the near and far, of the side-by-side, of the dispersed. We are at a moment, I believe, when our experience of the world is less that of a long life developing through time than that of a network that connects points and intersects with its own skein.'

This spatial turn was informed by several things. Not long before, Marshall McLuhan had already pronounced the 'global village': a world more intimately connected than ever before due to high-speed traveling and widely dispersed mass media. Globalization, in other words, was afoot. But the spatial turn was also indirectly inspired by Einstein's relativity theory, which trickled down into the humanities and social sciences and partly informed theories of postmodernism. These argued, among other things, against intrinsic values. The value of something, postmodernists argued, is always determined in relation to that which surrounds the thing. And crucially, according to them, this constant relativism was already in us. As art critic John Berger put it:

It is scarcely any longer possible to tell a straight story sequentially unfolding in time. And this is because we are too aware of what is continually traversing the storyline laterally. That is to say, instead of being aware of a point as an infinitely small part of a straight line, we are aware of it as an infinitely small part of an infinite number of lines, as the centre of a star of lines. Such awareness is the result of our constantly having to take into account the simultaneity and extension of events and possibilities.

Indeed, the ‘lateral story’ soon after became all the hype. In 1979, a then little-known Italian writer by the name of Italo Calvino released a short novel called If on a Winter's Night a Traveler. It was the story of two avid readers caught unbeknownst in the throes of this ‘zeitgeist’. In alternating chapters, it describes the reader (called 'You') and his futile attempts to continue reading the story he was reading. Every time he thinks he finds another copy of the book, it turns out to be another story. As such, we are not just reading two intertwined stories, namely the attempts of the reader to read the book and the book he reads, but several more: every book he finds turns out to be wholly separated from the former one.

And yet we experience all the separate stories as one, for the simple reason that they are offered to us as such. Calvino's novel in a way provides a rebuttal to Farris et al.'s aforementioned scepticism regarding the usage of spatial metaphor online. In Calvino's isolated book openings, just as on websites, hardly any information 'is presented to the user' on 'how the pages […] are interconnected'. True, websites span different authors, and their sequencing is flexible. Nevertheless, the point is that in hypertext, spatial representation becomes a job the reader performs, as opposed to the author.

Soon after, inspired by Calvino and other proto-hypertextual exercises such as Julio Cortázar's Hopscotch and Jorge Luis Borges' 'The Garden of Forking Paths', hypertext fiction became the new grand project of the literary scene. Much speculation exists on why it never really took off. Maybe the hypertext authors were dispirited by the advent of the web soon after. In a way, the web was hyperfiction's grand statement already: one great text that featured all stories inside it, and that fictionalized fact and factionalized fiction.

Umberto Eco once said if he could take one book to a desert island, he would take the New York Phone Book, for ‘it contains all the names of the world, and therein you can imagine an infinite series of stories with infinite characters’. Perhaps, the web was the New York Phone Book of hyperfiction.

1989, or, the World Wide Web

I was born in the same year as the World Wide Web, AD 1989. While this might constitute an excuse to infuse this piece with personal reminiscing, it is of course little more than mere coincidence. True, it is tempting to say we grew up together, but the analogy would be wholly misplaced. There is no sense in equating my growing up with the ‘growing up’ of an idea or network, in which latter case we are speaking in purely metaphorical terms. The concurrence of my own start in life with the web's is at best 'vague, but exciting', as Tim Berners-Lee's first proposals on the whole project were deemed by his supervisor.

The World Wide Web was a victory of spatiality. No longer would we read and think and write sequentially, gliding along the red narratival thread of the temporal dimension. We would branch out, branch out, and branch out again; we would digress eternally. No text would ever be finished, nor would it ever have to be. We would 'traverse the storyline laterally'.

As the above speculations on the supposed spatial turn showed, it is likely that an idea such as Berners-Lee's was simply in the air. After all, in that same year of 1989, the Berlin Wall fell and American political scientist Francis Fukuyama pronounced the end of history and the victory of what he called 'western liberalism'. What does this end of history spell if not the end of the temporal dimension? Both the World Wide Web and the supposed end of history only confirmed this idea of the spatial turn, of the 'simultaneity and extension of events and possibilities' that John Berger wrote about. Just as the Aleph would later be reified in the cloud, so the global village was to find its ultimate expression in the World Wide Web. That, at least, was the idea.

1994, or, Lost in hyperspace

In 1994, as part of an advertisement campaign created to help launch Windows 95, Microsoft asked the unsuspecting computer user: 'where do you want to go today?' Clearly, back then, cyberspace was a space to travel in. When the pioneers of online culture talked of cyberspace as the final frontier, they were only half-joking. They were explorers. They didn't actually know where they were going with this thing; that was the whole point.

Alice's kooky adventures in Wonderland started with her mere bewilderment at a talking rabbit. The Urban Dictionary user's romp through Wikipedia started by looking up 'a simple piece of information'. Both these examples seem to suggest there is little control on the side of the unsuspecting Wikipedia user, that the visitors of the website are pulled down into these worlds willy-nilly. This was a common enough experience in the early days online, and it was called the ‘lost in hyperspace’ problem. According to Wikipedia, which has an uncharacteristically (certainly for an ‘internet lore’ kind of topic) short article on the subject, the problem pertains to 'the phenomenon of disorientation that a reader can experience when reading hypertext documents.' Here, once again, the cyberspace metaphor helps us out: it is easy enough to imagine the lonely astronaut, helplessly hovering away into the more secretive chapters of the vast galaxy.

Microsoft, perhaps, was trying to hit two sweet spots at once. The open-ended question 'where do you want to go' hints at the fathomless depths of the virtual universe: ‘the sky is the limit’. Meanwhile, the very fact of the question itself, its outstretched hand, suggests that whatever the intended destination, the good people and paper clips of Microsoft will guide you there.

However, this guiding wasn't particularly easy in those days. Before Google and others had fully indexed and algorithmically ordered the majority of web pages, a destination had to be approached step by step. Needless to say this was cumbersome, but in hindsight it had benefits. This process of browsing – approaching the subject incrementally – instilled in the mind an idea of how various texts fit together. The whole search trail is like a route through a sea of knowledge, and its specific parameters are informative in and of themselves. Research shows trail-based browsing to be beneficial in all manner of ways, including recall of information and covering the full scope of a topic.

Other helpful tools, such as site maps and graphs that plot out the structure of a web site, are similarly beneficial. The site map especially used to be ubiquitous in the pre-Google days. As with search trails, research shows that providing users with a map or graphical representation of how a website's pages fit together helps understanding and recall of information. Such research notwithstanding, the site map has all but disappeared due to improved and more efficient search methods. While it is entirely understandable that the practice has fallen by the wayside – after all, the search goal online tends to be short-term necessity and not long-term learning – there might nevertheless be something to be said for a more synergetic solution. For instance, search engines could offer not just single page search results, but search trails – sequenced sets of web pages – that aptly fit the search query. After all, the required information is often enough not found on just any single page, but across a larger swathe of the web's territory.

2001, or, Harder, Better, Faster, Stronger

Today, a quarter century after the fact, Francis Fukuyama's ‘end of history’ seems hopelessly naïve. The temporal dimension had been proclaimed dead too early. If we allow popular culture to speak for us, in 2001 it was boldly stating that we still wanted to go harder, better, faster, stronger. Perhaps the spatial turn existed, but that didn't mean it was desired. McLuhan, before he pronounced the global village, might have paid more attention to the words Henry David Thoreau wrote in Walden: 'We are in great haste to construct a magnetic telegraph from Maine to Texas; but Maine and Texas, it may be, have nothing important to communicate.' Similarly, it seemed more and more as if the denizens of the World Wide Web ultimately did not really want to 'traverse the storyline laterally'. Studies showed that web traffic became more concentrated over time. Everyone linked to the same few websites. If there was a flow of traffic, of interest, online, it did not run from the inner to the outer regions – from generic to weird, as the wiki-hole example might suggest – but rather the inverse way, from periphery to core. What was starting to surface was that these exploratory affinities of the web were considered not a strength, but a nuisance. What defined the websites in the core was a focus on time-effective use, a focus on giving people what they need and what they need only. These people, it seemed, wanted the ring, not the quest, and they didn't want to spend nine hours getting there.

It is not at all surprising that the ‘reintroduction’ of the temporal dimension online happened in concurrence with the web's commercialization from 1994 onwards. In a brave new world whose denizens' number one annoyance was slow web page loading times, time literally equalled money. The focus of this brave new world, then, would be the search engine. Google, while started as an academic exercise, quickly opted into a commercial model that joined advertising to search queries. It was almost as if we were witnessing a kind of historical re-enactment of Marx's 'annihilation of space by time'. Not only did Google's algorithms drop users blindly in the sea of knowledge (not knowing whether they could swim or not), but the erosion of slow loading times similarly annihilated the illusion of space. Waiting as you surfed from one web page to another was a little bit like traveling, after all. One user quoted by James Gleick in his book Faster literally spoke of 'the spaces created by downloading web pages'.

This second attack on the illusion of space recently reached its zenith in Facebook's new Instant feature. This mostly plays out on the newer battlefield of the mobile web, in which connections are on average still slower. With Instant, Facebook urges quote-unquote content creators (those formerly known as writers and artists) to allow it to host their articles within its social media platform, in order for the articles to 'load instantly, as much as 10 times faster than the standard mobile web'. As a result, the user no longer has to leave the clean blue homepage in order to read articles scattered across the web. The mountain, indeed, has come to Muhammad.

It almost seems sometimes as if we just can't win. First we browsed and surfed in a carefree, antediluvian world, pushed the frontier and ended up lost in hyperspace. Then, increasingly being able to pinpoint all the scattered pages, we stopped being lost, but forgot where precisely it was we were, adrift in a darkly hued sea. What we lost online, it seemed to some, was the space for serendipitous discovery.

2004, or, Web 2.0, or, the Filter Bubble

In hindsight, it is tempting to read every development of the web since its beginning as a move from explorative to more extractive uses. Certainly, the propagators of the modern web would have you believe this teleological narrative. They like to say that Web 2.0 and everything that coincides with it was not simply an option or an ideological turn but rather the fulfillment of the web's participatory and democratic promises.

This claim of democratization was a common one in the early days. It is largely based on the decentralized structure of the network, whose nodes are connected many-to-many as opposed to many-to-one. The general Silicon Valley version of this story is that this decentralization is rooted in the counter-cultural attitude of the internet's inventors. This is a myth. The internet was largely developed in the USA's military organizations, and its decentralization was a simple precaution to make it harder for America's enemies to disrupt the network and its flow of information.

Nevertheless, the result does indeed hold the promise of a more participatory and democratic medium. Sadly, as I explained elsewhere, there is a large gap here between the theory and the practice, between promise and implementation. Despite all its claims, the Web 2.0 revolution is in part responsible for recentralizing the information flow into the hands of a few large platforms. The specifics of Google's PageRank algorithm make sure that those already big will only get bigger. The crucial moral of the story is that being able to speak is not the same as being heard.

This newfound centralization, which has been theorized and modeled by Barabási and Albert, and elaborately scrutinized by Jaron Lanier and Astra Taylor, among others, also affects the spatiality of the web. Online navigation increasingly runs through these hubs. Users reach websites through social media and search engines. Instead of treating the page they land on as the starting point for surfing, they backtrack to their ‘portal’ or hub, and reach out again. This negates the beneficial effects of the mental spatial schemas made by browsing sessions and the associative connections drawn through search trails. Moreover, cloud computing and dynamically generated websites have also destabilized link structures by making it increasingly difficult to point and link to specific information.

Today it is easier than ever to find what you are looking for. This seems to have come at the cost of making it more difficult to find what you are not looking for, what you might be interested in, or what you can't aptly put in words. This is partly due to web services learning about you and tailoring their information to your previous ‘needs’. This process has been popularized as the ‘filter bubble’ by Eli Pariser in 2011.

While this filter bubble in itself is a worrisome development, what is discussed here is to some extent beyond it. Pariser's main concern is that when you look up information on, for example, the war in Syria, you only get viewpoints that confirm your pre-existing beliefs. My concern is that if you are unfamiliar with an ongoing war in Syria, you'll find it increasingly difficult to find out about its existence at all. If we only reach websites by typing in an already known search term in Google or by navigating from our tailored walls in Facebook, we collectively seal ourselves in a closed loop of our own interests. We aren't just far from the frontier; it is no longer signposted.

2007, or, the Cloud

I know very well that I rely heavily on metaphors, both as a subject (the spatial metaphor) and as a literary, explanatory device. Of course, it is easy enough to forget that the web itself is a metaphor, one that also contributes to reinforcing the idea of the online realm as spatial. But surely if the web was the great metaphor of the 1990s, the cloud has become the structuring term from the early 2000s onwards.

Cloud computing is a way of pooling and sharing computer power in the form of servers and storage, on demand and to scale. Data is usually stored in ‘remote’ data servers and copied and divided across multiple connected servers, and therefore is accessible from anywhere (kind of) at any time (sort of). All that is required is a little whiff of Mitchell's 'open sesame': an internet connection, sufficient bandwidth, and the holy act of logging in. Over the past years, both individuals and companies have started shifting from the use of local storage and computing power to these cloud services, spurred on by convenience and offers of seemingly unlimited disk space.

The metaphor of the cloud suggests a constant, disembodied and vapid data stream, implying that the world (as far as data is concerned) is now literally at our fingertips. There are religious overtones here, such as when Kevin Kelly talks of 'the One Machine'. Its promise of data available instantly, always and everywhere brings to mind the old philosophical concepts of nunc-stans and hic-stans, which represent respectively the eternal now and the infinite place. Thomas Hobbes long ago dismissed these as vacuous concepts that no one, not even their evangelical proclaimers, properly understood. The cloud reinvigorates them to the extent that it negates specific time and specific place. In reality, this infinite space is little more than an absence of spatiality, a phrase that evokes William J. Mitchell's analysis of the web as 'fundamentally and profoundly anti-spatial', back in 1996.

As we have seen before, such anti-spatiality actively invites us to spatialize it, but cloud computing's particular setup makes this difficult. If we recall Michel de Certeau's conception of space as practiced or traversed place, we can understand this absence of spatiality: the cloud is never traversed. It is like a library with closed stacks, where a few (automated) clerks retrieve the data from inside and the user remains ever outside.

'For the common user, the computer as a physical entity has faded into the impalpability of cloud computing,' Ippolita writes in The Dark Side of Google. This impalpability is manifested by a lack of place not just from a client's point of view, but also on the server's side. The file system, as we have known it over the past decades, evaporates. Ippolita, taking music playback devices as an example, explains how 'once uploaded on the device, music ceases to exist as a file (for the user at least) and ends up inside the mysterious cloud of music libraries from programs such as iTunes.' The same thing, of course, has happened with web pages: they are mere moulds filled with the most recent data, and the web page no longer exists as a unit of information, to be associatively structured in relation to other such units of information. Link structure as such ceases to function as a web of the interrelation of bits and pieces of information, and becomes more and more about the interrelation of whole information sets.

Of course, the fluffy metaphor of the cloud hides and obscures the physical reality of cloud computing, which is not nebulous at all. As a data center manager notes: 'In reality, the cloud is giant buildings full of computers and diesel generators. There's not really anything white or fluffy about it.' Physicist Richard Feynman (as quoted by Gleick in Faster) once spoke rather ominously on the nature of clouds: 'As one watches them they don't seem to change, but if you look back a minute later, it is all very different.' He was in fact talking of the real, fluffy kind of cloud, though one could certainly be excused for thinking otherwise.

2015, or, the Homogeneity Problem

So here we are, in that dark sea of knowledge we no longer need to leave. Or nebulous cloud – take your pick of metaphor. We have our friends here, our products, our articles. This dark sea finally contains the whole universe. But suddenly we realize that the whole universe now looks the same. It has all become the blue of some watered-down simulacrum of the real thing. It becomes harder and harder to distinguish.

I exaggerate, perhaps. But those who think content and form can be separated without consequence are mistaken. Those texts incorporated within the Facebook mould, those topics bled to the inoffensive grayscales of Wikipedia, are different texts than they would be anywhere else.

At the very least they make for different readings. In path finding and orientation studies, one identified environmental factor is differentiation, or distinguishability between places. The quite sensible idea is 'a building with halls and rooms all painted the same color would generally be harder to navigate than if the same building were painted with a colour coding scheme.' This principle seems to be even more important online, where the aforementioned absence of inherent spatiality means users have to rely on the navigational tools offered by the websites they visit. Already in 1990, a usability study by Jakob Nielsen on navigating hypertexts called this 'the homogeneity problem'. He experimented with using different backgrounds for different information to prevent disorientation. Unfortunately, user profiles on social networks and websites based on barely customized templates of content management systems like WordPress or Joomla are usually rather restrictive in their designs. The mobile web, due to the smaller screen size and slower loading times, offers less differentiation still. In one study contrasting desktop and mobile internet usage, people reported that browsing mobile web pages felt like browsing a 'restricted' version of the web. The computer allowed them 'more avenues to just kind of wander off'.

A website like Wikipedia, whose minimalistic design aims to be unobtrusive and make the information feel neutral, can become disorienting simply because every topic page is fit within the same template and within the same layout and (lack of) color scheme. Such a website could at least create the semblance of spatiality by color coding different topics or types of pages, creating the suggestion of distinct neighborhoods that the user can move through. If page layout is the decoration of the room online then, seen through analogy with the memory palace, all of Wikipedia's information is stored in the same room, eliminating the possibility for spatial structuring.
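To make the idea of colour-coded neighborhoods a little more concrete, here is a minimal sketch of how a template might derive a stable background tint from a page's topic. It is an illustration only, not something Wikipedia or any content management system is known to do; the function name `topic_color` and the default saturation and lightness values are assumptions made for this example.

```python
import hashlib


def topic_color(topic, saturation=45, lightness=92):
    """Map a topic name to a stable, pale CSS colour (hypothetical helper).

    The same topic always yields the same hue, so every page filed under
    it would share one tint, like houses in one neighborhood.
    """
    # Normalise the name so "History" and " history " land on the same hue.
    normalised = topic.strip().lower().encode("utf-8")
    digest = hashlib.sha256(normalised).digest()
    # Use the first two bytes of the hash to pick a hue on the colour wheel.
    hue = int.from_bytes(digest[:2], "big") % 360
    return f"hsl({hue}, {saturation}%, {lightness}%)"


if __name__ == "__main__":
    for topic in ["History", "Biology", "Poetry"]:
        print(topic, "->", topic_color(topic))
```

Hashing the name is only one possible choice. A hand-picked palette per subject area would probably give more meaningful districts, at the cost of someone having to draw the map.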

About half a year ago, in August 2015, word got out that, for the first time, Facebook was driving more traffic to websites than Google. It is for this reason that many ‘content providers’ comply with new developments like Facebook Instant Articles, even though it will mean that they will miss out on advertising income. We will be increasingly disoriented in a web on which content is no longer distinguished through form. These providers simply cannot afford to miss the boat out to sea, even if that boat looks rather shoddy and that sea beckons darkly.

2016, or, a Field Guide to Getting Lost

In 1859, a thirteen-year-old boy from Montevideo, Uruguay, was sent to France by his father to pursue a good education. He was called Isidore Ducasse, and would grow up to die as a poet called Comte de Lautréamont, destitute and unrevered, at the tender age of 24. He left behind one lengthy and bizarre prose poem called Les Chants de Maldoror as well as a collection of aphorisms posthumously released as Poésies. In a circuitous way, Ducasse's work would be the basis for many later art and protest movements, from the Surrealists to the Adbusters, and from the Situationists to the music of Girl Talk.

The Surrealists particularly loved Ducasse for his 'chance meeting on a dissecting table of a sewing-machine and an umbrella', a striking image that very much vouches for the power of the serendipitous encounter. The rebellious Lettrists and Situationists of the next generation, however, caught on to a short passage in the Poésies: 'Plagiarism is necessary. It is implied in the idea of progress. It clasps an author's sentence tight, uses his expressions, eliminates a false idea and replaces it with the right idea.' In his Society of the Spectacle Guy Debord put Lautréamont's ideas into practice, and stole them almost wholesale. Debord himself had co-developed the concept of détournement, which was a strategy of appropriation practiced primarily on cultural figures, fighting a capitalist culture with its own images. Détournement would find itself mirrored in culture jamming and adbusting, but it also underpins glitch theory. In her Glitch Studies Manifesto, Rosa Menkman notes that 'the perfect glitch shows how destruction can change into the creation of something original', thereby closely echoing the détournists. A glitch in this context is an interruption, a break in the flow of technology. Glitch theory actually poses an interesting solution to the problems that indistinguishable web pages pose. A glitch can function as a much-needed jolt to the user, recasting her or his attention to an oft-visited web page that has long since become banal. Similar to how the innocent questions of a child (‘what are clouds made of?’) can make us wonder about things in the world we have long since taken for granted.

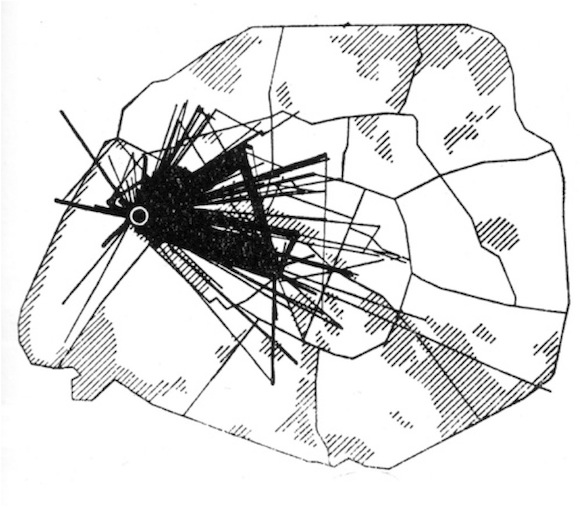

Glitch, Menkman writes, attempts to 'de-familiarise the familiar.' The Situationists attempted a similar thing through détournement, but also through the dérive, a way of drifting through the city without a predetermined goal, being led by 'the attractions of the terrain and the encounters they find there.' The story goes that the practice of dérive was inspired by a map drawn by the French sociologist Paul-Henry Chombart de Lauwe in 1952, which tracked the movements of one young girl in Paris for the duration of a year.

What surfaced in the picture was the limited scope of the girl's life during that year, and to Debord, this evoked 'outrage at the fact that anyone’s life can be so pathetically limited'. The dérive was developed to break this girl, and everyone like her, out of the ‘filter bubble’ of her urban life.

Not only did the method allow the Situationists to end up in new places, it also, rather like the glitch, made old places seem new by approaching and considering them in different ways and from different angles. Like glitch, it was very much a way of getting lost in the familiar.

The Situationists dreamed of a continuous dérive that kept the city in a state of constant flux. 'The changing of landscapes from one hour to the next will result in complete disorientation', writes Ivan Chtcheglov, one of the group's members, and it is clear he considers this a good thing. The phenomenologist Gaston Bachelard once wrote that the poetic image is 'essentially variational', and perhaps this can make us understand the real purpose and appeal of the continuous dérive: continuous poetry; or, as Situationist Raoul Vaneigem has it, 'not a succession of moments, but one huge instant, a totality that is lived and without the experience of “time passing”.' After all, for the Situationists, the point was 'not to put poetry at the service of revolution, but to put revolution at the service of poetry'. Poetry was the goal, not the method.

In a way, Web 2.0's dynamically generated websites do provide landscapes that are constantly changing and can seem to constitute a city in constant flux, but it is a calculated, mathematical flux. Websites have become, following Novak, liquid architecture: what is designed of them is 'not the object but the principles by which the object is generated and varied in time'. While for the Situationists (as well as for the early web pioneers) it was the act of dérive that allowed the familiar to seem unknown, on the present web the subsumption of content into the sinkholes of the Silicon Valley giants allows the unknown to seem familiar. If the goal is indeed poetry, the problem is the modern web's pragmatic, data-driven preference for quantity over quality. For isn't poetry inherently qualitative, fleeting, and ultimately incalculable? Poetry, above all things, seems beyond calculation.

It is therefore not at all surprising that attempts at coding Situationist-type subversion have proven difficult. Some cities' tourist offices now offer prefab psychogeographic tours of routes that, while they might have originated in a dérive, seem now to have been set in stone. This is antithetical to the Situationists' original intentions, as illustrated by Debord's remark that 'the economic organization of visits to different places is already in itself the guarantee of their equivalence'. While there are some net.art projects which attempt subversion in the spirit of the Situationists by the aleatory and auto-magical conjoining of various texts collected online, the results often feel rather formulaic. Not quite the chance meeting of a sewing-machine and an umbrella; merely the simulacrum of one. Auto-magical, after all, is just an automated process that seems magical. What we need is a magical process that – at least to some extent – works automatically.

However, while it is difficult to actually change the interrelationship and structure of the web, or the way the user navigates through it, there are tools available which make it at least possible to get to the outliers, the pages that are left unlinked to. A recent article dubbed these outliers The Lonely Web, and listed a host of services that help you explore it: 'Sites like 0views and Petit YouTube collect unwatched, “uninteresting” videos; Sad Tweets finds tweets that were ignored; Forgotify digs through Spotify to find songs that have never been listened to; Hapax Phaenomena searches for “historically unique images” on Google Image Search; and /r/deepintoyoutube, which was created by a 15-year-old high school student named Dustin (favorite video: motivational lizard) curates obscure, bizarre videos.'

More interesting still are some of the browsing techniques mentioned, like 'searching the default file formats for digital cameras plus four random numbers.' The word random is of course vital here. Wikipedia has a random article function (though some actually consider it too random), and there are a bunch of tools and websites that let you access a random web page. Once again the lesson here, though, seems to be that it isn't straightforward to get the right amount of serendipity through these kinds of automated services. Like the random Wikipedia function, the (relatively) unrestricted random website functions can quickly seem so random, so non sequitur, that they will most likely result in disorientation (not to mention the issues of running into viruses, malware, or pornography). This is why these methods are suboptimal, though they could potentially serve as a launching pad for a serendipitous browsing session.
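For the curious, the camera-filename technique quoted above is easy to try out. What follows is only a rough sketch: the prefixes listed are common camera defaults chosen for illustration (they do not come from the article quoted), and the names `CAMERA_PREFIXES` and `random_camera_query` are invented for this example. The resulting string would still have to be pasted by hand into a video or image search box.

```python
import random

# Assumed examples of default filename prefixes used by digital cameras.
# They are illustrative only; other devices use other defaults.
CAMERA_PREFIXES = ["IMG_", "DSC_", "DSCN", "MVI_"]


def random_camera_query(rng=None):
    """Return a search term such as a prefix followed by four random digits."""
    if rng is None:
        rng = random.Random()
    prefix = rng.choice(CAMERA_PREFIXES)
    number = rng.randint(0, 9999)
    # Zero-pad so the number always has four digits, as camera files do.
    return f"{prefix}{number:04d}"


if __name__ == "__main__":
    for _ in range(5):
        print(random_camera_query())
```

The randomness here is deliberately narrow: the prefix keeps the search within the realm of unedited, never-renamed uploads, while the four digits decide which of them turns up.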

On the other hand, the random website services that algorithmically send a user to a personalized recommendation (like StumbleUpon) in a way defeat their purpose and leave their user stuck in the filter bubble.

The other Situationist practice, détournement, might in a sense be more synergetic with the modern web. Aside from glitch theory, hacking (more specifically hacktivism) also offers obvious opportunities for a digital détournement. Successful hackers can insert their subversive message within the very context and confines of the original. In many of the more publicized cases, this process unfolds rather brusquely. The hacker replaces the whole page with their message. Because it is so obvious, the damage is quickly detected and restored. Hackers would do well to take a page from the book of Lautréamont: clasp an author's sentence tight, eliminate a false idea, and replace it with the right idea.

Of course, hacking into and ‘defacing’ other people's property is illegal for good reasons, and is not the way forward, but an innocuous attempt at such Lautréamontian ‘improval’ of websites can be achieved through client-side ‘hacks’ such as browser plug-ins. The plug-in Wikiwand, for instance, redesigns any Wikipedia page a user visits, 'making it more convenient, powerful and beautiful'. While it does not necessarily fix the problems with Wikipedia I discussed, the possibilities in this approach are clear. There are also some browser plug-ins which can help the user create spatial schemata. The Firefox plug-in Lightbeam, for instance, while mostly used for shedding light on third-party tracking websites, does much to create a graphical representation of the interrelation of visited websites – a map, if you will. Other plug-ins reshape navigational functions of the web browser such as the back and forward buttons, extending the browser's memory so that it remembers all the branches taken and backtracked upon, and not just the last straight path. Another plug-in orders open browser tabs according to their relation to each other, turning the tab bar into various hierarchical trees. The same feat is possible for ordering the user's complete browsing history.
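The difference between a browser's ordinary back button and a history that remembers every branch can be sketched in a few lines. This is a toy model under stated assumptions, not the workings of any actual plug-in: the class names `Page` and `BranchingHistory` and the placeholder page names are hypothetical.

```python
class Page:
    """One visited page, linked to the page it was reached from."""

    def __init__(self, url, parent=None):
        self.url = url
        self.parent = parent
        self.children = []


class BranchingHistory:
    """A history that keeps backtracked branches instead of discarding them."""

    def __init__(self, start_url):
        self.root = Page(start_url)
        self.current = self.root

    def visit(self, url):
        # Following a link adds a new branch under the current page.
        page = Page(url, parent=self.current)
        self.current.children.append(page)
        self.current = page

    def back(self):
        # Going back moves up the tree; the branch left behind is kept.
        if self.current.parent is not None:
            self.current = self.current.parent

    def outline(self):
        """Return the whole tree as indented lines; '*' marks the current page."""
        lines = []

        def walk(page, depth):
            marker = " *" if page is self.current else ""
            lines.append("  " * depth + page.url + marker)
            for child in page.children:
                walk(child, depth + 1)

        walk(self.root, 0)
        return lines


if __name__ == "__main__":
    history = BranchingHistory("home")
    history.visit("a")
    history.visit("b")
    history.back()
    history.back()
    history.visit("c")
    print("\n".join(history.outline()))
```

In an ordinary browser, visiting "c" after backing out of "a" and "b" would erase that detour from the forward history. Here it survives as a side branch, which is what turns a path into something closer to a map.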

Such browser plug-ins, as well as hacks, glitches and conscious Situationist browsing strategies, while providing only temporary and transient solutions, do offer a way of getting back to the explorative power of the early web, a way of making the found, the banal and trite, lost again. They reinstate the geography through which to achieve that psychic state of being lost and prove that, in the end, sometimes the first step to finding out where you are is admitting you are lost.

The novelist Walker Percy once wrote: 'Before, I wandered as a diversion. Now I wander seriously and sit and read as a diversion.' If nothing else, this is to urge you to revel in the paradox of wandering seriously.

Jeroen van Honk is a writer living in Leiden, The Netherlands. He holds a B.A. in Linguistics and an M.A. in Book and Digital Media Studies. He is intrigued by what technology is doing to us. His work is divided between fiction and non-fiction and can be found at jeroenvanhonk [DOT] com.

References

Gaston Bachelard, The Poetics of Space, Boston: Beacon Press, 1994.

Walter Benjamin, Berlin Childhood around 1900, Cambridge: Harvard University Press, 2006.

John Berger, The Look of Things, New York City: Viking Press, 1972.

Jorge Luis Borges, 'The Aleph', The Aleph and Other Stories, London: Penguin, 2004.

Italo Calvino, If on a winter's night a traveler, San Diego: Harcourt, 1982.

Lewis Carroll, Alice's Adventures in Wonderland, London: Macmillan, 1865.

Michel de Certeau, The Practice of Everyday Life, Berkeley: University of California Press, 1984.

Guy Debord, The Society of the Spectacle, London: Notting Hill Editions, 2013.

Joshua Foer, Moonwalking with Einstein: The Art and Science of Remembering Everything, London: Penguin, 2011.

William Gibson, Neuromancer, New York City: Ace, 1984.

James Gleick, Faster: The Acceleration of Just About Everything, London: Abacus, 2000.

Ippolita, 'The Dark Side of Google: Pre-Afterword – Social Media Times', in R. König and M. Rasch (eds.), Society of the Query Reader, Amsterdam: Institute of Network Cultures, 2014, pp. 73-85.

Lev Manovich, The Language of New Media, Cambridge: MIT Press, 2001.

Walker Percy, The Moviegoer, London: Paladin Books, 1987.

Rebecca Solnit, A Field Guide to Getting Lost, Edinburgh: Canongate, 2006.

Bruce Sterling, The Hacker Crackdown, New York City: Bantam Books, 1992.

Henry David Thoreau, Walden, Boston: Ticknor and Fields, 1854.

Margaret Wertheim, The Pearly Gates of Cyberspace, New York City: W. W. Norton & Company, 1999.