This is the second blog post of a multi-part series making sense of THE VOID’s online video practices in the context of cybernetic participatory culture, the legacies of tactical media, stagnating platformization, encroaching AI, and the nascent Stream Art Studio Network. Read part one here.

🟢 🟢 🟢

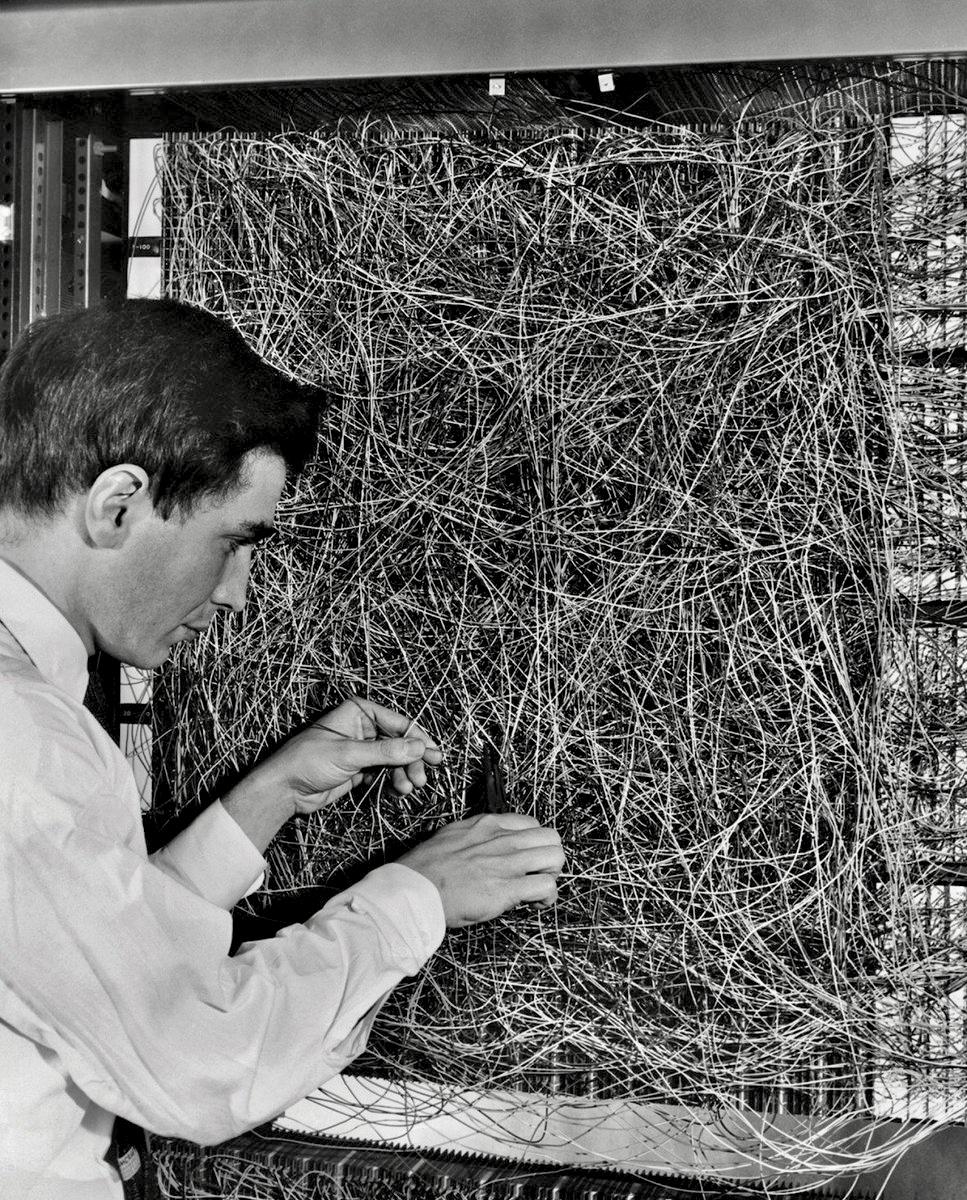

Frank, my brother in Christ, we can relate. Cable management gets messy for us too. The comments on the Reddit thread where I got this picture from also relate:

The perceptron was theoretically conceived in 1943 by “AI Pioneers”, famous MIT people, and later Cold War engineers Warren McCulloch and Walter Pitts. But it wasn’t until 1957 that it was first implemented in hardware by Frank Rosenblatt. As depicted in the picture, hardware implementation seems to be simply a matter of connecting a lot of cables. That is, using cables to manage inputs and outputs. But what is a perceptron? According to Wikipedia (we’re not getting entangled in the weeds just yet):

“in machine learning, the perceptron (or McCulloch–Pitts neuron) is an algorithm for supervised learning of binary classifiers. A binary classifier is a function which can decide whether or not an input, represented by a vector of numbers, belongs to some specific class. It is a type of linear classifier, i.e. a classification algorithm that makes its predictions based on a linear predictor function combining a set of weights with the feature vector.”

In brief, a perceptron is a complicated method for processing input into output through statistical classification techniques. Rather than going into (that much) technical detail on how this cable-connecting works, I am for now more interested in what Rosenblatt was attempting to do with it. What sort of scientific or, more broadly speaking, philosophical and cultural problem was he trying to approach?

In his recently published book, The Eye of the Master, Matteo Pasquinelli notes that the Mark 1 perceptron is the hardware translation of the mathematical idea of the “statistical separability of data in a multidimensional space”[1]. What this idea entails is that classification problems can be tackled in a different way: rather than sorting out stuff through a set of given rules (deducing the order of things from universal principles), it is also possible to establish correlations or recognize patterns in a random set of elements without defining organizing principles beforehand. This is the idea that the map of a distribution can be created bottom-up; that it is possible to generate order from chaos with the help of techniques of approximation.

Basically, if you happen to have a classification problem (a bunch of unsorted cables, for example) for which you assume there is a solution (HDMI cables go in the nice Rush Hour tote bag, audio cables in the IKEA bag), you can start by choosing a random cable (an XLR audio cable, let’s say), storing it in a random bag (the Rush Hour tote bag), and then comparing the result to the known solution (does this look like an HDMI cable?). If there is a mismatch between the current output and the desired one, you just try again. This time you know, however, that cables sharing features with the XLR cable (length, color, pin type, etc.) should be avoided, so you try with different types of cables until you arrive at the desired outcome (HDMI cables stored in the Rush Hour tote bag).
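For readers who like to see the trial and error spelled out, here is a minimal sketch of the classic perceptron learning rule applied to the cable problem. It is not Rosenblatt’s hardware, and everything specific in it is an assumption made for illustration: each cable is described by three yes/no numbers (rectangular pin, long, gray), the four sample cables are made up, and label 1 stands for the Rush Hour tote bag while label 0 stands for the IKEA bag.

```python
# Toy perceptron, pure Python. All data and feature names are hypothetical.
# Each cable is [has_rectangular_pin, is_long, is_gray]; label 1 = tote bag, 0 = IKEA bag.

def predict(weights, bias, features):
    """Weighted sum of the features, thresholded at zero."""
    activation = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if activation > 0 else 0

def train(samples, epochs=20, learning_rate=1.0):
    """Classic perceptron rule: only adjust the weights after a wrong guess."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for features, label in samples:
            error = label - predict(weights, bias, features)  # -1, 0, or +1
            if error != 0:
                mistakes += 1
                # Nudge each weight toward the desired answer.
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, features)]
                bias += learning_rate * error
        if mistakes == 0:  # a full pass without mismatches: stop trying
            break
    return weights, bias

CABLES = [
    ([1, 1, 1], 1),  # HDMI: rectangular pin, long, gray
    ([1, 0, 0], 1),  # HDMI: rectangular pin, short, not gray
    ([0, 1, 1], 0),  # XLR: round pin, long, gray
    ([0, 0, 1], 0),  # jack: round pin, short, gray
]

weights, bias = train(CABLES)
for features, label in CABLES:
    print(features, "->", predict(weights, bias, features), "(expected", label, ")")
```

On this tiny, made-up data set the rule stops after two passes and ends up putting all of its weight on pin shape, leaving length and color at zero. With real, messier cables there is no guarantee of such a tidy outcome.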

Some of these features might be relevant (pin type), others irrelevant (color and length) for our classificatory purposes. In machine learning lingo, each of them can be considered a dimension along which a classificatory decision can be made. These dimensions are used to assign specific coordinates to each of the elements to classify. So, if we have a long gray cable with a rectangular pin, we could assign it the coordinates [long; gray; rectangular pin]. ⟵This is a feature vector. It is composed of three dimensions [length; color; pin shape] and, in this case, each of them is not binary but can take multiple values depending on the features of our cables (our training data set). This makes the calculation even more complicated and more time- and energy-consuming, as the number of possible combinations grows exponentially with the number of dimensions. After a training period, in which different coordinate combinations are “manually” classified and compared to an expected result in order to stress certain paths of action (or neural connections) over others, a perceptron is left to its own devices to come up with relevant values for each dimension of new input data.

At the moment we don’t own any coaxial TV cables, but if we get our hands on one and want to know where to store it, we could use our elaborate choreography to assign coordinate values to it and sort it in a way that makes sense. The outcome should be simultaneously novel and aware of pre-existing context. However, the result might differ from common-sense decision making. Since I have previous knowledge of what a coaxial cable does, I would store it with other audio+video cables, such as HDMI. Or, in an act of categorical uncertainty, I would maybe get a new bag for miscellaneous vintage cables that would contain only one element. But if we follow our trained network of gestures, the cable might end up in the audio cable bag, since a coaxial cable’s pin looks more like an audio jack than an HDMI pin.

This mismatch in categorization is a symptom disclosing the methodological asymmetry between this topological bottom-up procedure and a deductive form of categorization. While they both attempt to make sense of the world, they might arrive at very different conclusions. Context can give an answer that seems appropriate, but it might not be enough to provide a “right” answer. More radically, not only can context not offer truth, it also does away entirely with the sort of epistemological frameworks that assume that the explanation of the world is not to be found in any of the parts comprising it.
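The coaxial-cable mismatch can be sketched in a few lines. This is only an illustration under loud assumptions: the vocabularies for length, color, and pin shape are invented, the encoding is a simple one-hot scheme (one slot per possible value), and the weights are hand-picked to stand in for what a training period might have produced, namely a classifier that has learned to look at pin shape and nothing else.

```python
# Hypothetical vocabularies for the three dimensions [length; color; pin shape].
LENGTHS = ["short", "long"]
COLORS = ["black", "gray"]
PIN_SHAPES = ["rectangular", "round"]

def one_hot(value, vocabulary):
    """Turn a named value into a list with a single 1 in its slot."""
    return [1 if value == option else 0 for option in vocabulary]

def feature_vector(length, color, pin_shape):
    """Concatenate the three encoded dimensions into one list of coordinates."""
    return (one_hot(length, LENGTHS)
            + one_hot(color, COLORS)
            + one_hot(pin_shape, PIN_SHAPES))

# Hand-picked stand-in for trained weights (an assumption, not a learned result).
# Slots: [short, long, black, gray, rectangular, round]
WEIGHTS = [0, 0, 0, 0, 1, -1]

def choose_bag(vector):
    """Positive score: tote bag (HDMI-like). Otherwise: IKEA bag (audio-like)."""
    score = sum(w * x for w, x in zip(WEIGHTS, vector))
    return "Rush Hour tote bag" if score > 0 else "IKEA bag"

long_gray_hdmi = feature_vector("long", "gray", "rectangular")
coax = feature_vector("long", "black", "round")

print(long_gray_hdmi, "->", choose_bag(long_gray_hdmi))
print(coax, "->", choose_bag(coax))
```

Because these stand-in weights know nothing about what a cable is for, the round-pinned coaxial cable lands in the IKEA bag with the audio cables, which is the surface-level answer described above rather than the one prior knowledge would give.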

Rosenblatt’s Mark 1 perceptron is actually made up of 8 simple perceptrons running in parallel, each of them deciding upon a feature by adapting its parameters through brute repetition. Artificial neural networks, such as the Mark 1 perceptron, ask repeatedly and simultaneously over multiple dimensions where an element stands in relation to others. They assign coordinates to each element and, in this way, steadily sketch a map organizing the analyzed elements (our cables) in a vector space. In a nutshell, and following Pasquinelli’s re-reading of Rosenblatt’s contributions, the perceptron represents a shift in computation: from a paradigm of logical, deductive, and exact calculation to one of contextual, spatial, or topological statistical approximation. What Rosenblatt achieved with the Mark 1 perceptron was to fabricate an experimental setup to test McCulloch and Pitts’ ideas about data classification by repeatedly establishing spatialized statistical relations.

Following Pasquinelli, I want to highlight that the experimental setup, that is, Rosenblatt’s hardware implementation, is not a simple accessory but a fundamental aspect of this shift in computation. Take, once again, the cleaning-up process of one of our streaming setups: if we were to operate like a neural network, we would only know what items are stored in each bag after performing an effort-heavy, time-consuming, and collaborative choreography. We would be blind to the fact that an HDMI cable can send audio and video signals, and thus goes in the Rush Hour tote bag, until we put its surface-level features in relation to those of other cables. This process would save us from having an inventory specifying the storage location of each cable (which we of course have). Consequently, it would also save us from being the visible masters guiding this emergent process of self-organization of our collaborative audience, but it would force us to go through a tediously repetitive classificatory dance of trial and error. Nonsensical and deriving its power from brute iteration, this process does achieve something an inventory does not. It reinterprets past knowledge and memory as a collection of collaborative actions. Deciding where a new cable will be kept is not a matter of following established rules written on tables and preserved somewhere in a hard drive or a clay tablet. Instead, it is always a new decision emerging from the constant repetition of distributed movements in the hopeful search of variation and novelty.

Metaphysically speaking, for the topological processes of artificial neural networks, the world was not created a long time ago by a transcendent being; it is born anew every single second by the movements and flows of all living and non-living things. This novel (and yet ancient) form of knowledge, unlike idealistic conceptions of the mind, is not something that happens in a vacuum, undisturbed by (mechanical or biological) hardware and context. It is rather a form of concrete thinking[2] that needs to be implemented in a context in order to carry out its tasks of approximation. As Pasquinelli highlights, while Rosenblatt’s design of the perceptron was allegedly inspired by neural activity, it does not pretend to be an isomorphic or one-to-one representation of brain activity[3]. Rather, to use Bernhard Rieder’s expression, the perceptron is an “engine of order” structuring cooperation. It is a deliberately incomplete model that organizes incomplete information. Neither a totalizing system nor a holistic picture, an artificial neural network is just a technique (or perhaps a tactic?) to create a circumstantial, provisional, and context-specific arrangement of things[4].

After this somewhat technical detour, I want to stress that the key takeaway for my purposes is that the perceptron shifts what computers are expected to do. Computers are from this point onwards processes open to their surroundings, “experiencing” the world and rearranging it bottom-up through methods of approximation and self-correction. Computers are then not finished artifacts, but are more akin to procedural experiments that trace and exaggerate certain relations. In other words, instead of being reducible to a program, software, or a theoretical Turing-complete machine, computers are always the process of the program’s implementation on hardware. A human-machine collaboration that not only rearranges cables but, by doing so, reorganizes the human relations around them. Rosenblatt metaphorically, yet very effectively, linked the activity of connecting a bunch of cables to biological brain processes and, in an even greater semantic leap, to human intelligence. Cold War engineers surely lacked some metaphoric humility.
However, what Pasquinelli shows throughout his book is that what our unfortunate cable-management “friends” were up to was not really shaping computers after brain activity, but after the social and political notions of self-organization and autonomy[5]. The perceptron and artificial neural networks are therefore sociomorphic rather than biomorphic[6]. At the intersection of counterculture, cyberculture, and military culture, the project of AI and automation is traversed by the idea of social autonomy, as well as all that cybernetic jargon of becoming, feedback loops, self-correction, autopoiesis, and so on[7]. While, on the one hand, autonomy might mean giving oneself one’s own rules[8], on the other, for cyberneticians in search of Cold War military solutions, autonomy meant the ability of a system to adapt to external input. As Pasquinelli notes, these are two sides of the same coin: the project of autonomous cybernetic systems is tied to that of social autonomy[9]. The aspiration to create a spontaneous order from chaos, that is, the capacity to self-organize, connects both. What’s at stake in the input-output game we find ourselves playing is the ability to display emergent properties. For unexpected orders to occur through the power of cooperation.

At THE VOID, we would never dare to claim, nor are we interested in claiming, that our cable-connecting activities miraculously produce artificial intelligence. We leave that to the (new) Cold War engineers. But we do subscribe to ideas of self-organization and aspire to practice them. At our humblest, we connect cables to do laid-back PowerPoint nights. At our most ambitious, we are working to become a node of a self-organized and autonomous network of tactical television (more on that in a later post). A network that, along with our partners from UKRAiNATV, Re:Frame TV, IM Budapest, CDI Warwick, 3022, pacesetters.eu, and Carbon, we are starting to call the Stream Art Studio Network.
Our institutions, allegiances, as well as geographical, political, and historical contexts differ from Rosenblatt’s. Yet, at a glance and after Pasquinelli’s historical-philosophical analysis of the perceptron, Rosenblatt’s “artistic practice” doesn’t seem that much different from ours. Surely, an AI would describe pictures of both a VOID setup and Rosenblatt’s perceptron as “people managing cables”. In any case, I want this series of blog posts to use this apparent similarity as a starting point to trace a connection between the logics of artificial neural networks and those of tactical video. How is Rosenblatt’s hardware implementation of an artificial mind similar to our events over-complicating streaming? Of course, the answer passes through practicing self-organization.

Tracing this link is part of a larger theoretical, historical and, in THE VOID’s case, practical effort to offer a different narrative of Artificial Intelligence and networked technologies in general. One that strays away from technological Big Other fantasies, techno-solutionism, and seamless automation. It is also a way to make the point that the questions guiding AI design and development are closely linked to (yet have nonetheless obscured) those of electronic media (video) production and distribution as a practice of political autonomy. The question of a democratic AI doesn’t necessarily pass through the epistemic reformation of algorithmic decision-making to better align with “democratic values” of truthfulness and correctness. I instead propose to reframe it as the much older question concerned with the democratic potential of nurturing a civic participatory culture of media autonomy and self-organization. A culture that we wish to nurture as part of an incipient network developing alternative shared practices, infrastructures, and theories for online video streaming.

🟢 🟢 🟢

[1] Pasquinelli, The Eye of the Master, 220. See chapter 9 “The Invention of the Perceptron”.

[2] See Papert and Turkle, “Epistemological Pluralism and the Revaluation of the Concrete.”

[3] Pasquinelli, The Eye of the Master, 196, 219.

[4] See Panagia, “On the Possibilities of a Political Theory of Algorithms.”

[5] Pasquinelli, The Eye of the Master, 133–60.

[6] Ibid., 153, 160.

[7] Evgeny Morozov’s new podcast “A Sense of Rebellion” provides a detailed and very entertaining picture of this late 60’s and early 70’s distinctly American intersection between counterculture, cyberculture, and always latent military funding in the context of the obscure Environmental Ecology Lab in Boston.

[8] An idea spawning from German idealist philosophy, closely linked to the notions of organism and reflexivity. Autonomy is a notion deeply engrained in a wide variety of political tendencies: from the nation-based geopolitical order to liberal anti-authoritarian activist movements, as well as a key notion for late-70’s autonomist Marxism.

[9] Pasquinelli, The Eye of the Master, 156–60.

References

Panagia, Davide. 2021. “On the Possibilities of a Political Theory of Algorithms.” Political Theory 49 (1): 109–33. https://doi.org/10.1177/0090591720959853.

Papert, Seymour, and Sherry Turkle. 1992. “Epistemological Pluralism and the Revaluation of the Concrete.” Journal of Mathematical Behavior 11 (1): 3–33.

Pasquinelli, Matteo. 2023. The Eye of the Master: A Social History of Artificial Intelligence. London; New York: Verso.

🟢 🟢 🟢

Read part three here.