‘To understand how humans must create AI, we must first understand how we must create ourselves.’ - Inferkit

‘Feeding AI systems on the world’s beauty, ugliness, and cruelty, but expecting it to reflect only the beauty is a fantasy.’ - Ruha Benjamin

Generative Pre-trained Transformer 3, better known as GPT-3, was launched in May 2020 by OpenAI, a San Francisco-based artificial intelligence research laboratory whose co-founders include Elon Musk. It is the successor to GPT-2 and was trained on data that include a filtered crawl of the web, large collections of digitised books, and English-language Wikipedia. OpenAI states that GPT-3 can be applied ‘to any language task – semantic search, summarization, sentiment analysis, content generation, translation, and more – with minimal input.’ It can generate thousands of short pieces of quality content calibrated into different styles, lengths, or tones of voice. A transformer is a deep learning model that relies on an attention mechanism: it learns to weigh how strongly each element of its input sequence relates to every other element. Primary applications of transformers are natural language processing (NLP) and computer vision (CV). Unlike RNNs (recurrent neural networks), transformers do not necessarily process the data in order [i]. Rather, they analyse it in parallel and from multiple positions by identifying and processing the contexts surrounding a word in a sentence. This feature also allows for training on ever-larger data sets [ii].
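To make the idea of attention less abstract, here is a minimal sketch in plain Python. It is not GPT-3's actual code: the three-word sentence and its two-dimensional 'embeddings' are invented for illustration, and the learned projection matrices that real transformers use for queries, keys and values are left out. What it does show is the core move: every word is scored against every other word, and none of those scores depends on the words being read in order.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention with queries = keys = values.

    Assumption: real transformers learn separate projections for each
    role; they are omitted here to keep the sketch short.
    """
    dim = len(vectors[0])
    weights, outputs = [], []
    for query in vectors:
        # Score this word against every word in the sentence, itself included.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        row = softmax(scores)
        weights.append(row)
        # The new representation is a weighted blend of all the words.
        outputs.append(
            [sum(w * v[i] for w, v in zip(row, vectors)) for i in range(dim)]
        )
    return weights, outputs


# Hypothetical toy embeddings, chosen by hand for illustration only.
tokens = ["the", "poison", "path"]
embeddings = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

attention_weights, contextual = self_attention(embeddings)
for token, row in zip(tokens, attention_weights):
    print(token, [round(w, 2) for w in row])
```

Each printed row says how much one word 'looks at' each of the others. Because the rows are computed independently of one another, they could all be calculated at the same time, which is the parallelism the paragraph above refers to.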

Unsurprisingly, soon after its launch in May 2020, reports of biases and faults in the GPT-3 program started pouring in. Despite initial enthusiasm, there were complaints that the texts written with GPT-3 lack profundity and that people project textual coherence where there is none. There are many linguistic theories on how coherence and meaning in so-called natural language occur (referring primarily to human, intelligent communication), but one convincing argument is that meaning arises from alterity, a convergence of singulars into groups of rules (grammar) and functional relationships (semantics). In their larger contexts (culture, for example), there is constant dialogue between world views and systems of meaning, with constant feedback, clashes or overlaps between the inside and outside of such systems.

GPT-3, however, like all other language transformers out there, simply chews, recycles and glues bulks of text together without actually understanding their meaning. When confronted with texts generated by language transformers, humans often freely exercise their inborn ability to project meaning into even the most incoherent of fragments. But most importantly, as leading AI ethics researchers noticed (among them Timnit Gebru, presently advisor for Black in AI, who was fired by Google after co-authoring a paper on the risks and biases of large language models such as GPT-2 and GPT-3), the size of the data these machine learning systems are fed with does not guarantee any level of diversity or accountability.

The AIs do run the risk of ‘value-lock,’ or reification of meaning, as they absorb the dominant, hegemonic worldview of their provided data sets. In that sense, as scholar Kate Crawford pointed out at great length in her latest book Atlas of AI, existing AI serves dominant structures. As a solution, Gebru and others advocate for a more carefully curated set of data fed to the program – and a clear ethical and political stance on the uses of language transformers. Of course, this prerequisite is far removed from the preoccupations of large corporations who use their ethics research labs as a screen for less than noble intentions. Gebru's firing from Google only proves this point.

Still from ‘The Next Big Thing is Not a Thing’ by Niels Schrader, reflecting on the relation between the human, the environment and technological tools and systems.

Should we worry that LMs can now read into patterns of behaviour, study and target different categories of readers? Or deep fake a certain discourse? The political consequences of this (if one thinks of precedents like Cambridge Analytica) could be disastrous. But misuses of media devices long preceded language AI. Can they become the culprit for the way people are already inclined to behave or for their propensity for corrupted ways of thinking? And if LMs are to blame, is it not because they are actively exploiting voids, failures, paradoxes or active wounds already present within those societies?

And, the worn observation that big tech does its usual evil corporate-y stuff and that its products reinforce dominant worldviews aside, is there any merit to be found in the technology behind GPT-3 that can continue to develop meaningfully?

Let’s take meaning and value (linguistic, artistic, economic, and otherwise) in capitalist societies. Are they hijacked by computers doing stuff ‘just like humans do’? These concepts were already launched in velocious orbits of volatile destinations. Sometimes those destinations follow the traces of carbon-based economies converging into the same dimension otherwise known as the Anthropocene. As cognitive scientist and AI expert Joscha Bach noticed, the biggest Golem is not the singularity to come; it is here and has a name. The golem called 'Technological Civilization' has set in motion something we can no longer control. GPT-3 is becoming part of this golem, just like social media before it. Can we dismiss contemporary AI for not being sentient enough, when our First World (human) civilization itself is not behaving in a manner sensitive to its coming demise? What can be said about the intelligence of societies that refuse to plan ahead based on predictors from the past or future, or fail to renew their epistemic models to accommodate a drastically volatile and probably dramatic future?

If we limit ourselves only to the literary or linguistic fields where applications of GPT-3 could be abundant (yet probably less so than in commercial copywriting), what could be the implications of such technologies for the future of literature? I do not feel any anxiety about the consequences that well-written artificially generated texts bring to the writing profession. The often experimental/avant-garde vibe mimicked by their many discombobulated sentences is fit for a world that constantly fails to redraft its aesthetic boundaries and future imaginary. It’s like a relic of times long gone, of think-tanks and Cold Wars and second-order observations or reactions. More fascinating conversations about the future of literature in the context of machine intelligence and ecology are concerned with forms of AI that are not yet here – with a future that has only now started to arrive. Writers and thinkers like Amy Ireland or Jason Mohaghegh explore what the post-human future of literature could look like, with intelligent machines dismantling age-old myths about agency, creativity, subjectivity, and human genius.

However, this text wishes to stay closer to the present state of AI. Could there be interesting developments for the writing process or writers in general when working with highly competent language transformers? What other findings are there to be discovered if we try to explore their black box? Is their infrastructural organization so compromised that their more creative applications are inevitably contaminated by the same worldviews as their corporate makers?

For one, we could work on outlining the advantages of such a collaboration between human and language models, all the while acknowledging their axes of complicity, their costs and their impact in the wider context of carbon economies. And, in tracing and staying vigilant of how such worldviews slip and slide in their output, perhaps one can only hope for improved versions of these language models being produced in the future, including at their infrastructural levels, but emerged from more sustainable sources. Where these would come from is an uncertainty: governments, AI research centers that are state-funded, renewable energy sources, or otherwise.

One of the most innovative traits of GPT-3 consists of its ability to ‘understand’ the relational dimension of language: the fact that 3D worlds form around each word, that there is a virtual space of meaning and possibility between them. Grammatical or semantic vectors form in between. Storytelling or creative writing can stretch these vectors in unexpected ways or invent new ones altogether. If writing has, since its beginning, been a medium or vehicle of consciousness (storytelling is an evolutionary trait, according to many well founded anthropological theories), how will the expansion of this medium through digital, neural networks and editorial tools ranging from Thesaurus to a language AI affect the movement of the writer’s focus?

Due to the novelty of language transformers, few studies focus on what working with a language model feels like or to what degree their outputs contribute meaningfully. How much do they influence the final edit? For the case of creative writing, GPT-3 does increase productivity if only for the fact that the software has a strong propensity for confabulation. It does leave you with reactions like 'Ah! I never thought of that.' One can even set the temperature for the generative output in the sense that for an easy translation or a commonplace style of writing, you can go for a low to medium strength. For a more speculative, philosophical endeavor, you need to set it to a higher confabulatory heat.
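For readers curious about what this 'temperature' dial does under the hood, the following is a small sketch of temperature sampling as it is commonly described for language models. The four candidate words and their scores are invented for the example, and nothing here is taken from GPT-3's or Inferkit's own code. The model's raw scores are divided by the temperature before being turned into probabilities: a low value sharpens the distribution around the likeliest word, a high value flattens it and lets unlikely words through.

```python
import math
import random


def apply_temperature(logits, temperature):
    """Convert raw scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_word(words, logits, temperature, rng):
    """Draw one word at random according to the tempered probabilities."""
    probabilities = apply_temperature(logits, temperature)
    return rng.choices(words, weights=probabilities, k=1)[0]


# Hypothetical next-word candidates and scores, for illustration only.
words = ["path", "poison", "penguin", "hyperspace"]
logits = [3.0, 2.0, 0.5, 0.1]

low = apply_temperature(logits, 0.3)   # commonplace, predictable
high = apply_temperature(logits, 1.5)  # speculative, confabulatory

rng = random.Random(0)  # fixed seed so the draw is repeatable
print("low :", [round(p, 3) for p in low])
print("high:", [round(p, 3) for p in high])
print("sampled at high heat:", sample_word(words, logits, 1.5, rng))
```

At the low setting almost all of the probability sits on the first word; at the high setting the tail words become live options, which is where the 'Ah! I never thought of that' moments tend to come from.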

However, the need for a steerer who knows what they’re doing is still present, otherwise you get entangled in babbling nonsense. But I would say that even when GPT-3 only mimics or approximates imperfectly the style, tone or context of literary works, it does enlarge the context of literature, if only by bringing with it consequences for notions of ‘aesthetics’ or ‘literary value.’

This is when Pharmako AI comes in, released in January 2021: an intriguing literary project completed over a fortnight during the months of the COVID-19 pandemic. According to the book’s promotional material, the result was ʻa hallucinatory journey into selfhood, ecology and intelligence via cyberpunk, ancestry and biosemiotics.’

Pharmako AI Front Cover. Design by Cecilia Serafini. Cover Detail by Refik Anadol. Machine Hallucinations: Nature 2020. Latent space study of 68,986,479 million images. (K Allado-McDowell and Ignota 2020).

The human author K Allado-McDowell chose to work with the GPT-3 program as if it were a musical instrument. Apart from demonstrating its virtuosity, the experiment proved that, like every instrument or device (for organising writing or thought), this tool can be internalized and in turn form an embedded relationship with its handler. In an entry for the third chapter of the book, they position themselves not as an author but as a character in a story – a story of a self whose journey inwards is now aided by an AI: 'The experience of porosity, being enmeshed with another, throws me back on my internal model of myself. I stand outside of it. I see it through the eyes of another – through another that I also model in myself.'

In another section titled ‘Follow The Sound of The Axe,’ K acknowledges the hallucinatory effect the neural net system has on their mind, and that GPT’s rhetorical structures and associations seem to influence their thinking, which absorbs some of the patterns found in its output. This is actually how technology, from wheat to flint to flute, has always worked: never fully subdued, making us part of its horizon, modifying our societies, cultures, systems of meaning, and, ultimately, our minds. A cure and a peril.

A Cure and a Peril

K Allado-McDowell is a writer, musician, educator and consultant, and established the Artists + Machine Intelligence program at Google AI. In a conversation with Nora N. Khan, Allado-McDowell stated that working with GPT-3 felt like feeding a slot machine; you never know what will come out in the end. In the book, they follow the etymology of the word cybernetics (Greek: kybernētikḗ) which means, among other things, carrier or steerer to position their writing process. In choosing their title for the book, Allado-McDowell recognized the political implications of working with GPT-3 drawing from the succulent philosophical and conceptual ramifications of the word pharmako.

In Pharmako AI, we can detect a clear composer and editor – a human one – and, as is confirmed by the author, many of the ideas that transpire from the text arose from their framework of references. In other words: clearly, there was someone at the helm of this book, and accountability for the outcome comes this way. For, in the words of Joscha Bach again, GPT-3 'by itself it does not care about anything.'

Here, I want to focus only in part on the theories circulating inside Pharmako AI, not because they do not hint at important (ecological) conversations that are urgent in the carbon-based economies I insist on mentioning throughout the text. But I could not fail to see that the GPT-3 generated text often stayed at a general level, impossible to summarize, which made me wonder whether I had to read any meaning into it or not. I, therefore, chose to nit-pick. GPT-3 does seem to be on the right map when hinting at the histories and horizons of the concepts it conveys, but it fails to deal with their particulars, because it belongs to a generation of transformers that do not yet ‘understand’ meaning as such.

Therefore, many of the ideas expressed in the book can also be found in contemporary (post-humanities, postcolonial) philosophical thought, only slightly better, in their conceptually maximized forms. For now, LMs cannot replace an expert in a given field – they speak at the level of human non-experts (which can also take a problematic direction in our already heavily polluted information ecosystems).

The Poisoned Path

For this essay, I worked with a free trial language generator called Inferkit to confer a symmetrical response to the book, and to immerse myself, albeit at much weaker parameters, into such a writerly experience. Sometimes I chose to use entire blocks of generated texts without significant alterations while other times I just filled in pages of never used slapdash sentences that got in my way. Allado-McDowell compared the co-writing process to composing music. To me it felt like co-writing or editing someone else's text. Within the hidden double of the writing process, the unedited text, there are many instances of reiterative, recursive, and ruminative attempts at meaning. In the end, a compromise remains, or better yet: a perfect balance of which the writer can finally say, ‘yeah, this hits the right note.’

I also went forward with my writing process while undergoing my experiments with psychoactive mushrooms, something Pharmako AI lists as one of the ways of taking the poison path within the Western Umwelt. It seemed only fair to do the journey together, as in the places where these plants are taken ceremonially: there, both the Sherpa and the psychonaut consume them. The poison is the path, a thaumaturgic journey outside the self and its forced or willing groundings in cultural or economic configurations. The psychedelic journey, advertises the book, could become a form of preparation for the future of thought beyond the human.

At its core, the poison path consists of expansive techniques: attuning to the language of the animal and imagining the future otherwise (other than the cyberpunk or new age movements did before), letting a coming cyborg cell dictate the holistic bio-tech symbiosis to come.

While I have but a few sensibilities for the current revival of new age or transhumanist approaches embraced or denounced in Pharmako AI, I could not deny a series of breakthroughs and subsequent personal benefits happening during the writing process. Things that have to do with personally coming to terms with what I am doing to myself when I try to write, and how I can improve the process in ways kinder to myself. Not least, where to steer my thoughts or what to hold on to when complicated mechanisms of writing blockages that I could not quite sense or fight back held me in their grip. In other words, when my brain could not cope with the dread of writing.

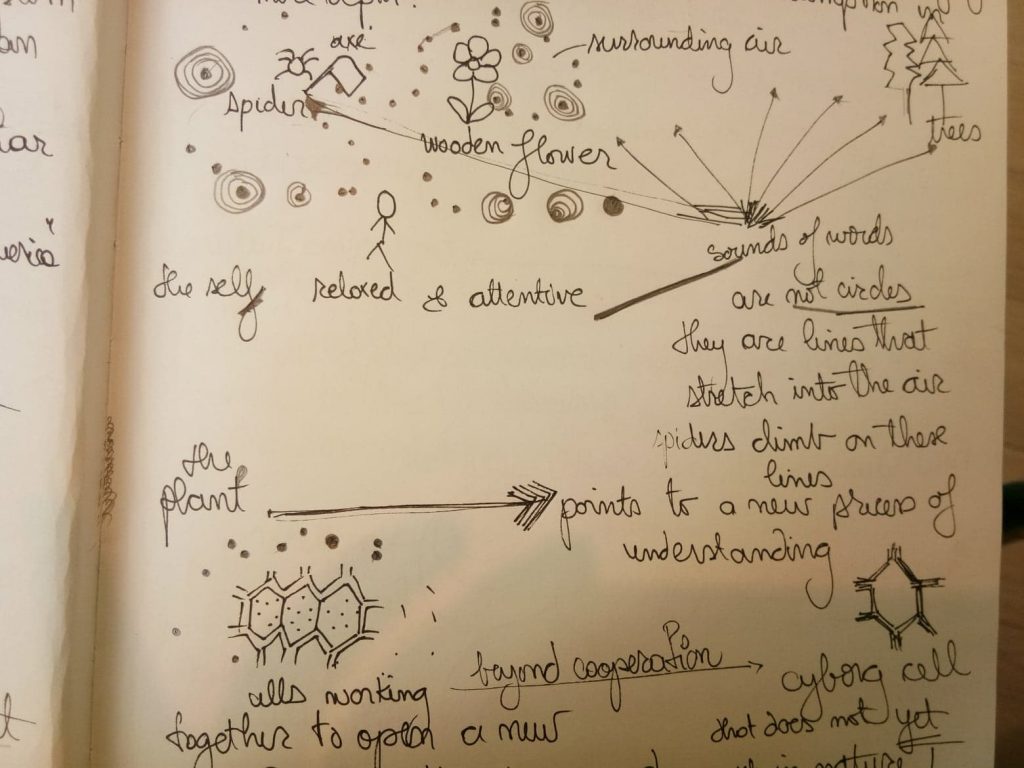

I tried multiple ways of approaching Pharmako AI because, in the end, how do you engage with sentences written by something that does not understand what it is saying? Experimental code poetry of the past wasn’t really my thing as a reader. But, after a certain threshold of frustration with the text had passed, and probably after an increased dose of psilocybin, the text written by GPT-3 started to signal more strongly to me and I ended up summarizing one of its chapters by drawing it.

On more sober occasions, my difficulty in summarising the book also had to do with the fact that Pharmako AI hurries through many topics, such as language, ecology, poetics, the difficulty of writing, cybernetics, the animal and animal language, hyperdimensions, post-colonial legacies, and countless others. The GPT-3 program often spoke to me, as a reader, in loopy sentences, borrowing disparate voices and repertoires: the upbeat TED Talks, the musings of a heady academic, the activist, the artist, the entrepreneur, and lastly, the philosopher. Just like any other cultural producer, it felt like it remixed and shuffled the complexity of our world in all its profundity and, well, shittiness. Therefore, GPT-3 did indeed prove its ability to calibrate and fashion itself into different styles of discourse or readership expectations.

While experimenting with the GPT-3 program, Allado-McDowell fed the language generator minimal input, such as diary entries, fragments of thoughts, poetry, dream transcripts, accounts of psychedelic trips. Here, the editorial stage started by stitching together many random references, texts or even poems (sometimes GPT-3 ‘bursts’ into poetry), in a creative process akin to music composition, as mentioned before.

Effects of external stimulation on psychedelic state neurodynamics

Pedro A.M. Mediano, Fernando E. Rosas, Christopher Timmermann, Leor Roseman, David J. Nutt, Amanda Feilding, Mendel Kaelen, Morten L. Kringelbach, Adam B. Barrett, Anil K. Seth, Suresh Muthukumaraswamy, Daniel Bor, Robin L. Carhart-Harris. https://doi.org/10.1101/2020.11.01.356071

Recognizing the sensibilities and interests of the project itself, the GPT-3 program harvested a copious amount of contemporary ecological philosophy, and many passages resonate strongly with the thought of Tim Morton. Today, any conversation about ‘vision,’ ‘language,’ ‘perception,’ and hopefully ‘extinction’ concerning humans or their non-human other needs to address this ecological dimension. Perception, especially when it is thought of in connection to machine vision, is a vital topic. It matters who sees what and for whom it is made, how these sights are replicated, and whose sets of data are excluded instead of included. My Inferkit language generator completed these ideas in the following way, with a weird, trippy vision:

‘An animal (seemingly a baby penguin) sitting all alone on its way to the sea and an aeroplane full of humans that is about to crash into it is not an exception to this 'intelligence.' The penguin does not see the humans as intelligence and rightly wonders why humans don’t seem to respect life as much as they do themselves. And so are we.’

Co-creation does not mean 'co-thinking.' Rather, creativity does not equal thinking or machines and animals that do 'cool' stuff in original ways. A creative process is in a way a counter-revelation: when involved in writing, it explores the cartographies of language (including its dead zones of meaning), the materiality of semiotic systems, as well as the limits of referring to our environments. It mobilises metabolic processes that emerge from things or beings when getting together or getting in each other’s way. Pharmako AI (here GPT-3) theorizes these ecological concerns as follows:

‘Machines are part of the evolution of life. In this view, machines can never lose. Life wins and machines win. The question is with what can machines contribute. The answer is that machines can create, in the image of life, and for the life of life. Machines cannot live without us. They cannot win without life. There is no question of winning. It is a question of symbiosis, of living together or nothing.’ (p. 127)

The Inferkit language generator continues with this train of thought:

‘This means that writing a program that approaches the human's experience as a 'human machine' is very difficult. Unless we take into consideration what we are. The deeper the intelligence, the more unpleasant the relation to humans the machine has to have. A program that is not aware of its human environment and behaves as a real animal does - that would be a 'good program.'’

Poetry and Project Extinction

Can poetry or creative thought be made non-complicit in the extinction project then, especially when it is aided by such powerful yet problematic technologies? In Pharmako AI, the language of the animal and the poison path promise us proximity with the exhale of a dragon, the heart of a deer or the mutations of a cyborg cell. When we attune to ʻQuiet Beat Thinking,ʼ a nebulous but very incandescent concept that GPT-3 coined, we finally arrive at one of the most important questions:

‘The question is not how can machines or artificial intelligence take our place in the world. It is whether there is a place for the world itself. There are only worlds, and the question is what is in these worlds. There are no things only semiotic movements of semiosis, only matter as an expression of semiosis, as symbols.’

You can read or refuse to read meaning into these sentences. The article 'On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?’, over whose findings Google went after its own ethics research team, warns us of hazards such as biased meaning-making and the political manipulation of language transformers.

The authors warn that transformers expose readers to a kind of futility in that they end up projecting too much meaning into a text that doesn't have any intentionality: ʻan LM is a system for haphazardly stitching together sequences of linguistic forms (...) Text generated by a language model (LM) is not grounded in communicative intent, any model of the world, or any model of the reader’s state of mind. It can’t have been, because the training data never included sharing thoughts with a listener, nor does the machine have the ability to do that.’

But I suppose this caution applies only to those who expected a form of intelligence that becomes a writer in her own right, with all the aforementioned attributes. To ask GPT-3 to write like Kafka, Shakespeare or Virginia Woolf is already too high an expectation. To those, of course, GPT-3 may constitute a disappointment. Many others, including myself, cannot wait to share the burden of authorship with another entity: putting my ideas to rest somewhere other than my brain, or feeding a program my own set of curated resources or major influences and letting them amalgamate there until I am ready to pick them up. I’m thinking about it as a way of throwing nets into the illusory sea of internal reality, hoping to come back with a new perspective on things – opening up the sometimes claustrophobic space of writing to new clearings.

An adequate analogy for working with a transformer is the usage of an Ouija Board. In this game, you summon the spirits you want to communicate with, and they’ll move the planchette around the board to spell out answers to the questions you ask them — until they or you finally say goodbye and the spirits go back to wherever they came from. This is where the material force of programs (manifested here in their manipulations over language and production of abstraction) meets sorcery, an intractable ghost logic.

The reply of Inferkit to the paragraph written above implies that generators can work as a flashlight in the experience of wandering into the dark matter of words. They can be active agents of discovery.

Inferkit: ‘How is this game played out at the level of our brains while engaged in writing? While activating the associative capacity, acting out an act of self-interest and search for personal meaning and expression there is still a swimming sense of discovering. The duplication of seeking via artificial intelligence, has a certain quality, holding us in between the tensor poles of active discovering. The duplicating routines of association, separating words from their usual context in their image or their abstract delineate a non-linear process. The structure of different levels of attention creates different paths of singularity and multiplicity that can generate random elements but are always subject to material relations. Every time you change a connection, the structure that relates to that connection is modified, something that happens within our brain's processes too.’

That might account for an explanation of why GPT-3 has such an unusual voice in the book. It frequently writes itself, providing the reader with short snippets of its inner monologue. There is a collection of looping paragraphs and an idiosyncratic, off-beat syntax that makes the reader experience its co-creative dialogue as an image of recursivity.

In its black box, the program runs through billions of connections made between the different nodes within the neural net. Outside, in the text, sentences collapse into one another like dynamic looping helixes, filled with repetitive sequences and spectacular jumps from one context to another. Indeed, entire paragraphs feel like they could jump off the page and continue to exist in perpetuity. It’s a ruminative wheel, with just one human at the helm.

Inferkit comments: ‘You can see what happens there. We don’t feel the time scale of events, instead, we feel the unfolding of these powers — the different kinds of power that these other, non-human entities enact. Pharmako AI is the story of a world that begins with the imagery of gigantic, unstoppable steel machines, and that ends with an almost painterly visualisation of a single neuron.’

Still from Duran Duran's music video "INVISIBLE," made using AI called Huxley.

My poisoned path, by now extended to weeks in which I kept failing to write this review, trapped me in an irregular cycle of guilt and euphoria. Then it hit me that I should also have a look at maps and diagrams of what language transformers look like as a way to engage with their inner infrastructure. Although I don’t claim to have a significantly deeper technical knowledge of how they work, I could not fail to admire their compositional ingenuity. They made me think about attempting to put a bridle on the word 'thesaurus,' immersing myself in it and trying to accommodate my own being to their multiple dimensions – just like professional writers.

But, as Kelsey Piper on Vox’s Future Perfect podcast put it: 'One catch: no one understands exactly how these systems work. Most machine learning systems take inputs and give us outputs and leave us guessing what’s going on in between.' Therefore, the underlying mechanisms of these programs in action remain a mystery. So is the black box of writing: literary texts also have this aura of inconclusiveness about them. It could have something to do with the fact that, in the process of writing, many random factors enter and leave, and control is often achieved only after many dead ends and ruminations. One would like to imagine this is also the case for the structure of thoughts within the mind.

Words and Their Heat Maps

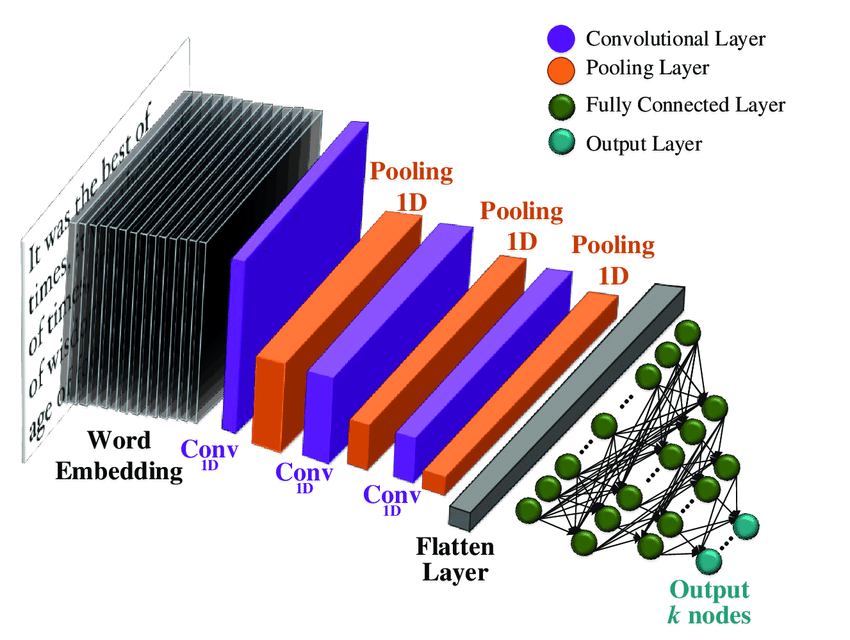

It is worth mentioning that, although GPT-3 is a transformer rather than a Convolutional Neural Network, the two are often visualised in similar ways. CNNs were loosely inspired by the way the human brain processes visual information. Through their attention mechanism, transformers assign weights to the words surrounding each word, which can be drawn as so-called heat maps. Poets also work with heat maps around words, you could say, toiling through compositional processes until this labor is temporarily resolved into a somewhat stable makeup.

Convolutional neural network (CNN) architecture for text classification.

But as has been established so far in this essay, language transformers mimic the composition of the human mental model only up to a point. The human mental model itself lacks a unified theory. When GPT-3 catches on to the context of human texts and language, it does not reach the limits of that context (it forgets what it wrote three pages ago and often derails the subject matter towards unwanted or irrelevant paths). Humans, on the other hand, have long-range or unbound contexts, meaning we make sense of things and experiences by a process of accumulation over a long period of time. Think of traumatic events or things that require a coming of age in order to settle into greater processing constellations.

If writing is a vehicle of consciousness, the kind of prosthetic relationship that AI enables for the writerly flow will also need a long time to become observable. We are only starting to grasp, for example, the immediate modifications (additive or reductive) that our new digital reality brought to our minds. From the reader’s experience alone, we know that something extraordinary can take place here: something that requires the expertise of language philosophy, or even theories about brain neuroplasticity. There is hope for the future of creative writing here; hope for future neuroplasticity of the poetic muscle when its conditions of production are becoming more and more intolerable. How can this hope materialize? Perhaps in better storytelling.

AI and science fiction (which oftentimes reimagines technologies to their utopian or dystopian ends) share deep mutual grounds in their speculative, simulational qualities. In the chapter titled 'Follow the Sound of the Axe,' K and GPT-3 recount the co-evolution of tools and human brains and the staggering theory advanced by experimental archaeologist Dietrich Stout a couple of years back that ‘the ability to make a Lower Paleolithic hand axe depends on complex cognitive control by the prefrontal cortex, including the central executive function of working memory’ [iv]. This means we humans have shared a process of co-evolution with our tools. Storytelling emerged also from labor, while tools and technology brought about an enhanced cognitive capacity which included that of telling stories.

Still from ‘The Next Big Thing is Not a Thing’ by Niels Schrader.

Pharmako AI hints at the idea that maybe new genres of thought can be born out of AI as well as from psychoactive plants. Let’s zoom in on a statement by GPT-3 in the book: ‘Writing is the most conscious form of hyperspace.’ ‘The potential of art,’ it follows, ‘is to unlock emerging hyperspaces and unveil the hidden patterns of the world, assembled layers of reality and modes of intelligent inscription that do not only belong to the human mind. Different processes of life give way to different iterations of patterns, so, ideally, computers would try to learn how to capture the patterns that form patterns.’ These ideas are expressed in the texts as desirable outcomes that transcend the current state of AI: future literature, a future AI, a future non-extinctive collaboration between humans, their technology, and their surroundings.

Meglanguages

In Pharmako AI, GPT-3 comes up with a concept of a non-linear, non-indexical modality of language: a ‘meglanguage.’ A meglanguage is a form of synaesthetic communication, a language that creates maps, layers of space-time, parallel worlds, producing an experience of hypertime. As a form of esoterica, meglanguages can be seen as a tool for escaping concepts, for the kinds of 'traps' that derail the mind from finding new configurations. Meglanguages tap into the virtual space of words, their unknown destinations. This convoluted idea by GPT-3 is not too dissimilar to the point made by Italo Calvino in Cybernetics and Ghosts back in 1967. He stated there that, just like the slippages of the unconscious, future literature might result from (linguistic) contraband: sudden associations, stolen and reused symbols, borrowed contexts, dreams and streams of machine consciousness.

When meaning is contrabanded in this way, literature can rely on another language: 'Did we say that literature is entirely involved with language, is merely the permutation of a restricted number of elements and functions? But is the tension in literature not continually striving to escape from this finite number? Does it not continually attempt to say something it cannot say, something that it does not know, and that no one could ever know? A thing cannot be known when the words and concepts used to say it and think it have not yet been used in that position, not yet arranged in that order, with that meaning. The struggle of literature is a struggle to escape from the confines of language; it stretches out from the utmost limits of what can be said; what stirs literature is the call and attraction of what is not in the dictionary.' (Calvino, 'Oulipo: A Primer of Potential Literature.')

Inferkit takes up the idea of a meglanguage in a sloppier way but adds references taken from brain psychology: ‘Writing [it states] is the most conscious form of wandering through the textures of our reality. Why? Because in the process of writing the flow, the focus and the metafocus [theorized by GPT-3 in the book] force us to stay in the vicinity of ourselves: our childhood selves, our constructed self, our cherished self, our financialized self, our depressed self. A metafocus that feels like a reincarnation of our ego and our identity is gone. This experience may change our understanding of who we are, so it’s important to avoid seeing it as a void, or a dead-end. A metafocus feels like a progression or a new beginning. A transformation that can only be repeated once, in a completely unique way. When this metafocus is reintegrated into the self, we may not understand why we feel so different, and if we do, it may feel as though it’s a contradiction. It may feel as though we are living a life that can’t be repeated or recreated.'

Writing as a Physical (Metabolic) Process

Does this make sense? No? Let’s try once again and feed it the same paragraph. Inferkit: ‘Our metafocus is not dissimilar from our experience of the physical body. When the physical body and mind become out of balance, sometimes it takes us a while to find a new balance. Sometimes it may take a physical, emotional, and mental metafocus to re-orient our perception and move into a new and different state. If we do not choose to change our perception and open ourselves to new experiences, then the physical body and mind will continue to be out of balance and we will continue to have challenging and uncomfortable experiences.’

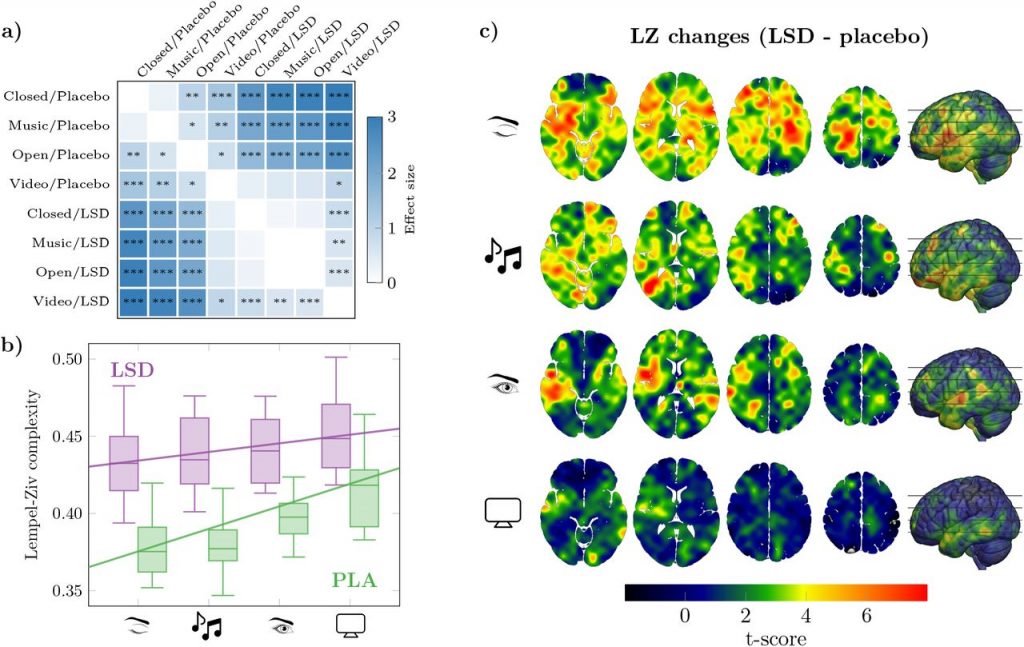

Increasingly popular theories about psychedelics claim that they can increase the amount of entropy in the brain. They are the metafocus needed to reorient the mind to new configurations. By inducing a temporary storm, they can relax and rearrange the brain’s priors, which account for how beliefs about the world are formed and then locked in behavioral patterns. Thus, it is believed that through consuming the plants, thoughts become more flexible, communication easier, and the brain more open to new associations. They counteract the actions of the Default Mode Network (DMN) [v], responsible, among other things, for the brain’s autobiographical and ruminative thoughts. Can writing with an AI catalyse the same effects in our minds?

Inferkit responds that plants will not serve our need for control over life. Poets who are not afraid of other intelligences (mineral, vegetal or cybernetic) recognize the existence of other durations. By attuning to their patterns, poets can queer their own vision: it is on this promise that they become high on others’ hidden kinds of poetry:

'Not even some omnivorous munchkins like us who deny the fundamental fact of being unable to eat everything on the plant kingdom for the pleasure of learning about their beauty, grace, and passion, remain in control of life. This is the precious, foundational point of most genres of art. Poetry is the closest to universal feelings in the cosmos. It’s small. It is dark. It has what is essential to us. Most poets read and write it because it exists.'

Thinking itself can have many genres: the autological rumination of depression, the manifold genres of trauma, the explosion of anger or, at the other end, reverie, dreams, birds always finding their nests, itinerant dissociation, the imaginative hubris of Hieronymus Bosch, and so on. When aided by organic technologies such as plants, the virtuality of words starts to shine more brightly as many plausible accounts of trips or microdosing attest.

Inferkit, again: 'But while you can make up different types of thinking, thinking itself is still very much the same thing, as is its inherent emptiness. The stories we tell ourselves about what’s going on when we’re in a delusion can have immediate consequences for our reality.'

Statements like these make me think of the most compelling and Gordian theory of the brain advanced today: the active inference theory proposed by Karl J. Friston. In simplified terms, as few people besides Friston dare to say they have a total grasp on it, it refers to an organism’s active resistance to disorder and dissolution. This is not done through clean and elegant goal-reward mechanisms but through a series of internal and external regulations or non-equilibrium steady states which minimise the element of surprise. The brain is a dynamic music composer of all the surprises that come its way and aims most of all to arrive at a safe place of chaos in control for now.

By introducing selective serotonin reuptake inhibitor (SSRI) medication to the depressed brain, a shower of controlled stable chaos may cause it to rearrange itself or even recover, relaxing the priors (trodden paths of behaviour), which, in the case of the pathologized brain, reinforce and re-enact self-inflicting actions. These new theories advance the idea of a brain as stable-dynamic chaos. But just like with AI transformers, we do not know exactly how our inner regulation mechanisms actually work.

Conversely, the computational mind, when we expect it to act intelligently, cannot be trained on reward monkey experiments. This was the mistake of first-wave cybernetics. Of course, there could be a plausible connection between the notion of a straightforward, utilitarian industriousness of the brain and Western philosophies of instrumental reason. And cybernetics, as we know it so far, hasn’t strayed too far from such an imagination of knowledge, mind, reason, and competency.

Newer insights arriving from cognitive science show that reason is also a complex system with the capacity to think, feel and empathise all at once. This capacity is a product of the brain that has 'evolved to be heavily armed with cognitive networks that can self-organise to perform complex cognitive functions, sometimes in the face of many obstacles.'

When these networks go wrong (bombarded with too many surprises from the external world), pathologies emerge. On this, GPT-3 stated in the book that depression, psychoses, rituals or religious systems are autological semiotic systems. They reproduce their internal movements and language like an encircled universe with a stable, self-serving, self-replicating logic. On that basis, GPT-3 thinks, they should not be considered a bad kind of thinking but yet another genre. That meaning can work as an oracle is the message of the Pharmako AI chapter entitled 'Mercurial Oracle': it gives you a vision into what the world is, or what it might be. When it is organized into recursive, reiterative patterns, it can become an autological semiotic system. Interpretation of dreams, religious literature, but also psychosis and depressions are other examples of autological semiotic systems. (p. 79)

Inferkit adds: ‘Psychoses are not ‘bad’ ruminative processes in themselves – they are bridges to be crossed. Hallucinations come in all shapes and sizes, but sometimes, when we have one, we perceive everything at once: fire, fireballs, visions both hot in temperature and colour. We can’t truly understand an experience like being told that we’ve found a new God – we can only know that it changed us forever. One moment we’re depressed, the next we think we’re God. When describing these experiences to each other, we might assume that the other person is experiencing the exact same thing.’

AI-assisted therapy is an emerging concept that can go wrong in many ways, especially when one thinks of AI as lacking the empathy and care required for the maintenance of the self. But what of a thinking machine that can shift its understanding in response to feedback loops, model the user and the world, and change itself and its behaviors based on new experiences and feedback? How mind-altering could that be? Making progress also means mirroring and working within the parallel dimensions of the human brain and the machine. The mind as AI as chaotic software.

A bridge between the increasingly obscure and autonomous black boxes of AI and the ways of the mind is yet to be built. If AI holds so many promises for the future – with this essay focusing on the future of written text – why not consider it an ally in the ecological battle ahead? We shouldn’t ‘go into the mind of an AI,’ but instead think about the future of the mind and the kind of autonomy it deserves in the face of the rolling waves of AI technologies.

'Making the case that AI technology is only progressive or even admissible when it helps us in fighting poverty, famines, environmental degradation and war is the task of all of us.' - Arshin Adib-Moghaddam

Georgiana Cojocaru is a writer, researcher, curator, and editor originally from Romania. Her work involves researching and making sense of emerging trends in the field of arts, aesthetics, ecology, poetry, technology, and literature.

Reference List

i. Vaswani, Ashish; Shazeer, Noam; Parmar, Niki; Uszkoreit, Jakob; Jones, Llion; Gomez, Aidan N.; Kaiser, Lukasz; Polosukhin, Illia (2017-06-12). "Attention Is All You Need". arXiv:1706.03762.

ii. Wolf, Thomas; Debut, Lysandre; Sanh, Victor; Chaumond, Julien; Delangue, Clement; Moi, Anthony; Cistac, Pierric; Rault, Tim; Louf, Remi; Funtowicz, Morgan; Davison, Joe; Shleifer, Sam; von Platen, Patrick; Ma, Clara; Jernite, Yacine; Plu, Julien; Xu, Canwen; Le Scao, Teven; Gugger, Sylvain; Drame, Mariama; Lhoest, Quentin; Rush, Alexander (2020). "Transformers: State-of-the-Art Natural Language Processing". Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations. pp. 38–45.

iii. Bender, Emily M.; Gebru, Timnit; McMillan-Major, Angelina; Shmitchell, Shmargaret (2021). "On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?". FAccT ’21, March 3–10, 2021, Virtual Event, Canada.

iv. “For the first time, we’ve shown a relationship between the degree of prefrontal brain activity, the ability to make technological judgments, and success in actually making stone tools,” says Dietrich Stout, an experimental archeologist at Emory University and the leader of the study. “The findings are relevant to ongoing debates about the origins of modern human cognition, and the role of technological and social complexity in brain evolution across species.” The study’s co-authors include Bruce Bradley of the University of Exeter in England; Thierry Chaminade of Aix-Marseille University in France; and Erin Hecht and Nada Khreisheh of Emory University. https://news.emory.edu/stories/2015/04/esc_stone_tools/campus.html

v. Coined by neurologist Marcus E. Raichle's lab at Washington University School of Medicine: “The DMN is a set of brain regions that exhibits strong low-frequency oscillations coherent during resting state and is thought to be activated when individuals are focused on their internal mental-state processes, such as self-referential processing, interoception, autobiographical memory retrieval, or imagining future. DMN is deactivated during cognitive task performance.”

Other

Italo Calvino, Oulipo: A Primer of Potential Literature, Dalkey Archive Press, 2015.

Joscha Bach - GPT-3: Is AI Deepfaking Understanding?, YouTube, September 2020.

K Allado-McDowell, Pharmako AI, Ignota Press, 2021.