The word ‘default’ has lost its essence and meaning. Facebook has once again changed the default features and settings of its software, a move that can be read as yet another push for its ideology of ‘connectedness and openness’. Let us not forget Beacon (2007), the changes to the privacy policy (2009) and the introduction of the Open Graph API along with a confusing overhaul of the privacy controls (2010). Timeline, ticker and the ‘new’ social applications add to Facebook’s long history of protocological implementations and the uproars that followed them.

Story of your life Facebook.

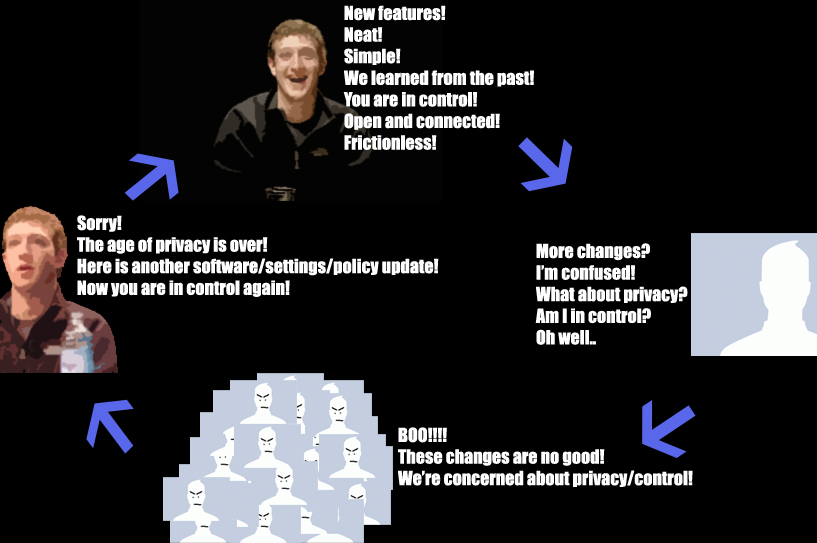

Facebook recently had a big PR moment at the so-called F8 conference, where it announced Timeline, ticker, real-time serendipity through new social applications, and the discovery of patterns and activities. Well, if you ask me, the only pattern worth discovering for Facebook users is how the corporation repeatedly frames its goals and image with a particular set of words to justify the immediate changes. The discourse analysis of PR communication and of the critical discourse surrounding software changes over time (2007-2010), which I conducted for my MA thesis ‘The Politics of Social Media. Facebook: Control & Resistance’, led me to the following pattern. As a counterbalance to Zuckerberg’s law on information sharing, you may refer to this as ‘Stumpel’s Law on discursive control’:

1: Make beneficial promises to the users by announcing a new feature or change of settings (promises that are often realized only partly, if at all).

2: Enact an immediate process of ‘reprogramming’ and/or ‘switching’. In other words: change the goals and operating logic of the network and/or (dis)connect Facebook to or from other networks (such as Spotify’s). This usually results in more exposure of the users’ information and/or more exploitable data connected to their Facebook IDs.

3: Give interested users, bloggers and journalists time to acknowledge and write about the changes.

4: Apologize for the drastic steps, often with another confusing software update. (Whether this happens depends on the impact of the critical discourse that emerges.)

Recurrent Pattern of Discourse[1]

This law is based on the notion that networks are primarily controlled through discourse. As sociologist Manuel Castells argues in Communication Power: “Discourses frame the options of what networks can or cannot do” (2009: p. 53).

Now let’s briefly look at some typical phrasing from the recent F8 event: ‘Frictionless sharing/experience’, “We’ve learned from Connect that social experiences are a lot more engaging for people”, ‘Simple’, “(…) Helping you to tell you the story of your life”, “Information design is sort of a religion”.

Every time Facebook makes beneficial promises to its users, its own gain, an ‘order of magnitude’ more exploitable data, goes unmentioned. The goal of the new Open Graph social apps is to track and share as much as possible: everything you [insert verb here] is automatically posted to your Facebook profile once you have connected to the application. For a vast number of users this will likely cause much more friction, complexity and confusion than there already is. Facebook is trading prompts that actually make users aware of their data connections for an I-agree-forever permission dialogue.

“I like the new Facebook. It’s so f*cking confusing and chaotic that I don’t nearly spend as much time on it as I used to.” (a friend on fb)

Those who connect to social apps might later forget they ever did. Then, while browsing the Web, they will automatically and unwillingly share information on their profiles, and Facebook will hardly be helping them tell the story of their lives; instead, they may spend hours removing posts that don’t quite fit their identity. My main concern is that, through discursive control, Facebook continuously justifies new and potentially troublesome changes to its platform. In my honest opinion, if you call your information design a ‘religion’, it isn’t really fair to convert users without their consent.

In the past, there have been cases in which Facebook reconfigured or even retracted certain software changes after critical discourse manifested itself in online protest groups, petition websites, class-action lawsuits, blog posts and news articles. Discursive resistance can be effective and lead to rollbacks. This time, however, with many business partners on its side, that may very well not be the case. At the end of the day, all these discursive processes together determine how far Facebook can push the envelope.

It is difficult to measure how many users are aware of Facebook’s striking decisions in governing the platform. This depends on several factors, including their level of engagement with the medium, their concern over managing flows of personal information, their view on privacy (if they have one) and when they registered.

Given that Facebook has imposed so many changes to the software over time, they are hard to keep track of. Thus, the moment someone registered for the service makes it more or less likely that they know about certain changes. For instance, people who join Facebook right now are likely to be unaware of the early Beacon debacle and its implications. However, users who want situational awareness can easily stumble upon relevant blog posts, news articles and press releases, both new and old.

What does ‘Do not judge Facebook by its cover’ actually mean? Facebook users can never fully judge or understand the software changes if they keep staring at their wall (or timeline, if you must). The user interface does not give users enough clarity about the implications of new protocological implementations. If you really want to know how Facebook’s changes affect you as a user, it pays to search for and read articles that do make sense of them. This time around, several articles stand out for bringing about discursive resistance:

–Unlike: Why Facebook Integration is Actually Antisocial by Mat Honan, September 21st, Gizmodo.

–Not Sharing Is Caring. Facebook’s terrible plan to get us to share everything we do on the Web by Farhad Manjoo, September 22nd, Slate.

–“Read” in Facebook – It’s Not a Button, So Be Careful What You Click! by Richard MacManus, September 22nd, ReadWriteWeb.

–What Facebook Really Wants by Nicholas Thompson, September 22nd, The New Yorker.

–The mischief of the ‘WANT’ button by Nastassia Epskamp, September 22nd, Meta Reporter.

–Facebook Users Are About To Riot Over Massive Changes, And This Is Fantastic News For Facebook by Nicholas Carlson, September 22nd, Business Insider SAI.

–Why Facebook is building a second internet. Facebook will be watching every move you make by Gary Marshall, September 23rd, TechRadar.

–Facebook is scaring me by Dave Winer, September 24th, Scripting News.

–Logging out of Facebook is not enough by Nik Cubrilovic, September 25th.

–As ‘Like’ Buttons Spread, So Do Facebook’s Tentacles by Riva Richmond, September 27th, The New York Times.

–Groups ask Feds to ban Facebook’s ‘frictionless sharing’ by Declan McCullagh, September 29th, CNET News.

–Occupying Facebook by Dave Winer, October 5th, Scripting News.

[1] Some rights reserved (CC BY-NC-SA 2.0). Pictures adapted from Andrew Feinberg (CC BY 2.0) and kris krüg (CC BY-NC-SA 2.0).